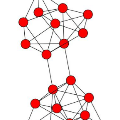

Dynamic Network Embedding (DNE) has recently attracted considerable attention, owing to the usefulness of network embedding in various fields and the dynamic nature of many real-world networks. The input dynamic network to a DNE method is often assumed to change smoothly across snapshots, an assumption that does not hold in all real-world scenarios. It is therefore natural to ask whether existing DNE methods perform well when the input dynamic network does not change smoothly. To quantify this, an index called Degree of Changes (DoCs) is suggested, such that a smaller DoCs indicates smoother changes. Our comparative study shows that several DNE methods are not robust to different DoCs, even when the corresponding input dynamic networks come from the same dataset, which makes these methods unreliable and hard to use in unknown real-world applications. To obtain an effective and more robust DNE method, we follow the notion of ensembles, where each base learner adopts an incremental Skip-Gram embedding model. To further boost performance, a simple yet effective strategy is designed to enhance the diversity among base learners at each timestep by capturing different levels of local-global topology. Extensive experiments demonstrate the superior effectiveness and robustness of the proposed method compared with state-of-the-art DNE methods, as well as the benefits of its special designs and its scalability.
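The abstract does not give the formula for DoCs, so the following is only a minimal sketch of how the amount of change between consecutive snapshots might be measured. It assumes undirected, unweighted snapshots given as edge lists and uses the fraction of edges that differ between two snapshots as a stand-in measure. The names `edge_change_ratio` and `change_profile` are hypothetical and are not taken from the paper. As with DoCs, a smaller value corresponds to smoother changes.

```python
def edge_change_ratio(prev_edges, curr_edges):
    """Fraction of undirected edges that differ between two snapshots.

    Hypothetical stand-in for a change measure; NOT the paper's DoCs formula.
    Returns a value in [0, 1]; 0 means identical edge sets.
    """
    # frozenset makes (u, v) and (v, u) compare equal (undirected edges).
    prev = {frozenset(e) for e in prev_edges}
    curr = {frozenset(e) for e in curr_edges}
    union = prev | curr
    if not union:
        return 0.0  # two empty snapshots: no change
    # Symmetric difference = edges added plus edges removed.
    return len(prev ^ curr) / len(union)


def change_profile(snapshots):
    """Change ratio for every pair of consecutive snapshots."""
    return [edge_change_ratio(a, b) for a, b in zip(snapshots, snapshots[1:])]


# Toy dynamic network: one smooth step, then one abrupt step.
snapshots = [
    [(1, 2), (2, 3), (3, 4)],
    [(1, 2), (2, 3), (3, 4), (4, 5)],  # one edge added
    [(1, 5), (2, 4)],                  # completely rewired
]
profile = change_profile(snapshots)
print(profile)
```

On this toy input the first step changes one edge out of four, and the second step shares no edges with its predecessor, so the second value is the maximum of 1. A profile like this could be used to group input networks by how abruptly they change before comparing DNE methods on them.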
