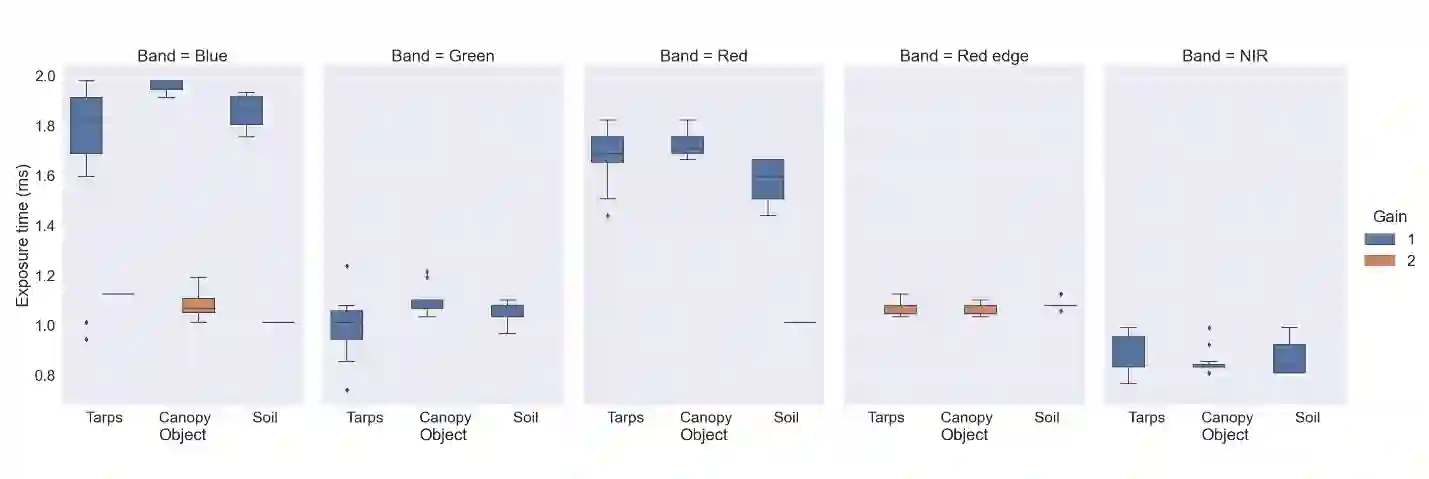

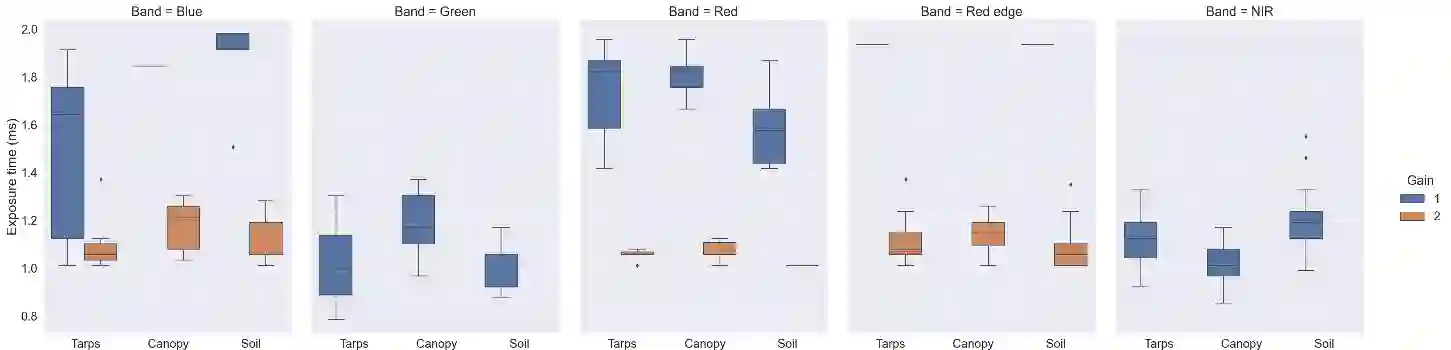

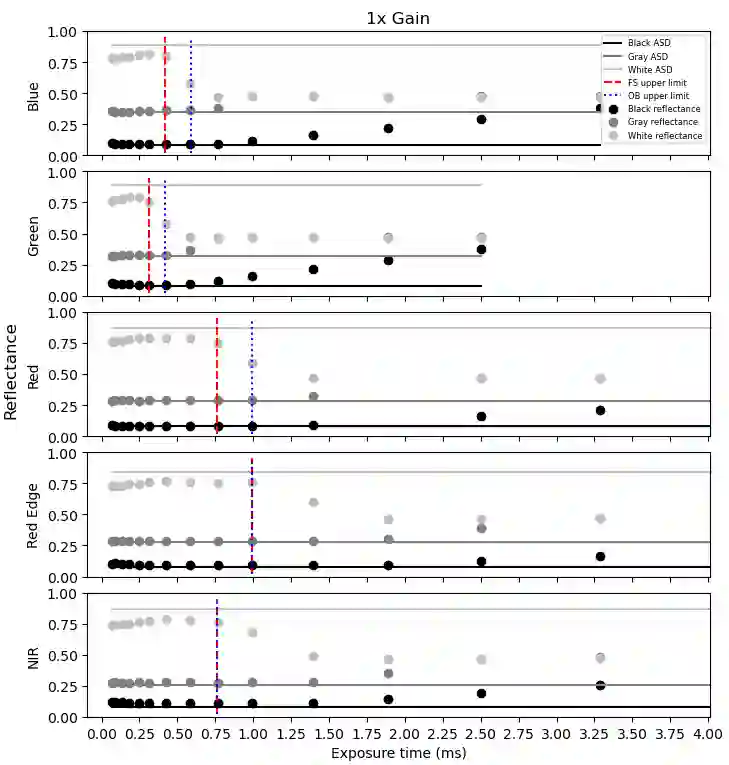

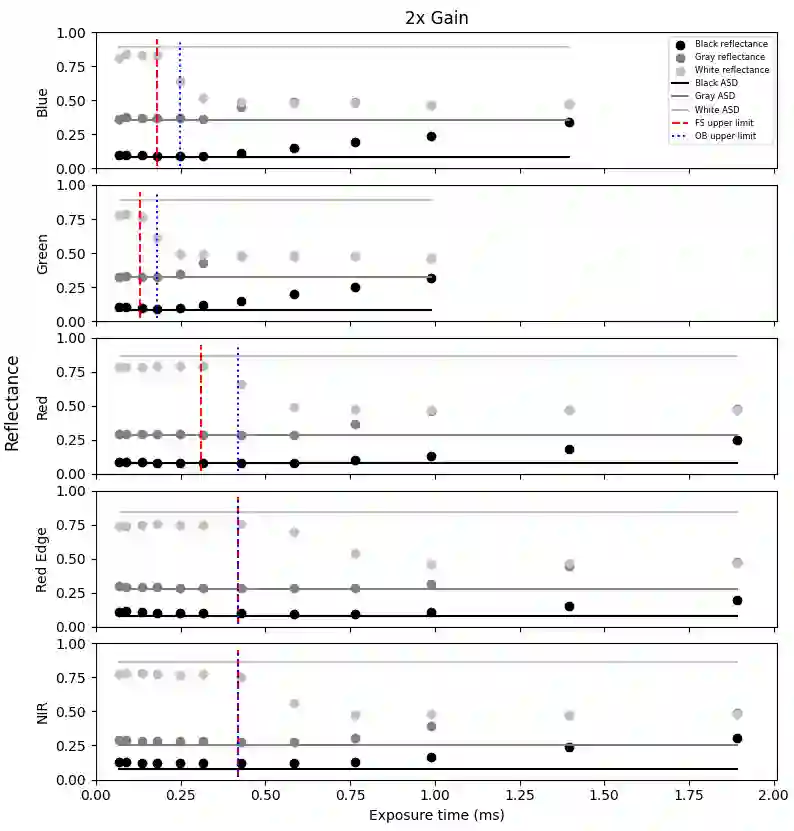

Radiometric accuracy is crucial in quantitative precision agriculture, where reliable and repeatable data are needed for modeling and decision making. The effect of exposure time and gain settings on the radiometric accuracy of multispectral images has not been sufficiently explored. The goal of this study was to determine whether a fixed exposure (FE) time during image acquisition improves the radiometric accuracy of images compared to the default auto-exposure (AE) settings. This involved quantifying the errors from auto-exposure and determining the ideal exposure values within which the radiometric mean absolute percentage error (MAPE) was minimal (< 5%). The results showed that the FE orthomosaic was closer to ground truth (higher R2 and lower MAPE) than the AE orthomosaic. An ideal exposure range was determined for capturing canopy and soil objects without loss of information from under-exposure or saturation from over-exposure. A simulation of errors from AE showed that MAPE was < 5% for the blue, green, red, and NIR bands and < 7% for the red edge band for exposure settings within the determined ideal ranges, and that it increased exponentially beyond the upper limit of the ideal exposure range. Further, predictions of total plant nitrogen uptake (g/plant) using vegetation indices (VIs) from two different growing seasons were closer to the ground truth when FE was used (mostly R2 > 0.40 and MAPE = 12 to 14%, p < 0.05) than predictions from AE images (mostly R2 < 0.13 and MAPE = 15 to 18%, p >= 0.05).
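
The two agreement metrics quoted above can be illustrated with a minimal sketch. It is not the study's code: the function names `mape` and `r_squared` and the sample reflectance values are hypothetical, and R2 is assumed here to be the squared Pearson correlation between ground-truth and image-derived values, which may differ from the definition the authors used.

```python
import numpy as np


def mape(truth, estimate):
    """Mean absolute percentage error, in percent, relative to ground truth."""
    truth = np.asarray(truth, dtype=float)
    estimate = np.asarray(estimate, dtype=float)
    return float(np.mean(np.abs((estimate - truth) / truth)) * 100.0)


def r_squared(truth, estimate):
    """Squared Pearson correlation (assumed definition of R2 for this sketch)."""
    truth = np.asarray(truth, dtype=float)
    estimate = np.asarray(estimate, dtype=float)
    r = np.corrcoef(truth, estimate)[0, 1]
    return float(r ** 2)


# Hypothetical reflectance values: reference panel readings vs. image-derived values.
ground_truth = [0.10, 0.20, 0.40]
from_image = [0.11, 0.19, 0.40]

print(f"MAPE = {mape(ground_truth, from_image):.2f}%")
print(f"R2   = {r_squared(ground_truth, from_image):.3f}")
```

Under these assumptions, a band would meet the abstract's accuracy criterion when `mape` returns a value below 5 (or below 7 for the red edge band).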
