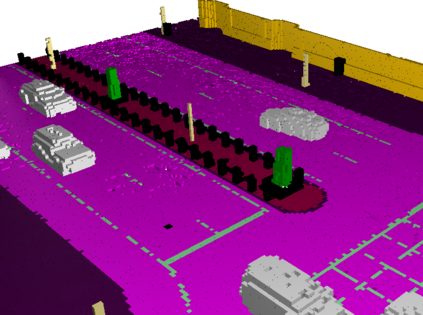

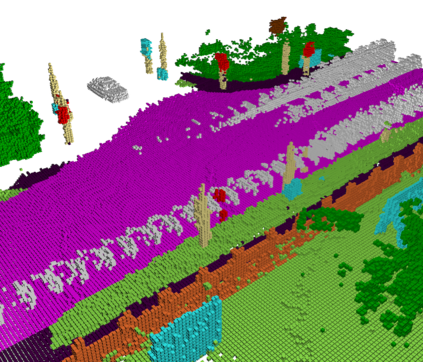

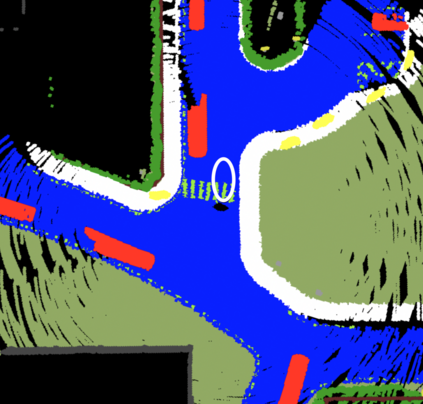

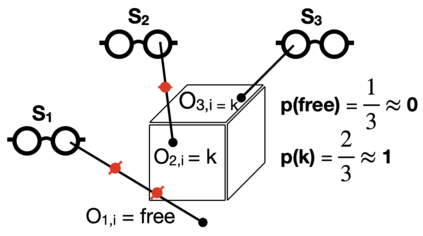

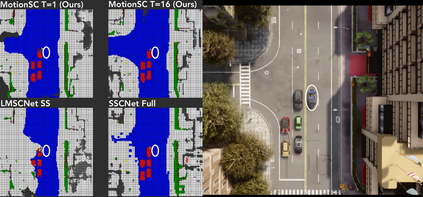

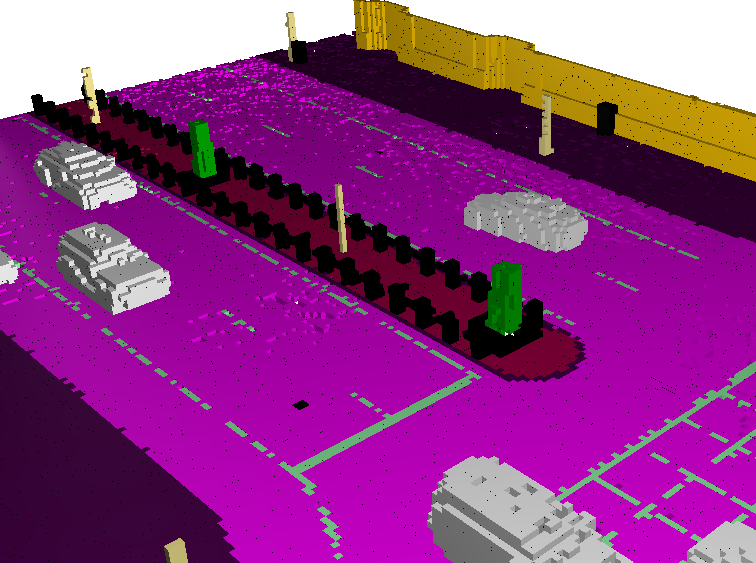

This work addresses a gap in semantic scene completion (SSC) data by creating a novel outdoor data set with accurate and complete dynamic scenes. Our data set is formed from randomly sampled views of the world at each time step, which supervises generalization to complete scenes without occlusions or the traces left by moving objects. We create SSC baselines from state-of-the-art open-source networks and construct a benchmark real-time dense local semantic mapping algorithm, MotionSC, which leverages recent 3D deep learning architectures to enhance SSC with temporal information. Our network shows that the proposed data set can quantify and supervise accurate scene completion in the presence of dynamic objects, which can lead to the development of improved dynamic mapping algorithms. All software is available at https://github.com/UMich-CURLY/3DMapping.
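To quantify scene-completion accuracy, SSC work commonly reports two voxel-level scores: a geometric completion IoU, which treats every non-empty voxel as simply "occupied", and a per-class semantic IoU averaged into mIoU. The following is a minimal sketch under stated assumptions: predictions and labels are flattened voxel grids of integer class IDs, and ID 0 (the hypothetical `FREE` constant) marks empty space. It is not the evaluation code from the repository above, and benchmarks differ on details such as which classes enter the mean.

```python
from typing import Sequence

FREE = 0  # hypothetical label ID for empty (free) space


def _check(pred: Sequence[int], gt: Sequence[int]) -> None:
    # Both grids must cover the same voxels in the same order.
    if len(pred) != len(gt):
        raise ValueError("pred and gt must have the same number of voxels")


def completion_iou(pred: Sequence[int], gt: Sequence[int]) -> float:
    """Geometric IoU: any non-FREE voxel counts as occupied, class ignored."""
    _check(pred, gt)
    inter = union = 0
    for p, g in zip(pred, gt):
        p_occ, g_occ = p != FREE, g != FREE
        if p_occ and g_occ:
            inter += 1
        if p_occ or g_occ:
            union += 1
    return inter / union if union else 0.0


def semantic_miou(pred: Sequence[int], gt: Sequence[int], num_classes: int) -> float:
    """Mean IoU over semantic classes 1..num_classes-1 (FREE is excluded).

    Classes absent from both pred and gt are skipped; this is one of
    several conventions in use, assumed here for illustration.
    """
    _check(pred, gt)
    ious = []
    for c in range(1, num_classes):
        tp = sum(1 for p, g in zip(pred, gt) if p == c and g == c)
        fp = sum(1 for p, g in zip(pred, gt) if p == c and g != c)
        fn = sum(1 for p, g in zip(pred, gt) if p != c and g == c)
        denom = tp + fp + fn
        if denom:
            ious.append(tp / denom)
    return sum(ious) / len(ious) if ious else 0.0
```

For example, with `gt = [0, 1, 1, 2, 2, 0]` and `pred = [0, 1, 2, 2, 0, 1]`, `completion_iou(pred, gt)` returns 0.6 (3 shared occupied voxels out of 5 occupied in either grid), and `semantic_miou(pred, gt, 3)` returns 1/3, since classes 1 and 2 each score 1/3. Separating the two scores shows whether errors come from missing geometry or from wrong labels on correctly completed voxels.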

翻译:这项工作通过创建具有准确和完整动态场景的新型户外数据集来解决语义场景完成数据的差距。 我们的数据集来自对世界每个时间步骤的随机抽样观点,该数据集监督通用性,在没有隔离或痕迹的情况下完成场景。 我们从最先进的开放源网络创建了SSC基线,并通过利用最近的3D深层学习结构,利用时间信息来增强SSC。我们的网络显示,拟议的数据集可以在动态物体出现时量化和监督准确场景完成情况,从而可以导致改进动态绘图算法。所有软件都可在https://github.com/UMich-CURLY/3Dapting上查阅。