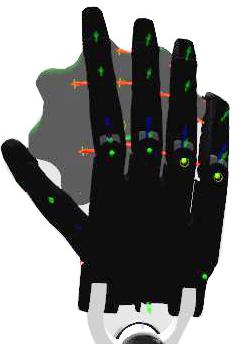

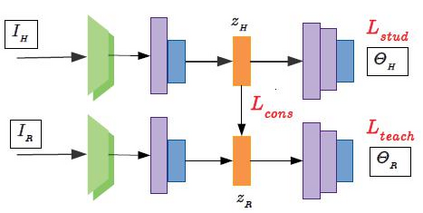

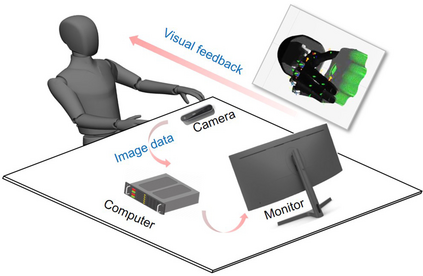

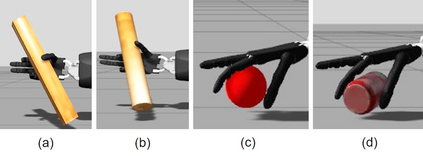

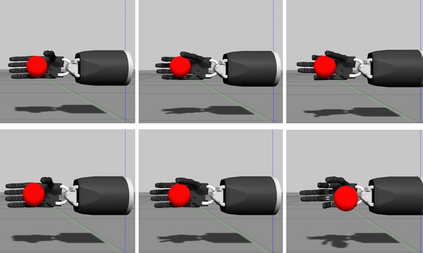

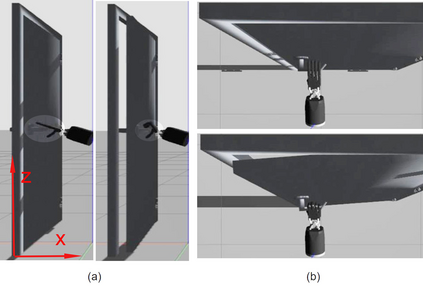

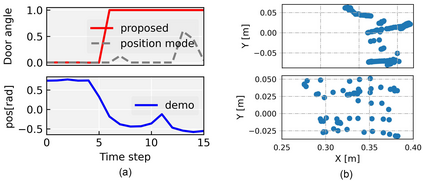

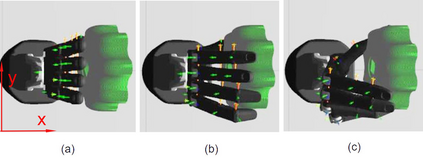

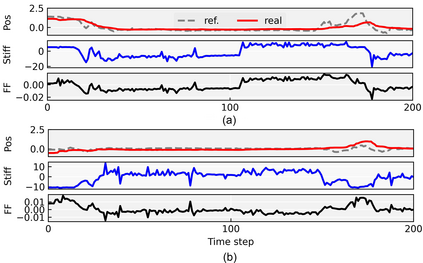

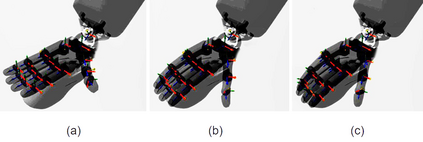

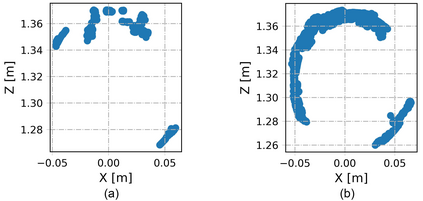

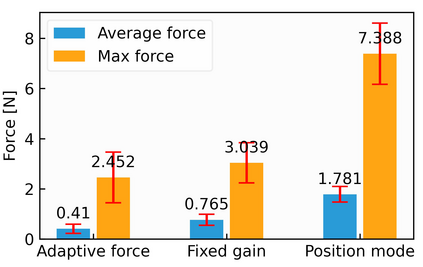

In this work, we focus on improving the robot's dexterous capability by exploiting visual sensing and adaptive force control. TeachNet, a vision-based teleoperation learning framework, is used to map human hand postures to a multi-fingered robot hand. We augment TeachNet, which was originally based on an imprecise kinematic mapping and position-only servoing, with a biomimetic learning-based compliance control algorithm for dexterous manipulation tasks. This compliance controller takes the mapped robotic joint angles from TeachNet as the desired goal and computes the desired joint torques. It is derived from a computational model of the biomimetic control strategy in human motor learning, which allows the control variables (impedance and feedforward force) to be adapted online during execution of the reference joint angle trajectories. The simultaneous adaptation of the impedance and feedforward profiles enables the robot to interact with the environment in a compliant manner. Our approach has been verified in physics simulation on multiple tasks, namely grasping, opening a door, turning a cap, and touching a mouse, and has shown more reliable performance than the existing position control and fixed-gain force control approaches.
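To make the idea of adapting impedance and feedforward force online more concrete, the following is a minimal per-joint sketch. It is not the controller from this work: the class name `AdaptiveComplianceController`, the combined error `s`, the learning rates, the forgetting factor `gamma`, and the specific update rules are all illustrative assumptions, loosely in the spirit of error-driven adaptation laws used in biomimetic impedance control. The desired joint angles `q_des` stand in for the output of the vision-based mapping.

```python
from dataclasses import dataclass, field
from typing import List, Sequence


@dataclass
class AdaptiveComplianceController:
    """Illustrative per-joint adaptive impedance + feedforward controller.

    All parameters and update rules are assumptions made for this sketch,
    not values or equations taken from the paper.
    """
    n_joints: int
    kappa: float = 1.0     # weight of the position error in the combined error
    gamma: float = 0.01    # forgetting factor: lets gains relax when errors are small
    rate_ff: float = 0.5   # learning rate for the feedforward torque
    rate_k: float = 2.0    # learning rate for the stiffness
    rate_d: float = 0.2    # learning rate for the damping
    ff: List[float] = field(default_factory=list)
    k: List[float] = field(default_factory=list)
    d: List[float] = field(default_factory=list)

    def __post_init__(self) -> None:
        # Start fully compliant: no feedforward torque, zero stiffness and damping.
        self.ff = [0.0] * self.n_joints
        self.k = [0.0] * self.n_joints
        self.d = [0.0] * self.n_joints

    def step(self, q_des: Sequence[float], q: Sequence[float],
             dq_des: Sequence[float], dq: Sequence[float]) -> List[float]:
        """Adapt the control variables from the tracking error, then return joint torques."""
        torques = []
        for i in range(self.n_joints):
            e = q_des[i] - q[i]        # position error
            de = dq_des[i] - dq[i]     # velocity error
            s = de + self.kappa * e    # combined tracking error

            # Error-driven growth with a forgetting term; gains are clamped at zero.
            self.ff[i] += self.rate_ff * (s - self.gamma * self.ff[i])
            self.k[i] = max(0.0, self.k[i] + self.rate_k * (s * e - self.gamma * self.k[i]))
            self.d[i] = max(0.0, self.d[i] + self.rate_d * (s * de - self.gamma * self.d[i]))

            # Torque = feedforward term + impedance (stiffness and damping) terms.
            torques.append(self.ff[i] + self.k[i] * e + self.d[i] * de)
        return torques


# Usage: one control step for a single joint that is 1 rad away from its target.
controller = AdaptiveComplianceController(n_joints=1)
tau = controller.step(q_des=[1.0], q=[0.0], dq_des=[0.0], dq=[0.0])
```

In this sketch, stiffness and feedforward torque grow only while a tracking error persists and decay through the forgetting term otherwise, which is one simple way to obtain compliant behavior in free motion and firmer behavior in contact. A fixed-gain controller would correspond to holding `k` and `d` constant and skipping the updates.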
