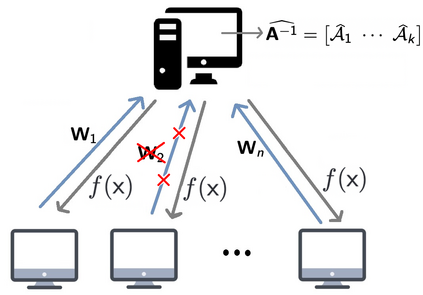

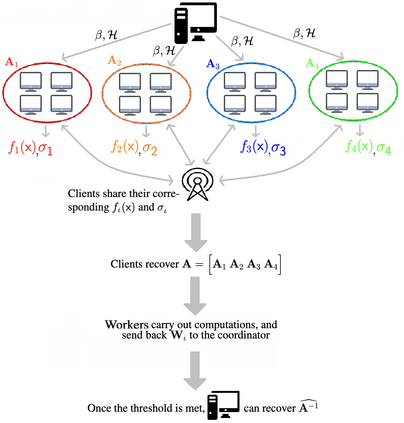

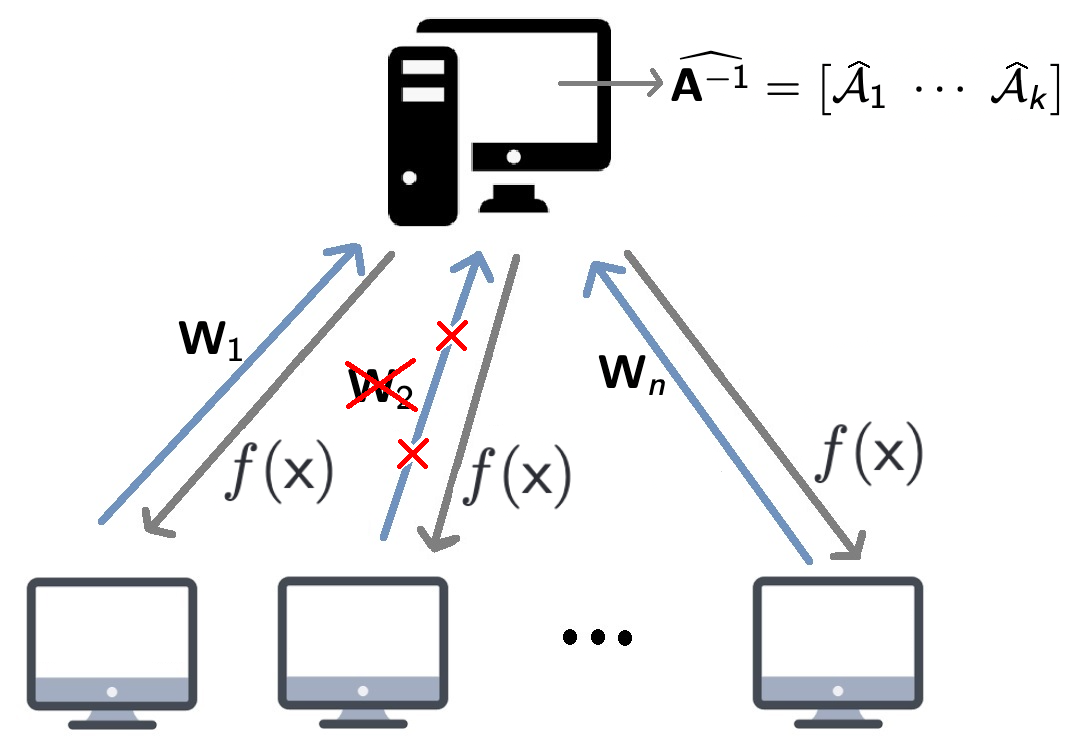

Federated learning (FL) is a decentralized paradigm for training models on data distributed across client devices. Coded computing (CC) is a method for mitigating straggling workers in a centralized computing network by using erasure-coding techniques. In this work, we propose approximating the inverse of a data matrix whose data is generated by clients, as in the FL paradigm, while remaining resilient to stragglers. To do so, we propose a CC method based on gradient coding. We modify this method so that the coordinator does not need access to the local data, the method operates over a network that is not centralized, and the communications that take place are secure against potential eavesdroppers.
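
The abstract does not detail the scheme, so the following is only a minimal sketch under our own assumptions, not the authors' method. It assumes that column i of the inverse of A is approximated by gradient descent on 1/2 ||A x - e_i||^2, whose gradient A^T (A x - e_i) splits into partial gradients over row blocks of A. It then applies the classical fractional repetition scheme from the gradient coding literature: workers are grouped in sets of s+1, every worker in a group returns the sum of the partial gradients for the partitions owned by its group, and the coordinator recovers the exact full gradient from any n - s workers. The function names (`approx_inverse`, `worker_message`, `decode`, `split_rows`), the row-block partitioning, and the random straggler model are hypothetical, and the sketch ignores the federated aspects (keeping local data from the coordinator, decentralization, security against eavesdroppers).

```python
import random

# Hypothetical sketch: names, partitioning, and straggler model are illustrative
# assumptions, not the scheme proposed in the paper.


def block_gradient(rows, rhs, x):
    """Partial gradient A_j^T (A_j x - b_j) of 0.5*||A x - b||^2 for one row block."""
    g = [0.0] * len(x)
    for row, b in zip(rows, rhs):
        r = sum(a * xi for a, xi in zip(row, x)) - b  # residual of this row
        for k, a in enumerate(row):
            g[k] += a * r
    return g


def vec_add(u, v):
    return [ui + vi for ui, vi in zip(u, v)]


def worker_message(w, s, blocks, rhs_blocks, x):
    """Fractional repetition: worker w belongs to group w // (s+1) and returns
    the sum of the partial gradients of the s+1 partitions owned by that group."""
    grp = w // (s + 1)
    msg = [0.0] * len(x)
    for p in range(grp * (s + 1), (grp + 1) * (s + 1)):
        msg = vec_add(msg, block_gradient(blocks[p], rhs_blocks[p], x))
    return msg


def decode(messages, n_workers, s):
    """Recover the full gradient from the non-stragglers' messages.
    With at most s stragglers, every group of s+1 workers has a survivor."""
    total = None
    for grp in range(n_workers // (s + 1)):
        members = range(grp * (s + 1), (grp + 1) * (s + 1))
        survivor = next(w for w in members if w in messages)
        total = messages[survivor] if total is None else vec_add(total, messages[survivor])
    return total


def split_rows(A, n_parts):
    size = len(A) // n_parts  # assumes n_parts divides the number of rows
    return [A[i * size:(i + 1) * size] for i in range(n_parts)]


def approx_inverse(A, n_workers=4, s=1, iters=2000, seed=0):
    """Approximate A^{-1} column by column: column i minimizes 0.5*||A x - e_i||^2,
    solved by gradient descent whose gradients are gradient-coded."""
    n = len(A)
    assert n % n_workers == 0 and n_workers % (s + 1) == 0
    rng = random.Random(seed)
    blocks = split_rows(A, n_workers)
    # Step 1/L with L = ||A||_1 * ||A||_inf >= lambda_max(A^T A).
    row_norm = max(sum(abs(a) for a in row) for row in A)
    col_norm = max(sum(abs(A[r][c]) for r in range(n)) for c in range(n))
    step = 1.0 / (row_norm * col_norm)
    columns = []
    for i in range(n):
        e_i = [1.0 if k == i else 0.0 for k in range(n)]
        rhs_blocks = split_rows(e_i, n_workers)
        x = [0.0] * n
        for _ in range(iters):
            stragglers = set(rng.sample(range(n_workers), s))  # simulated slow workers
            msgs = {w: worker_message(w, s, blocks, rhs_blocks, x)
                    for w in range(n_workers) if w not in stragglers}
            grad = decode(msgs, n_workers, s)
            x = [xi - step * gi for xi, gi in zip(x, grad)]
        columns.append(x)
    # columns[i] approximates column i of A^{-1}; transpose to row-major order.
    return [[columns[j][i] for j in range(n)] for i in range(n)]


A = [[4.0, 1.0, 0.0, 0.0],
     [1.0, 3.0, 1.0, 0.0],
     [0.0, 1.0, 5.0, 1.0],
     [0.0, 0.0, 1.0, 4.0]]
A_inv = approx_inverse(A)
```

Because any pattern of at most `s` stragglers still leaves one survivor per group, the decoded gradient equals the uncoded one; stragglers change only which messages are used, not the iterates. For this small, well-conditioned example, the product of `A` and `A_inv` should be close to the identity.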
