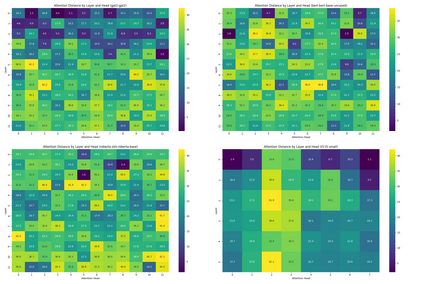

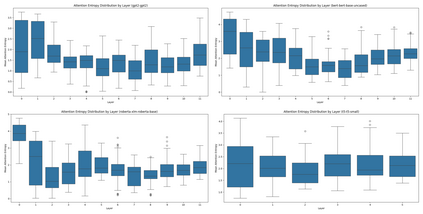

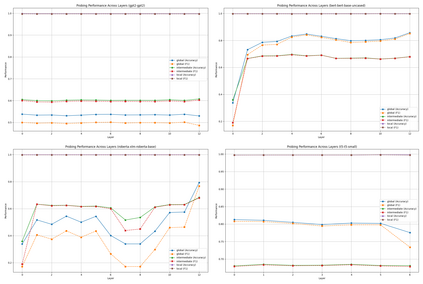

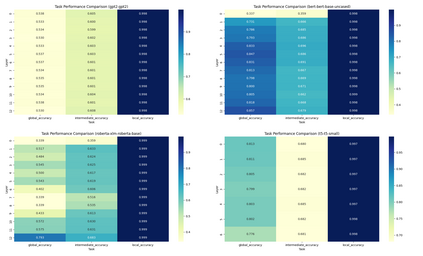

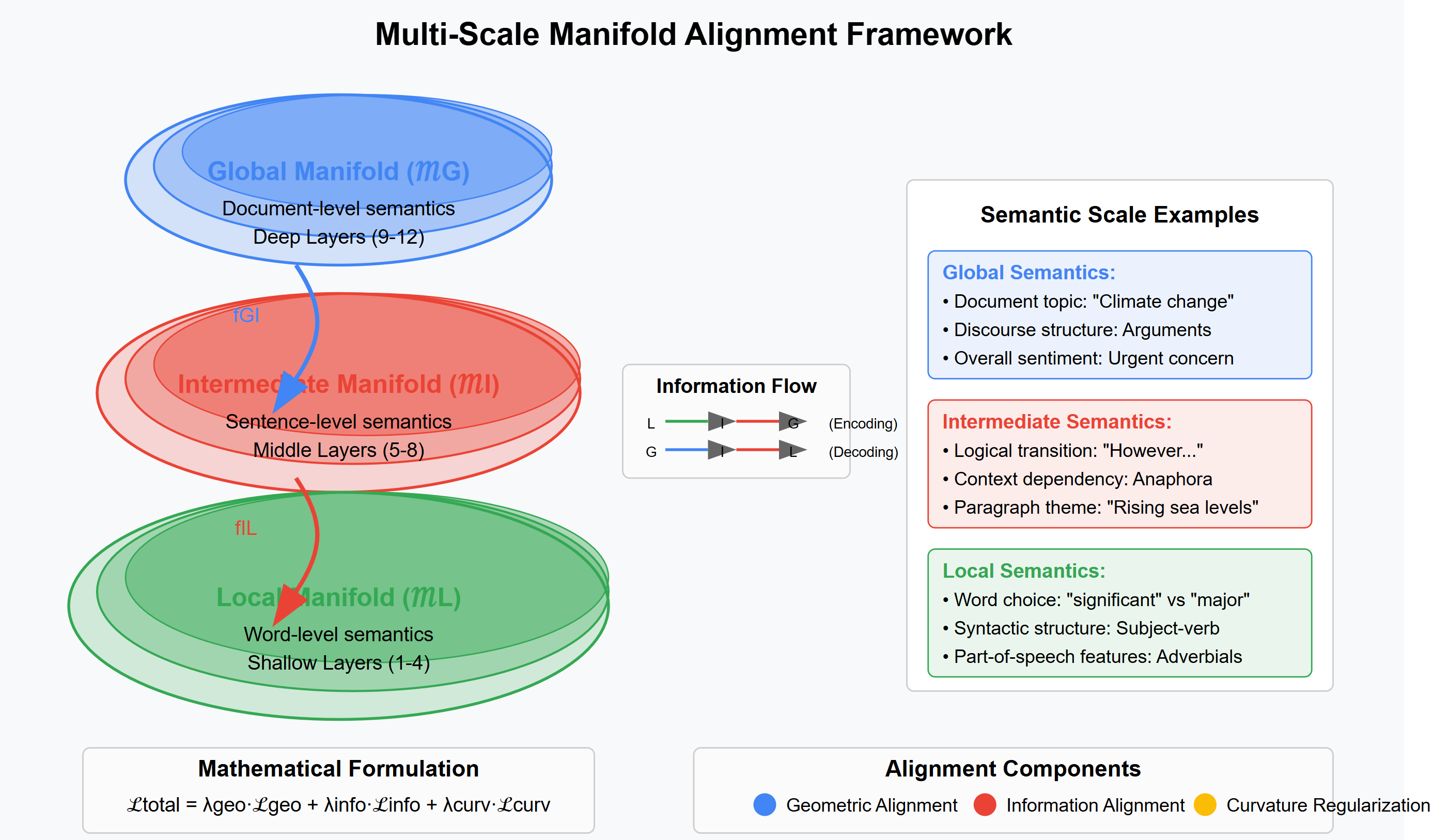

We present Multi-Scale Manifold Alignment (MSMA), an information-geometric framework that decomposes LLM representations into local, intermediate, and global manifolds and learns cross-scale mappings that preserve geometry and information. Across GPT-2, BERT, RoBERTa, and T5, we observe consistent hierarchical patterns and find that MSMA improves alignment metrics under multiple estimators (e.g., relative KL-divergence reduction and mutual-information gains that are statistically significant across seeds). Controlled interventions at different scales yield distinct, architecture-dependent effects on lexical diversity, sentence structure, and discourse coherence. Although our theoretical analysis relies on idealized assumptions, the empirical results suggest that multi-objective alignment offers a practical lens for analyzing cross-scale information flow and guiding representation-level control.
