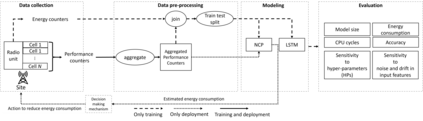

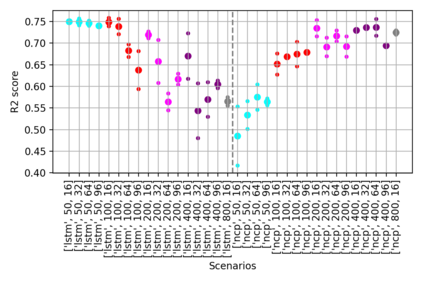

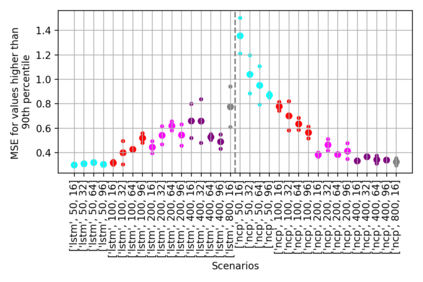

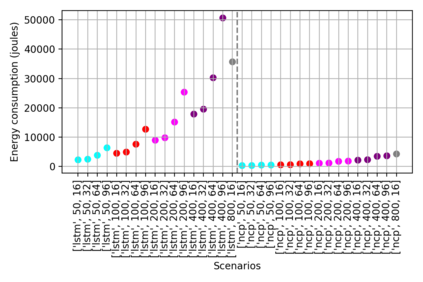

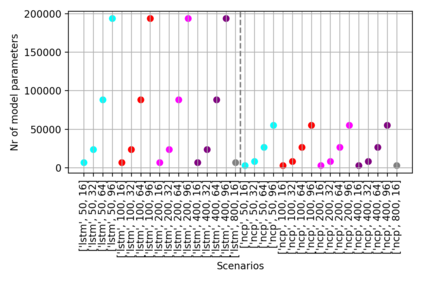

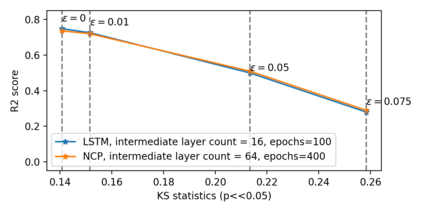

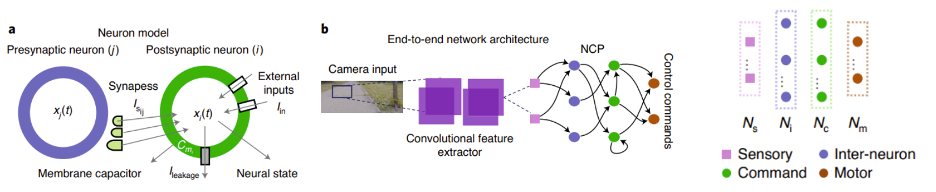

Optimizing radio hardware and AI-based network management software yields significant energy savings in radio access networks. However, executing the underlying Machine Learning (ML) models, which enable these savings through recommended actions, may itself require additional compute and energy. This motivates the exploration and adoption of ML technologies that are both accurate and energy-efficient. This work evaluates the novel use of sparsely structured Neural Circuit Policies (NCPs) for estimating the energy consumption of base stations. Sparsity in ML models reduces memory, computation, and energy demand, and thereby facilitates a low-cost and scalable solution. We also evaluate the generalization capability of NCPs in comparison with traditional, widely used ML models such as Long Short-Term Memory (LSTM) by quantifying their sensitivity to varying model hyper-parameters (HPs). NCPs demonstrated a clear reduction in computational overhead and energy consumption. Moreover, the results indicated that NCPs are robust to varying HPs, such as the number of epochs and the number of neurons in each layer, making them a suitable option for easing model management and reducing energy consumption in Machine Learning Operations (MLOps) in telecommunications.
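To illustrate why sparsity lowers memory and compute demand, the following minimal sketch compares the trainable-weight count of a fully connected recurrent layer with that of a sparsely wired one. It is only an illustration under stated assumptions: the function names, the layer sizes, and the `density` parameter are hypothetical, and the counting rule is a generic sparse-mask model, not the NCP wiring or the configuration used in this work.

```python
# Illustrative only: a generic sparse-mask parameter count, not the actual NCP wiring.

def dense_recurrent_params(n_inputs: int, n_hidden: int) -> int:
    """Weights of a simple fully connected recurrent layer.

    Each hidden unit receives every input and every hidden state,
    plus one bias term per hidden unit.
    """
    return n_hidden * (n_inputs + n_hidden) + n_hidden


def sparse_recurrent_params(n_inputs: int, n_hidden: int, density: float) -> int:
    """Weights of the same layer when only a fraction `density` of the
    connections is kept (hypothetical sparsity model); biases stay dense.
    """
    if not 0.0 <= density <= 1.0:
        raise ValueError("density must lie in [0, 1]")
    connections = n_hidden * (n_inputs + n_hidden)
    return round(density * connections) + n_hidden


if __name__ == "__main__":
    # Assumed example sizes: 8 input features, 32 hidden units, 25% connectivity.
    dense = dense_recurrent_params(8, 32)
    sparse = sparse_recurrent_params(8, 32, 0.25)
    print(f"dense: {dense} weights, sparse: {sparse} weights")
    print(f"fraction of weights kept: {sparse / dense:.2f}")
```

Under these assumed sizes, the sparse layer stores and updates roughly a quarter of the weights of the dense one, which is the kind of reduction in memory and computation that the abstract attributes to sparse models. Actual savings depend on the wiring and on how well the runtime exploits sparsity.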
