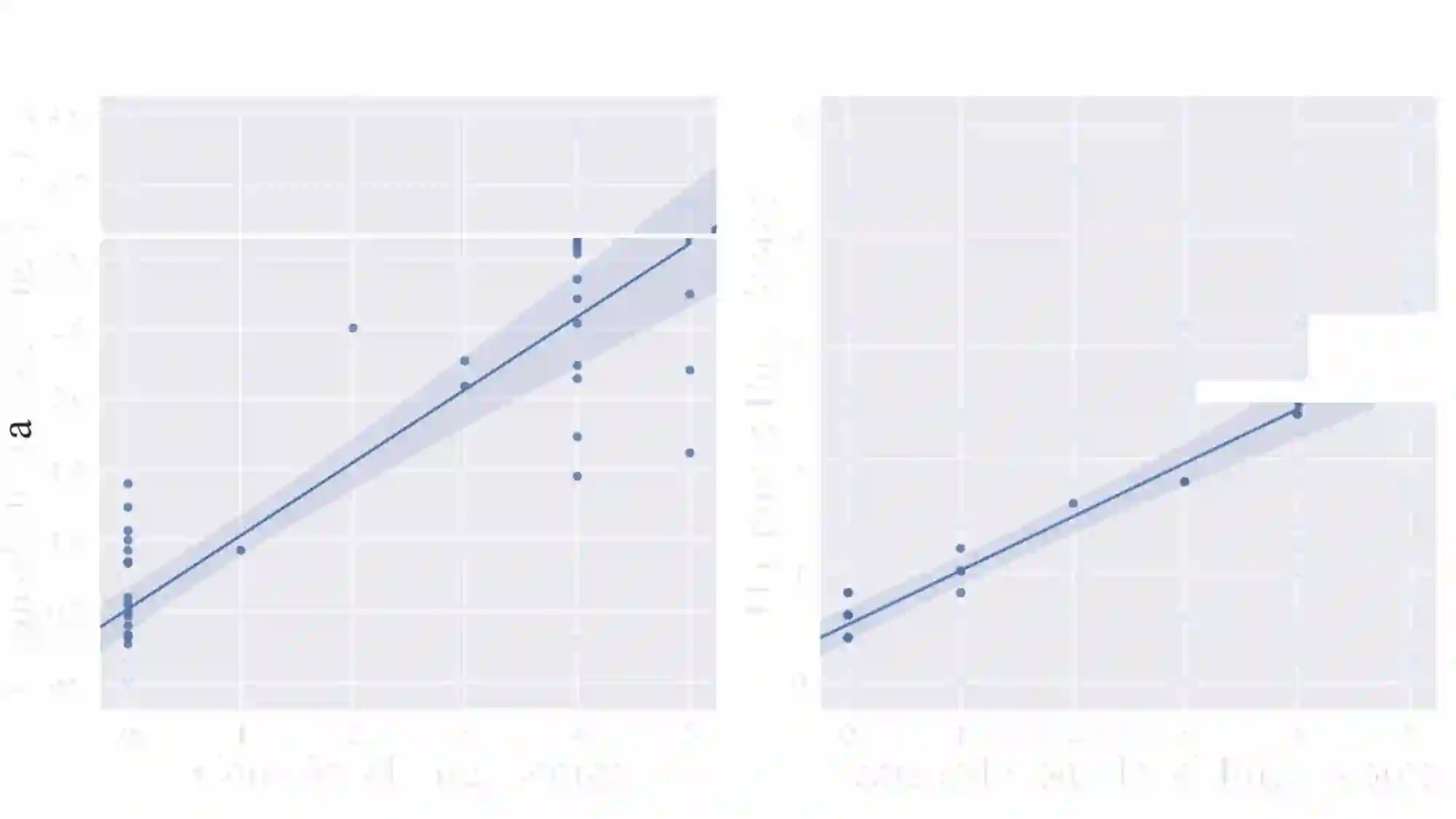

User engagement is a critical metric for evaluating the quality of open-domain dialogue systems. Prior work has measured engagement at the conversation level, using heuristically constructed features such as the number of turns and the total time of the conversation. In this paper, we investigate the possibility and efficacy of estimating utterance-level engagement and define a novel metric, *predictive engagement*, for automatic evaluation of open-domain dialogue systems. Our experiments demonstrate that (1) human annotators have high agreement when assessing utterance-level engagement scores, and (2) conversation-level engagement scores can be predicted from properly aggregated utterance-level engagement scores. Furthermore, we show that utterance-level engagement scores can be learned from data. These scores can improve automatic evaluation metrics for open-domain dialogue systems, as shown by correlation with human judgements. This suggests that predictive engagement can be used as real-time feedback for training better dialogue models.
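The aggregation step in claim (2) can be illustrated with a minimal sketch. It assumes the aggregation is a plain mean over utterance scores and that agreement with human judgements is measured by Pearson correlation. The abstract specifies neither, so both are illustrative assumptions. The function names and toy data are hypothetical and are not taken from the paper.

```python
from math import sqrt
from statistics import mean


def aggregate_engagement(utterance_scores):
    """Collapse per-utterance engagement scores into one conversation-level score.

    Uses the mean as the aggregation function (an assumption; the abstract
    only says the scores are "properly aggregated").
    """
    if not utterance_scores:
        raise ValueError("conversation has no scored utterances")
    return mean(utterance_scores)


def pearson(xs, ys):
    """Pearson correlation coefficient between two equal-length sequences."""
    mx, my = mean(xs), mean(ys)
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    var_x = sum((x - mx) ** 2 for x in xs)
    var_y = sum((y - my) ** 2 for y in ys)
    return cov / sqrt(var_x * var_y)


# Hypothetical toy data: utterance-level scores for three conversations,
# plus one human conversation-level engagement rating for each.
conversations = [[0.9, 0.8, 0.7], [0.2, 0.4, 0.3], [0.6, 0.5, 0.7]]
human_scores = [0.85, 0.25, 0.6]

predicted = [aggregate_engagement(scores) for scores in conversations]
r = pearson(predicted, human_scores)
print(f"correlation with human ratings: {r:.3f}")
```

In this framing, a high correlation on held-out conversations would support using the aggregated utterance-level score as a stand-in for a conversation-level rating.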
