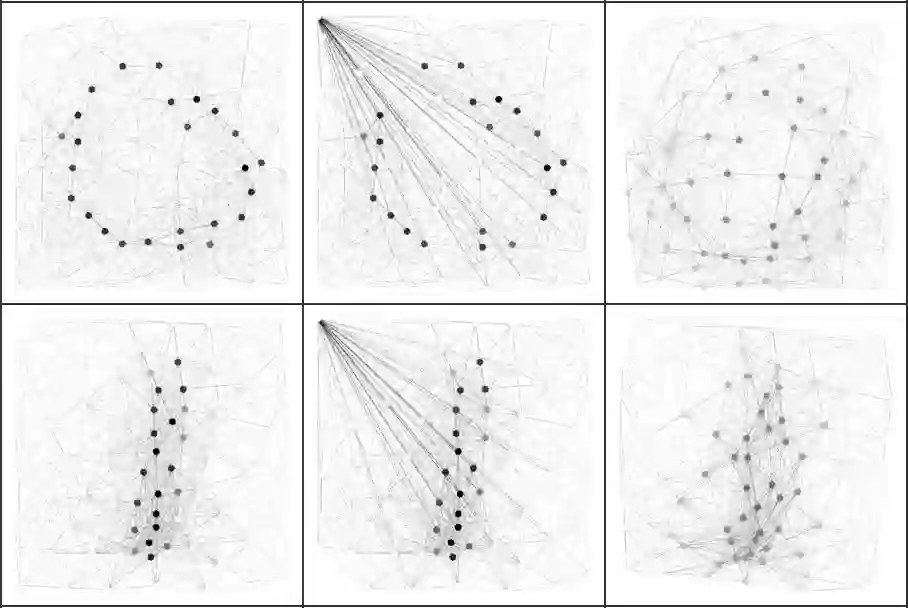

We propose the Graph Context Encoder (GCE), a simple but efficient approach to graph representation learning based on graph feature masking and reconstruction. Similarly to a graph autoencoder, GCE models are trained to efficiently reconstruct input graphs whose node and edge labels have been masked. In particular, our model can also change graph structure, because it is trained to mask and reconstruct graphs augmented with random pseudo-edges. We show that GCE can be used for novel graph generation, with applications to molecule generation. Used as a pretraining method, GCE also improves baseline performance on supervised classification tasks across multiple standard benchmark graph datasets.
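The corruption step described above can be sketched as follows. This is a minimal Python illustration of one possible reading of the abstract, not the authors' implementation. It first adds random pseudo-edges between unconnected node pairs. It then replaces a random subset of node and edge labels with a mask token and keeps the original labels as reconstruction targets. The names `Graph`, `corrupt`, `MASK`, and `NO_EDGE` are hypothetical, as are the defaults `n_pseudo=2` and `mask_rate=0.15`. Giving pseudo-edges an explicit "none" label is also an assumption.

```python
import random
from dataclasses import dataclass

MASK = "[MASK]"   # hypothetical mask token
NO_EDGE = "none"  # hypothetical label meaning "this pair is not really bonded"


@dataclass
class Graph:
    node_labels: list  # node_labels[i] is the label of node i
    edges: dict        # (u, v) with u < v -> edge label


def corrupt(graph, n_pseudo=2, mask_rate=0.15, rng=None):
    """Return (corrupted_graph, targets) for a masked-reconstruction objective.

    targets maps ("node", i) or ("edge", (u, v)) to the original label the
    model should predict at that masked position. The input is not modified.
    """
    if rng is None:
        rng = random.Random()
    n = len(graph.node_labels)
    edges = dict(graph.edges)

    # 1) Augment with random pseudo-edges between currently unconnected pairs
    #    (assumption: they carry the NO_EDGE label as their ground truth).
    candidates = [(u, v) for u in range(n) for v in range(u + 1, n)
                  if (u, v) not in edges]
    for pair in rng.sample(candidates, min(n_pseudo, len(candidates))):
        edges[pair] = NO_EDGE

    # 2) Mask a random subset of node and edge labels, remembering originals.
    targets = {}
    nodes = list(graph.node_labels)
    for i in range(n):
        if rng.random() < mask_rate:
            targets[("node", i)] = nodes[i]
            nodes[i] = MASK
    for pair in list(edges):
        if rng.random() < mask_rate:
            targets[("edge", pair)] = edges[pair]
            edges[pair] = MASK

    return Graph(nodes, edges), targets
```

For example, `corrupt(Graph(["C", "C", "O"], {(0, 1): "single", (1, 2): "single"}), n_pseudo=1, mask_rate=0.0)` adds the pseudo-edge `(0, 2)` labeled `none` and masks nothing. Under this assumption, a model that predicts `none` at a masked edge effectively deletes it. A model that predicts a bond label at a masked pseudo-edge effectively adds one. That is one way reconstruction could change graph structure. The encoder and prediction heads are omitted.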
