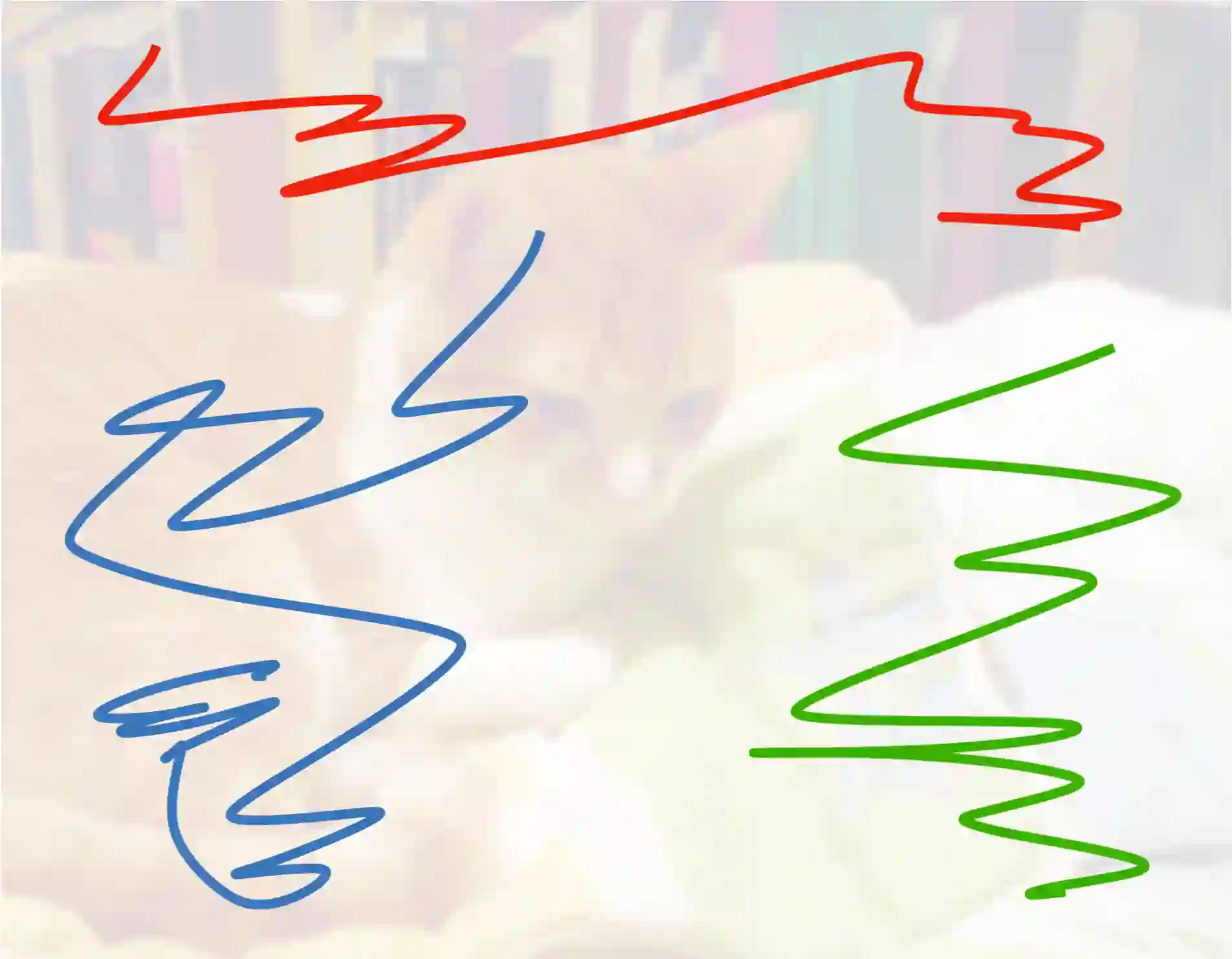

Computer vision tasks such as object detection and semantic/instance segmentation rely on painstaking annotation of large training datasets. In this paper, we propose LocTex, which takes advantage of low-cost localized textual annotations (i.e., captions and synchronized mouse-over gestures) to reduce the annotation effort. We introduce a contrastive pre-training framework between images and captions, and propose supervising the cross-modal attention map with rendered mouse traces to provide coarse localization signals. Our learned visual features capture rich semantics (from free-form captions) and accurate localization (from mouse traces), and they transfer effectively to various downstream vision tasks. Compared with ImageNet supervised pre-training, LocTex can reduce the size of the pre-training dataset by 10× or the target dataset by 2× while achieving comparable or even improved performance on COCO instance segmentation. When provided with the same amount of annotations, LocTex achieves around 4% higher accuracy than the previous state-of-the-art "vision+language" pre-training approach on PASCAL VOC image classification.
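The abstract names two training signals. One is a contrastive objective between images and captions. The other supervises the cross-modal attention map with rendered mouse traces. The sketch below illustrates both in plain Python, and it rests on assumptions the abstract does not state:

- the contrastive part is a symmetric InfoNCE loss over a batch;
- trace points are rendered onto a grid as normalized Gaussians;
- attention supervision is a KL divergence from the rendered trace to the attention map.

All function names and the `temperature`, `sigma`, and `lam` parameters are hypothetical illustrations. They are not LocTex's actual implementation.

```python
import math


def l2_normalize(v):
    """Scale a vector to unit length (a zero vector is returned unchanged)."""
    norm = math.sqrt(sum(x * x for x in v))
    return [x / norm for x in v] if norm > 0 else list(v)


def info_nce(image_embs, text_embs, temperature=0.1):
    """Assumed symmetric InfoNCE: image i and caption i form the positive pair,
    and the other captions/images in the batch act as negatives."""
    imgs = [l2_normalize(v) for v in image_embs]
    txts = [l2_normalize(v) for v in text_embs]
    n = len(imgs)
    # Cosine-similarity logits divided by the (hypothetical) temperature.
    logits = [[sum(a * b for a, b in zip(imgs[i], txts[j])) / temperature
               for j in range(n)] for i in range(n)]

    def cross_entropy(rows):
        # Mean of -log softmax(row)[i], with the positive on the diagonal.
        total = 0.0
        for i, row in enumerate(rows):
            m = max(row)  # subtract the max for numerical stability
            log_z = m + math.log(sum(math.exp(x - m) for x in row))
            total += log_z - row[i]
        return total / len(rows)

    transposed = [[logits[i][j] for i in range(n)] for j in range(n)]
    # Average the image-to-text and text-to-image directions.
    return 0.5 * (cross_entropy(logits) + cross_entropy(transposed))


def render_trace(points, height, width, sigma=1.0):
    """Hypothetical renderer: draw mouse-trace points (x, y in [0, 1]) onto a
    height x width grid as a sum of Gaussians, normalized to sum to 1
    (returns all zeros if there are no points)."""
    grid = [[0.0] * width for _ in range(height)]
    for x, y in points:
        cx, cy = x * (width - 1), y * (height - 1)
        for r in range(height):
            for c in range(width):
                d2 = (c - cx) ** 2 + (r - cy) ** 2
                grid[r][c] += math.exp(-d2 / (2 * sigma ** 2))
    total = sum(map(sum, grid))
    if total == 0:
        return grid
    return [[v / total for v in row] for row in grid]


def softmax_grid(logits):
    """Turn a grid of attention logits into a distribution over grid cells."""
    m = max(x for row in logits for x in row)
    exps = [[math.exp(x - m) for x in row] for row in logits]
    z = sum(map(sum, exps))
    return [[e / z for e in row] for row in exps]


def attention_supervision_loss(attn, target, eps=1e-8):
    """Assumed KL(target || attn) between a rendered trace map and an attention
    map, both grids summing to 1. Cells where the target is 0 add nothing."""
    loss = 0.0
    for a_row, t_row in zip(attn, target):
        for a, t in zip(a_row, t_row):
            if t > 0:
                loss += t * math.log(t / (a + eps))
    return loss


def combined_loss(image_embs, text_embs, attn_maps, trace_maps, lam=1.0):
    """Hypothetical total objective: contrastive term plus lam times the mean
    attention-supervision term over the (attention, trace) pairs."""
    loss = info_nce(image_embs, text_embs)
    if attn_maps:
        loc = sum(attention_supervision_loss(a, t)
                  for a, t in zip(attn_maps, trace_maps)) / len(attn_maps)
        loss += lam * loc
    return loss


if __name__ == "__main__":
    images = [[1.0, 0.0], [0.0, 1.0]]
    captions = [[0.9, 0.1], [0.1, 0.9]]
    trace = render_trace([(0.2, 0.3), (0.4, 0.3)], height=4, width=4)
    attn = softmax_grid([[0.0] * 4 for _ in range(4)])  # uniform attention
    print(combined_loss(images, captions, [attn], [trace], lam=0.5))
```

In a real model, the embeddings and attention maps would come from the image and text encoders, and the losses would be computed on batched tensors. The pure-Python loops here only keep the example self-contained.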