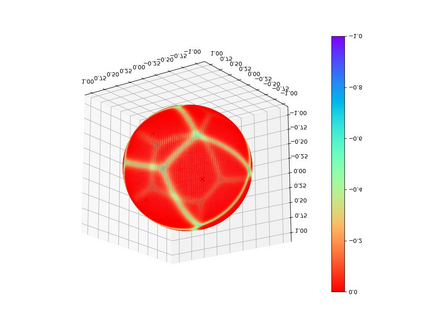

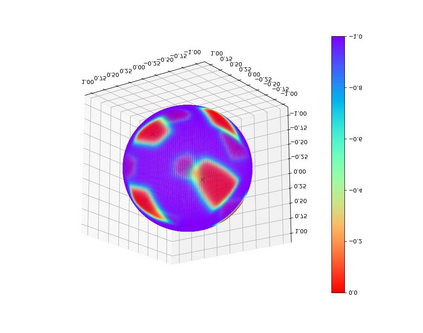

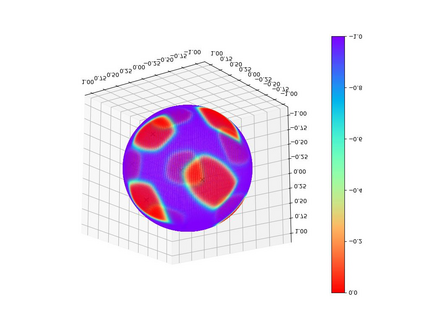

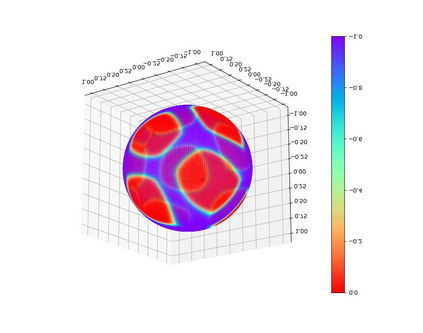

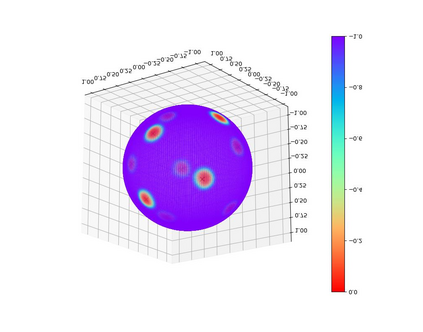

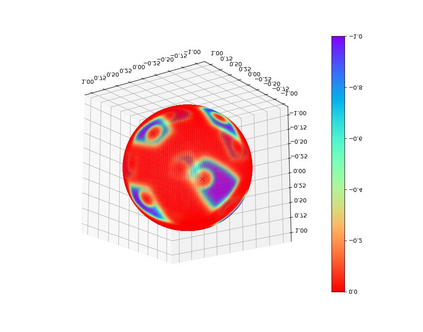

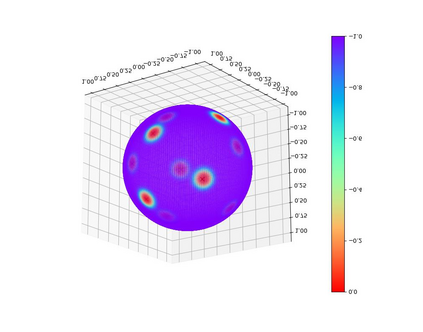

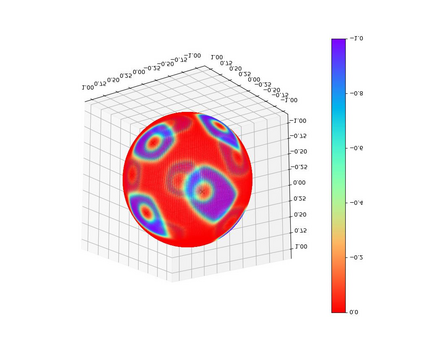

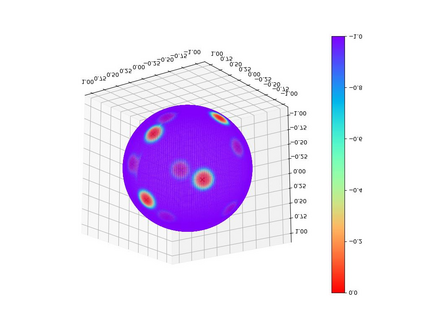

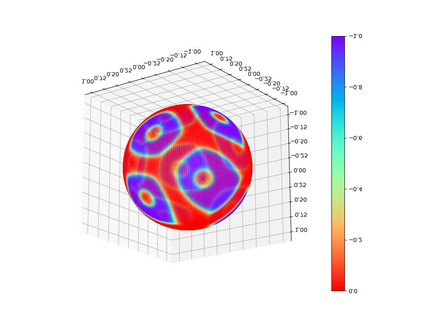

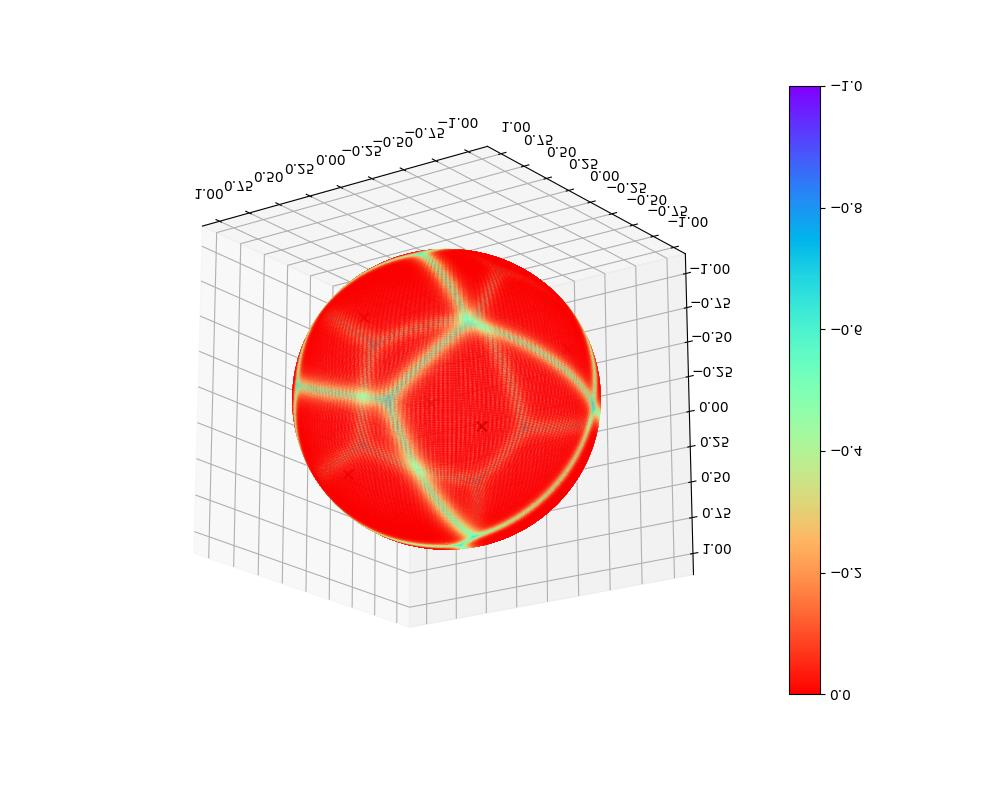

Existing classification-based face recognition methods have made remarkable progress by introducing a large margin on the hypersphere manifold to learn discriminative facial representations. However, they ignore the feature distribution, and a poor feature distribution can wipe out the performance gain brought by the margin scheme. Recent studies focus on the unbalanced inter-class distribution and form equidistributed feature representations by penalizing the angle between each identity and its nearest neighbor. But the problem goes beyond that: we also observe that the intra-class distribution is anisotropic. In this paper, we propose a "gradient-enhancing term" that concentrates on the distribution characteristics within each class. This method, named IntraLoss, explicitly enhances the gradient in the anisotropic region so that the intra-class distribution keeps shrinking, yielding an isotropic, more compact intra-class distribution and a larger margin between identities. Experimental results on LFW, YTF and CFP-FP show that our method outperforms state-of-the-art methods through gradient enhancement, demonstrating its superiority. In addition, our method has an intuitive geometric interpretation and can easily be combined with existing methods to address these previously ignored problems.
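The abstract does not give the IntraLoss formula, so the sketch below does not reproduce it. It only illustrates one plausible way to quantify the "anisotropy of intra-class distribution" described above. It assumes the L2-normalized embeddings of a single identity and compares the largest variance in the tangent plane at the class center with the average tangent-plane variance. The function name `intra_class_anisotropy` and this eigenvalue-ratio metric are hypothetical choices for illustration, not taken from the paper.

```python
import numpy as np


def intra_class_anisotropy(features: np.ndarray) -> float:
    """Hypothetical anisotropy score for ONE identity's embeddings.

    features: (n, d) array, assuming n > d.
    Returns the largest tangent-plane variance divided by the mean
    tangent-plane variance: about 1.0 for an isotropic cluster, and larger
    when the cluster is stretched along a few directions.
    """
    # Project embeddings onto the unit hypersphere, as margin-based losses do.
    x = features / np.linalg.norm(features, axis=1, keepdims=True)
    # Class center direction: normalized mean of the normalized embeddings.
    c = x.mean(axis=0)
    c /= np.linalg.norm(c)
    # Deviations in the tangent plane at c (radial component removed).
    dev = x - np.outer(x @ c, c)
    # Sample covariance of the deviations; c is an eigenvector with eigenvalue ~0.
    cov = np.cov(dev, rowvar=False)
    eig = np.linalg.eigh(cov)[0]  # eigenvalues in ascending order
    tangent = eig[1:]  # drop the ~0 eigenvalue along the center direction
    return float(tangent[-1] / tangent.mean())


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    d, n = 8, 2000
    center = np.zeros(d)
    center[0] = 1.0
    # Isotropic cluster: equal noise in every direction.
    iso = center + 0.1 * rng.standard_normal((n, d))
    # Anisotropic cluster: much larger spread along one tangent direction.
    scales = np.full(d, 0.05)
    scales[1] = 0.4
    aniso = center + scales * rng.standard_normal((n, d))
    print(intra_class_anisotropy(iso), intra_class_anisotropy(aniso))
```

A score near 1 indicates the roughly isotropic intra-class distribution the method aims for. A large score flags an identity whose samples spread mostly along a few directions on the hypersphere.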
