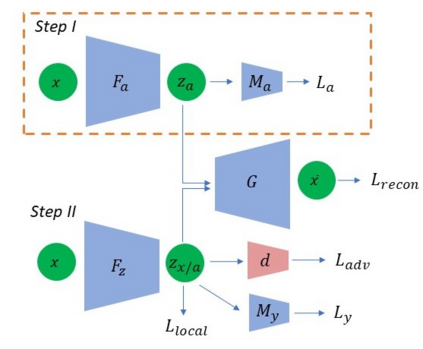

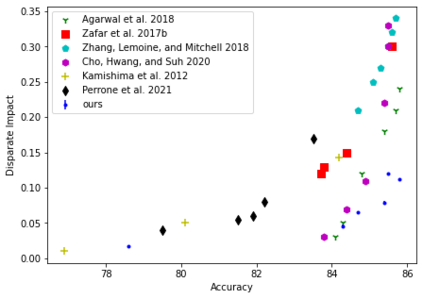

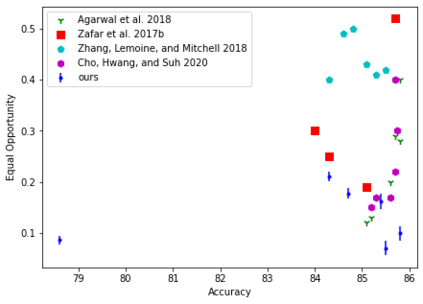

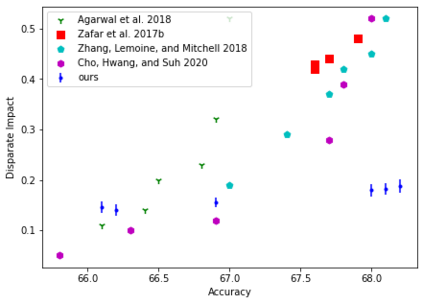

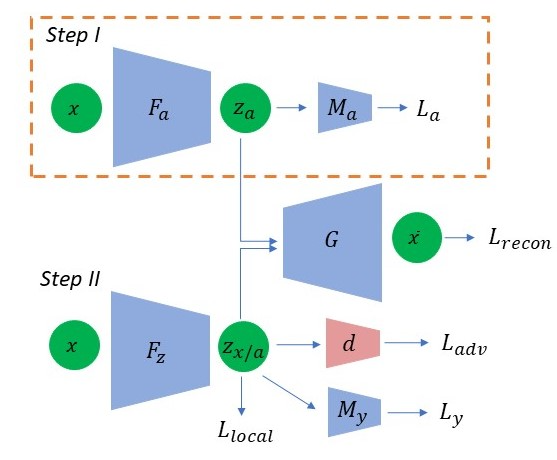

We study the problem of performing classification in a manner that is fair with respect to sensitive attributes such as race and gender. We approach this problem through the lens of disentangled and locally fair representations. We learn a locally fair representation: under the learned representation, the neighborhood of each sample is balanced in terms of the sensitive attribute. For instance, when a decision is made to hire an individual, we ensure that the $K$ most similar hired individuals are racially balanced. Crucially, we ensure that similar individuals are found based on attributes not correlated with their race. To this end, we disentangle the embedding space into two representations: the first is correlated with the sensitive attribute, while the second is not. We apply our local fairness objective only to the second, uncorrelated representation. Through a set of experiments, we demonstrate that both disentanglement and local fairness are necessary for obtaining fair and accurate representations. We evaluate our method in real-world settings such as predicting income and re-incarceration rate, and demonstrate its advantage.
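The notion of a balanced neighborhood can be illustrated with a small diagnostic. The sketch below is not the training objective of the method; it only measures, for a given embedding, what fraction of each sample's $K$ nearest neighbors belongs to one sensitive group. The function name `neighborhood_balance`, the use of Euclidean distance, and the binary encoding of the sensitive attribute are all assumptions made for illustration.

```python
import numpy as np


def neighborhood_balance(z, s, k):
    """Fraction of each sample's k nearest neighbors with sensitive value 1.

    Hypothetical helper for illustration only.
    z: (n, d) array of embeddings (assumed: the representation that is
       meant to be uncorrelated with the sensitive attribute).
    s: (n,) array of binary sensitive-attribute labels (0 or 1).
    k: number of neighbors, with k < n.
    """
    z = np.asarray(z, dtype=float)
    s = np.asarray(s)
    # Pairwise squared Euclidean distances via broadcasting, shape (n, n).
    diff = z[:, None, :] - z[None, :, :]
    d2 = (diff ** 2).sum(axis=-1)
    # A sample is not its own neighbor.
    np.fill_diagonal(d2, np.inf)
    # Indices of the k closest other samples for every row.
    idx = np.argsort(d2, axis=1, kind="stable")[:, :k]
    # Share of those neighbors that belong to group 1.
    return s[idx].mean(axis=1)


if __name__ == "__main__":
    # Toy embedding in which the two groups form separate clusters,
    # so every neighborhood is maximally unbalanced.
    z = np.array([[0.0], [0.1], [0.2], [10.0], [10.1], [10.2]])
    s = np.array([0, 0, 0, 1, 1, 1])
    print(neighborhood_balance(z, s, k=2))
```

Under this diagnostic, values close to the overall share of group 1 in the data would indicate locally balanced neighborhoods, while values near 0 or 1 indicate that neighbors are drawn almost entirely from a single group. Whether balance should be judged against the overall share or against an even split is a design choice that this sketch leaves open.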
