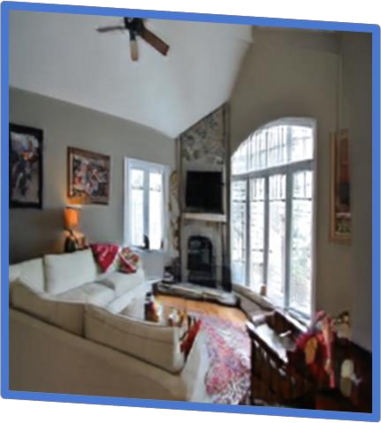

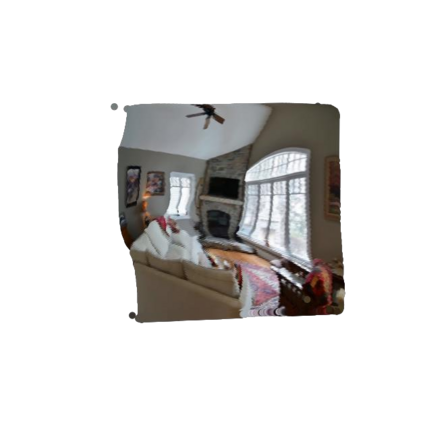

Recent advancements in differentiable rendering and 3D reasoning have driven exciting results in novel view synthesis from a single image. Despite realistic results, existing methods are limited to relatively small view changes. To synthesize immersive scenes, models must also be able to extrapolate. We present an approach that fuses 3D reasoning with autoregressive modeling to outpaint large view changes in a 3D-consistent manner, enabling scene synthesis. We demonstrate considerable improvement in single-image, large-angle view synthesis compared to a variety of methods and possible variants across simulated and real datasets. In addition, we show increased 3D consistency compared to alternative accumulation methods. Project website: https://crockwell.github.io/pixelsynth/
