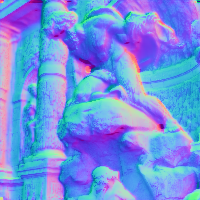

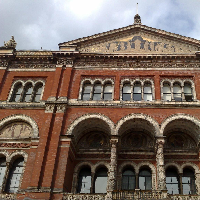

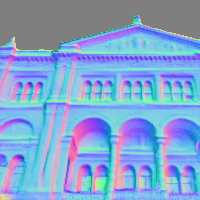

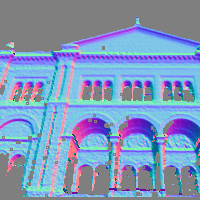

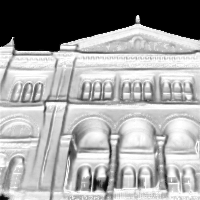

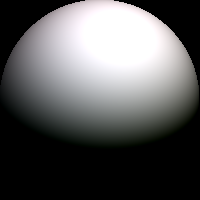

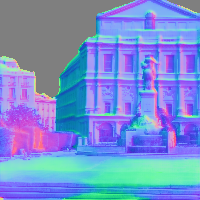

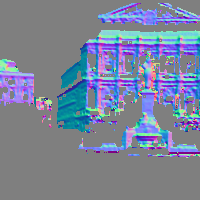

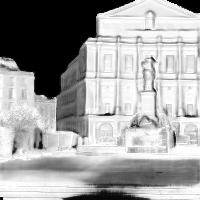

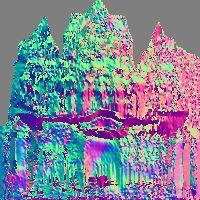

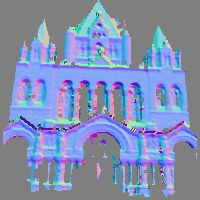

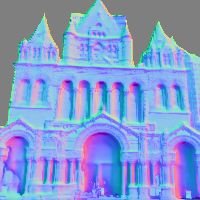

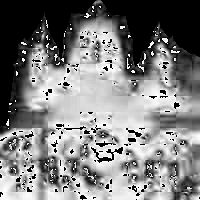

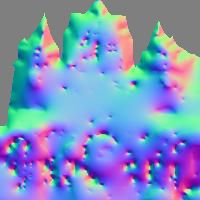

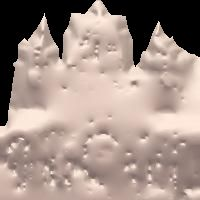

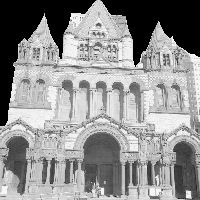

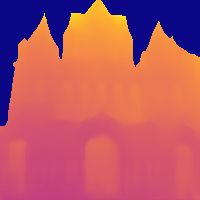

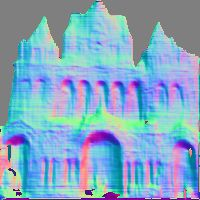

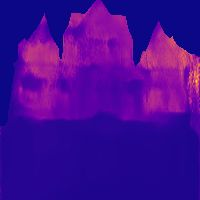

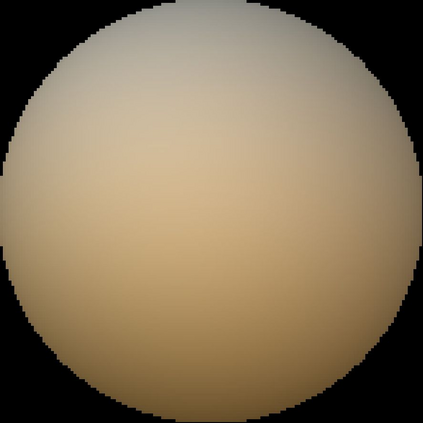

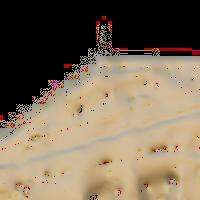

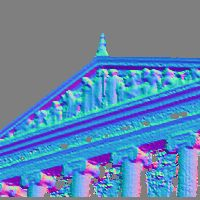

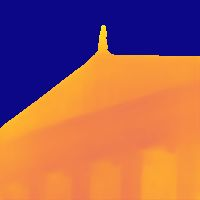

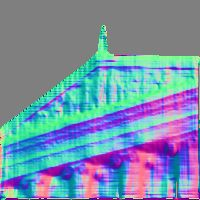

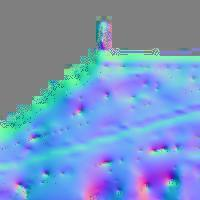

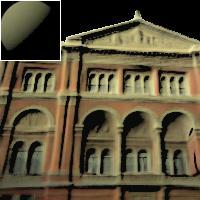

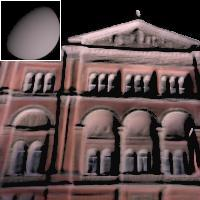

We show how to train a fully convolutional neural network to perform inverse rendering from a single, uncontrolled image. The network takes an RGB image as input and regresses albedo and normal maps, from which we compute lighting coefficients. Our network is trained on large uncontrolled image collections without ground truth. By incorporating a differentiable renderer, the network can learn from self-supervision. Since the problem is ill-posed, we introduce additional supervision: 1. we learn a statistical natural illumination prior; 2. our key insight is to perform offline multiview stereo (MVS) on images containing rich illumination variation. From the MVS pose and depth maps, we can cross-project between overlapping views, so that Siamese training can be used to ensure consistent estimation of photometric invariants. MVS depth also provides direct, coarse supervision for normal map estimation. We believe this is the first attempt to use MVS supervision for learning inverse rendering.
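Two steps in the abstract can be made concrete with a small NumPy sketch: computing lighting coefficients from predicted albedo and normals, and cross-projecting pixels between overlapping views using MVS depth and pose. The abstract does not specify the lighting model, so the sketch *assumes* a Lambertian surface lit by second-order (9-term) spherical-harmonic illumination, with lighting solved per colour channel by linear least squares. It also assumes both views share one intrinsic matrix `K` and that `R`, `t` map view-1 camera coordinates to view-2 camera coordinates. The function names `sh_basis`, `estimate_lighting`, `render` and `cross_project` are hypothetical illustrations, not the authors' code.

```python
import numpy as np


def sh_basis(normals):
    """Order-2 spherical-harmonic basis at unit normals, (P, 3) -> (P, 9).

    Assumption: constant SH normalisation factors are omitted; they are
    absorbed into the lighting coefficients, which spans the same space.
    """
    x, y, z = normals[:, 0], normals[:, 1], normals[:, 2]
    return np.stack([
        np.ones_like(x), x, y, z,
        x * y, x * z, y * z,
        x * x - y * y, 3.0 * z * z - 1.0,
    ], axis=1)


def render(albedo, normals, coeffs):
    """Lambertian shading under SH lighting: (P, 3) albedo times (P, 3) shading."""
    return albedo * (sh_basis(normals) @ coeffs)


def estimate_lighting(image, albedo, normals):
    """Least-squares lighting coefficients, one 9-vector per colour channel.

    image, albedo, normals: (P, 3) arrays. Returns coeffs of shape (9, 3).
    """
    basis = sh_basis(normals)
    coeffs = np.empty((9, 3))
    for c in range(3):
        # image_c = albedo_c * (basis @ L_c): scale each basis row by albedo.
        design = basis * albedo[:, c:c + 1]
        coeffs[:, c], *_ = np.linalg.lstsq(design, image[:, c], rcond=None)
    return coeffs


def cross_project(depth, K, R, t):
    """Map every pixel of view 1 into view 2 using view-1 depth.

    depth: (H, W) depth for view 1; K: (3, 3) intrinsics (assumed shared);
    R, t: rotation (3, 3) and translation (3,) from camera 1 to camera 2.
    Returns (H, W, 2) pixel coordinates in view 2 and an (H, W) mask of
    points lying in front of camera 2. Occlusion is not handled here.
    """
    H, W = depth.shape
    u, v = np.meshgrid(np.arange(W), np.arange(H))
    pix = np.stack([u, v, np.ones_like(u)], axis=-1).reshape(-1, 3).astype(float)
    rays = pix @ np.linalg.inv(K).T          # back-project to rays with z = 1
    pts1 = rays * depth.reshape(-1, 1)       # 3D points in camera-1 frame
    pts2 = pts1 @ R.T + t                    # same points in camera-2 frame
    proj = pts2 @ K.T                        # homogeneous pixels in view 2
    z = proj[:, 2]
    valid = z > 1e-8
    uv2 = proj[:, :2] / np.where(valid, z, 1.0)[:, None]
    return uv2.reshape(H, W, 2), valid.reshape(H, W)
```

For Siamese consistency, one could sample view 2's predicted albedo at the returned coordinates (for example with bilinear interpolation) and penalise its difference from view 1's albedo wherever the mask is true; a full implementation would also compare against view 2's MVS depth to reject occluded pixels.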
