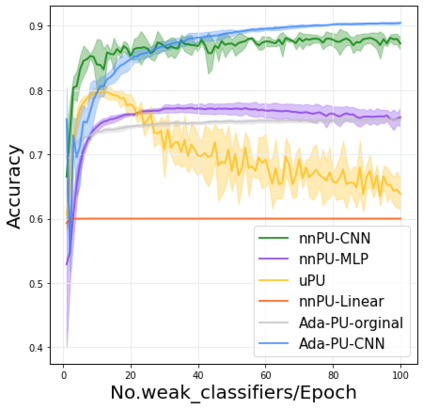

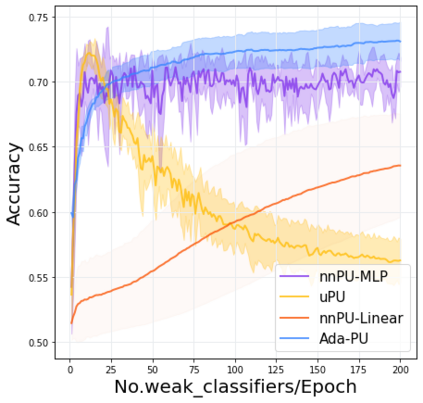

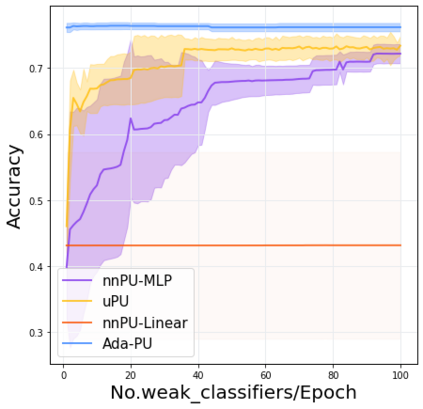

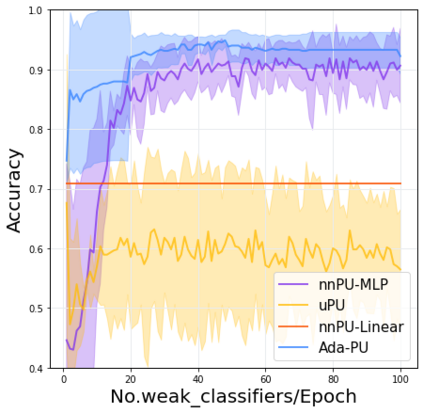

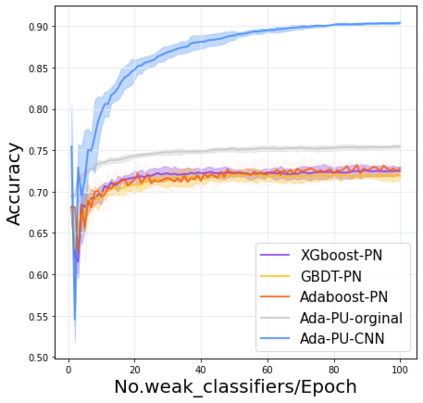

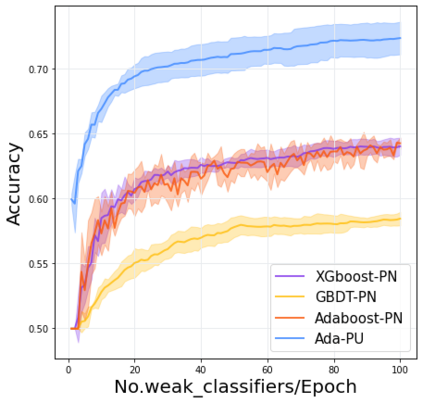

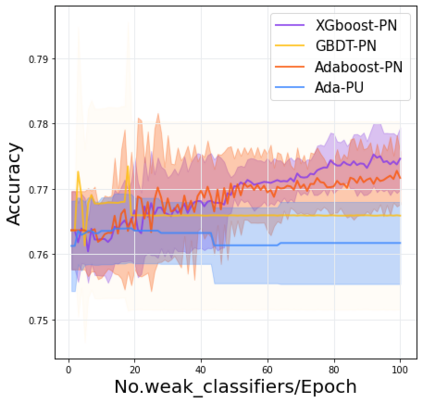

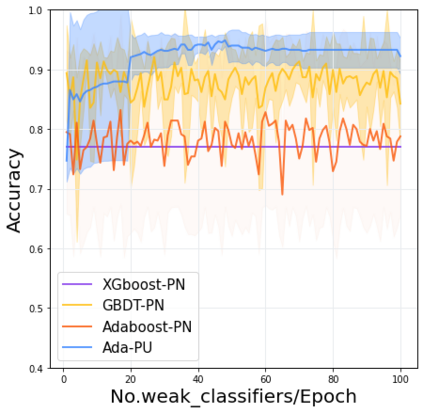

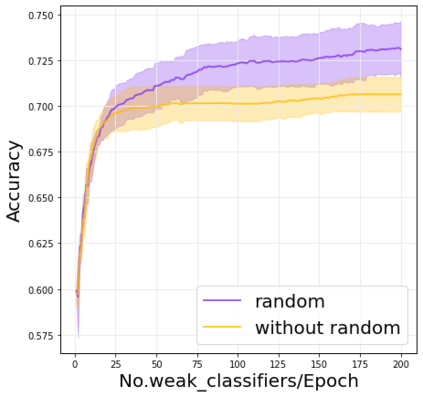

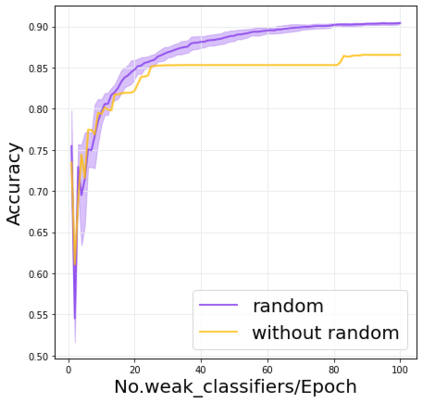

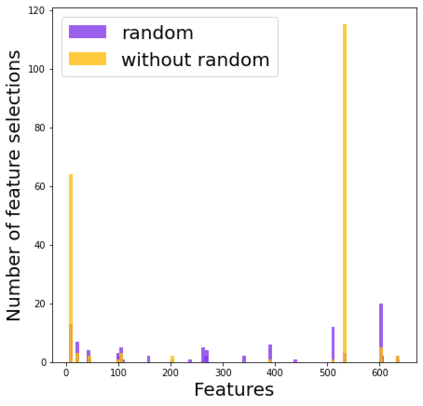

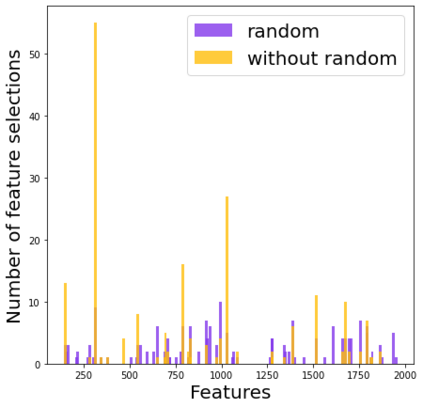

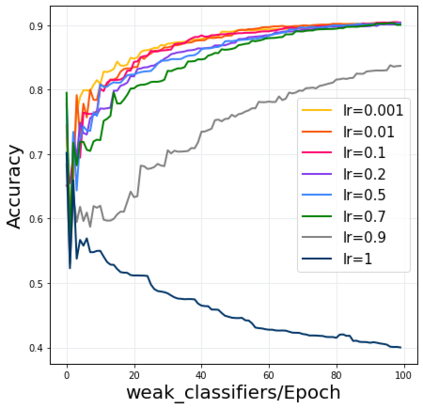

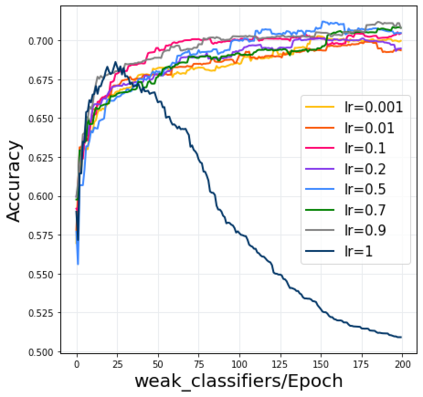

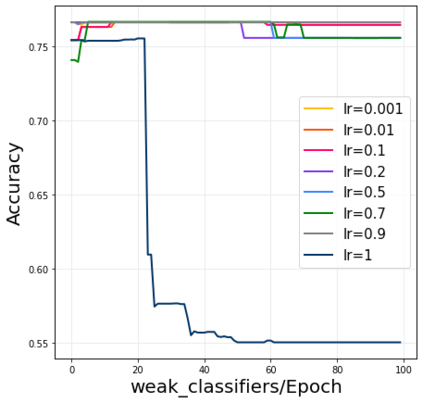

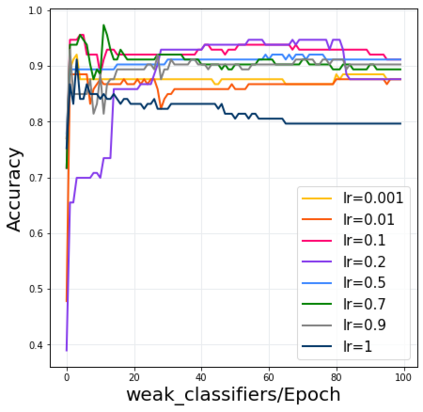

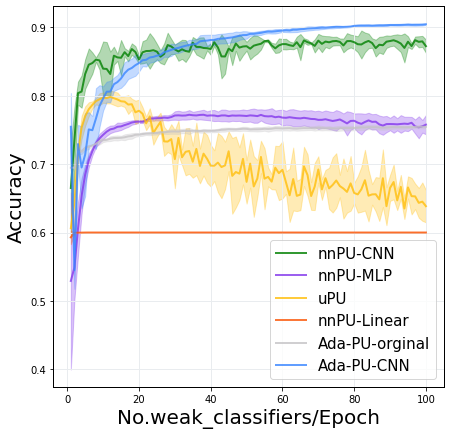

Positive-unlabeled (PU) learning addresses binary classification problems in which only positive (P) and unlabeled (U) data are available. Many PU methods based on linear models and neural networks have been proposed; however, how theoretically sound boosting-style algorithms could work with P and U data remains largely unstudied. Since in some scenarios neural networks cannot perform as well as boosting algorithms even with fully supervised data, we propose Ada-PU, a novel boosting algorithm for PU learning, and compare it against neural networks. Ada-PU follows the general procedure of AdaBoost while maintaining and updating two different distributions over the P data. After a weak classifier is learned on the newly updated distribution, its combining weight in the final ensemble is estimated using only PU data. We demonstrate that, with a smaller set of base classifiers, the proposed method is guaranteed to retain the theoretical properties of boosting algorithms. In experiments, we show that Ada-PU outperforms neural networks on benchmark PU datasets. We also study UNSW-NB15, a real-world cybersecurity dataset, and demonstrate that Ada-PU achieves superior performance in detecting malicious activities.
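The abstract does not spell out how a combining weight can be estimated without negative labels. One standard tool for this is the unbiased PU risk estimator, which assumes the class prior π = P(y = +1) is known and that the labeled positives are drawn from the same distribution as the positives hidden in U. The sketch below is an illustrative assumption, not the authors' Ada-PU update: it adapts that estimator to boosting-style sample weights, using two hypothetical distributions `d_pos` and `d_neg` over the P sample (one for P treated as positive, one for P treated as negative) plus `d_u` over U, and then plugs the estimated error into AdaBoost's usual formula α = ½ ln((1 − ε)/ε). All function and parameter names are hypothetical.

```python
import math
from typing import Sequence


def pu_weighted_error(
    h_p: Sequence[int],      # weak-classifier outputs (+1/-1) on the P sample
    h_u: Sequence[int],      # weak-classifier outputs (+1/-1) on the U sample
    d_pos: Sequence[float],  # hypothetical distribution over P, P treated as positive
    d_neg: Sequence[float],  # hypothetical distribution over P, P treated as negative
    d_u: Sequence[float],    # distribution over U, U treated as negative
    prior: float,            # assumed known class prior pi = P(y = +1)
) -> float:
    """Weighted analogue of the unbiased PU estimate of the 0-1 error:
    pi * err_P(+1) + err_U(-1) - pi * err_P(-1).
    The subtracted term removes the positives that are hidden in U."""
    assert len(h_p) == len(d_pos) == len(d_neg) and len(h_u) == len(d_u)
    pos_term = sum(d for d, y in zip(d_pos, h_p) if y != 1)
    unl_term = sum(d for d, y in zip(d_u, h_u) if y != -1)
    neg_from_p = sum(d for d, y in zip(d_neg, h_p) if y != -1)
    return prior * pos_term + unl_term - prior * neg_from_p


def combining_weight(err: float, eps: float = 1e-6) -> float:
    """AdaBoost combining weight. The PU estimate can fall outside (0, 1)
    on finite samples, so it is clipped before taking the log."""
    err = min(max(err, eps), 1.0 - eps)
    return 0.5 * math.log((1.0 - err) / err)


if __name__ == "__main__":
    # Toy example: 4 positives (3 classified correctly), 8 unlabeled points
    # (3 predicted positive), uniform weights, pi = 0.5.
    err = pu_weighted_error(
        h_p=[1, 1, 1, -1],
        h_u=[1, 1, 1, -1, -1, -1, -1, -1],
        d_pos=[0.25] * 4,
        d_neg=[0.25] * 4,
        d_u=[0.125] * 8,
        prior=0.5,
    )
    print(err)  # → 0.125
    print(combining_weight(err))
```

In the toy example the estimate matches the true error: a 25% false-negative rate on positives and, from the U predictions, a 0% false-positive rate give 0.5 × 0.25 + 0.5 × 0 = 0.125, so no negative labels are needed to weight the weak classifier.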

翻译:当只有正(P)和无标(U)数据时,阳性(PU)学习涉及二进制分类问题。许多基于线性模型和神经网络的PU方法已经提出;然而,对于理论性加速式算法如何与P和U数据相配合,仍然缺乏研究。考虑到在某些情况下,神经网络即使使用完全监督的数据也不能像提法一样好于提法,我们建议为PU学习采用新的推算法:Ada-PU,它比照神经网络。Ada-PU遵循AdaBoost的一般程序,同时两种不同的P数据分布得到维护和更新。在新更新的分布学得弱的分类器后,对最终共性的相应综合权重仅使用PU数据估算。我们证明,如果使用较小的一组基础分类器,拟议的方法就能保证保持提法的理论性能。在实验中,我们显示Ada-PU在基准的PU数据集上将神经网络网络化。我们还研究了一种真实的检测和高级的网络性能。