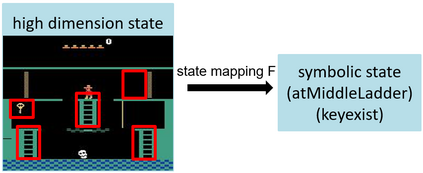

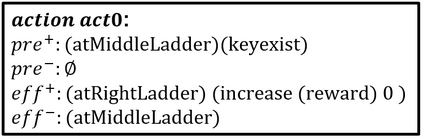

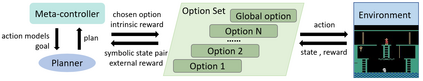

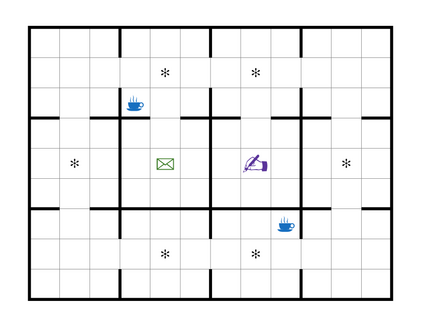

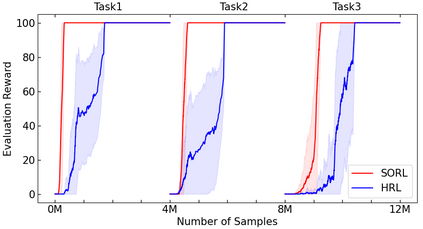

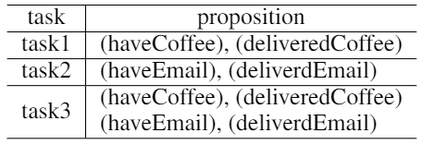

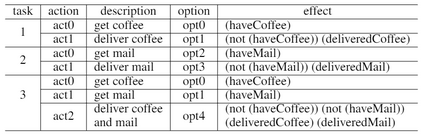

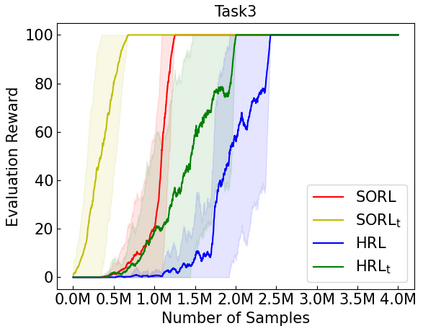

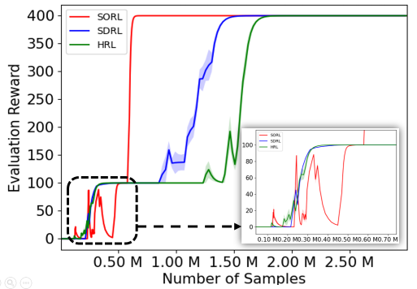

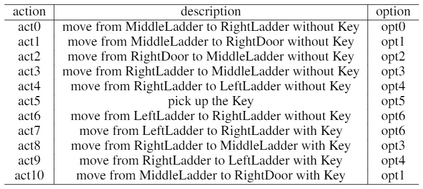

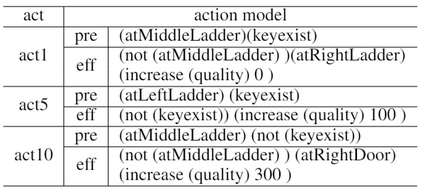

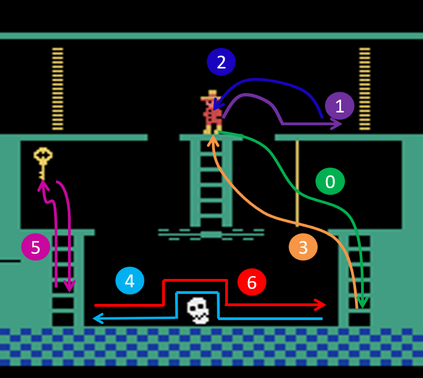

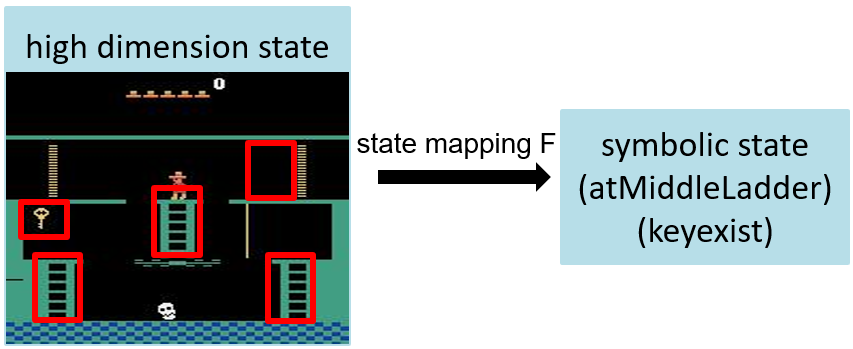

Despite of achieving great success in real-world applications, Deep Reinforcement Learning (DRL) is still suffering from three critical issues, i.e., data efficiency, lack of the interpretability and transferability. Recent research shows that embedding symbolic knowledge into DRL is promising in addressing those challenges. Inspired by this, we introduce a novel deep reinforcement learning framework with symbolic options. Our framework features a loop training procedure, which enables guiding the improvement of policy by planning with planning models (including action models and hierarchical task network models) and symbolic options learned from interactive trajectories automatically. The learned symbolic options alleviate the dense requirement of expert domain knowledge and provide inherent interpretability of policies. Moreover, the transferability and data efficiency can be further improved by planning with the symbolic planning models. To validate the effectiveness of our framework, we conduct experiments on two domains, Montezuma's Revenge and Office World, respectively. The results demonstrate the comparable performance, improved data efficiency, interpretability and transferability.

翻译:暂无翻译