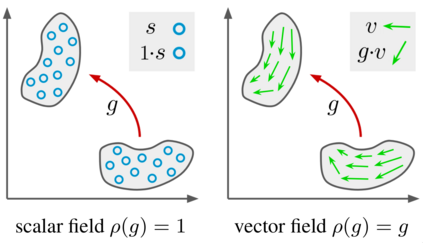

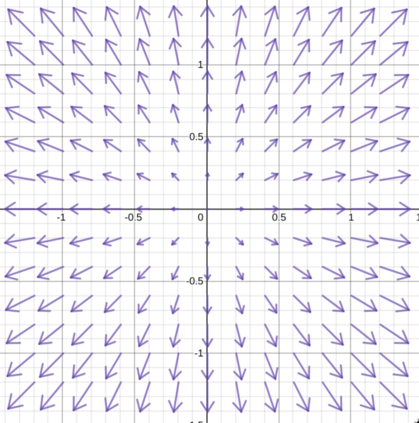

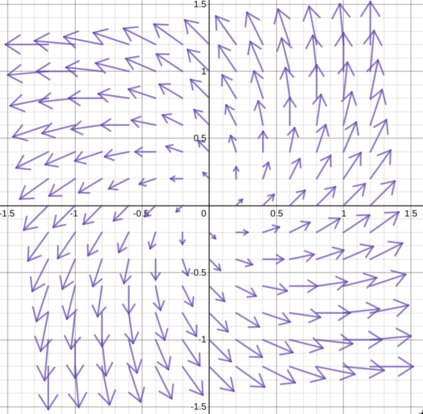

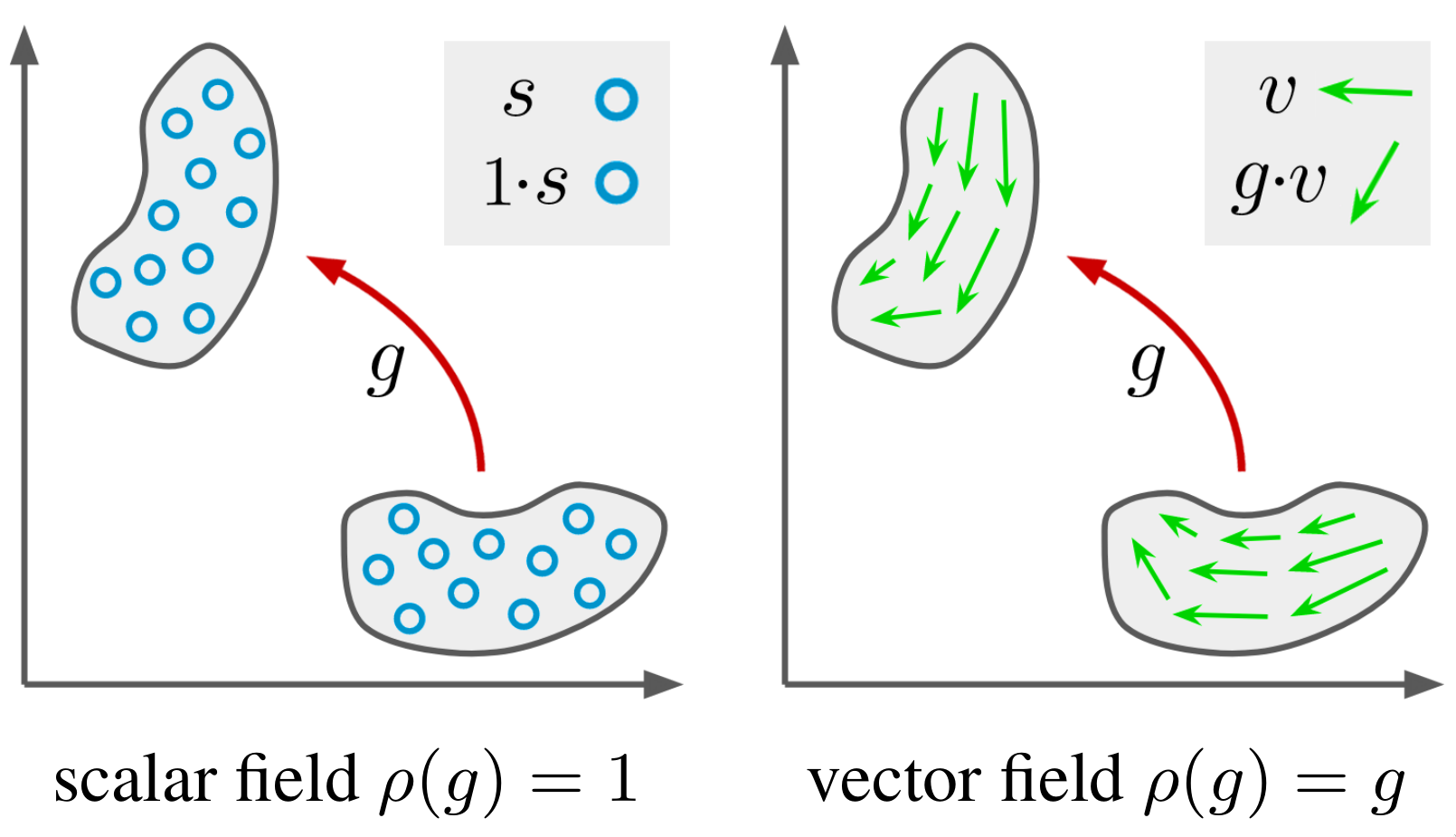

Recent work in equivariant deep learning bears strong similarities to physics. Fields over a base space are fundamental entities in both subjects, as are equivariant maps between these fields. In deep learning, however, these maps are usually defined by convolutions with a kernel, whereas in physics they are partial differential operators (PDOs). Developing the theory of equivariant PDOs in the context of deep learning could bring these subjects even closer together and lead to a stronger flow of ideas. In this work, we derive a $G$-steerability constraint that completely characterizes when a PDO between feature vector fields is equivariant, for arbitrary symmetry groups $G$. We then fully solve this constraint for several important groups. We use our solutions as equivariant drop-in replacements for convolutional layers and benchmark them in that role. Finally, we develop a framework for equivariant maps based on Schwartz distributions that unifies classical convolutions and differential operators and gives insight into the relation between the two.
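As a minimal illustration of why equivariance constrains which PDOs are allowed (not the paper's steerability solution or its discretization scheme), the sketch below discretizes two PDOs as finite-difference stencils, so that applying each one is an ordinary convolution. This matches the distributional view in which a PDO acts as convolution with derivatives of the Dirac delta. It then checks numerically which operator commutes with 90-degree rotations of a scalar field. The helper names `conv2d_same` and `commutes_with_rot90`, the five-point Laplacian stencil, and the restriction to quarter-turn rotations on a square grid are illustrative assumptions.

```python
import numpy as np


def conv2d_same(image, kernel):
    """Cross-correlate a 2D array with a small odd-sized kernel, zero-padded so the output keeps the input shape."""
    kh, kw = kernel.shape
    ph, pw = kh // 2, kw // 2
    padded = np.pad(image, ((ph, ph), (pw, pw)))  # constant (zero) padding by default
    h, w = image.shape
    out = np.zeros((h, w), dtype=float)
    for i in range(kh):
        for j in range(kw):
            # Accumulate each shifted copy of the input weighted by its stencil coefficient.
            out += kernel[i, j] * padded[i:i + h, j:j + w]
    return out


# Five-point finite-difference stencil for the Laplacian d^2/dx^2 + d^2/dy^2 (grid spacing 1).
LAPLACIAN = np.array([[0.0, 1.0, 0.0],
                      [1.0, -4.0, 1.0],
                      [0.0, 1.0, 0.0]])

# Central-difference stencil for d/dx along the column axis; it is not invariant under rotation.
DX = np.array([[0.0, 0.0, 0.0],
               [-0.5, 0.0, 0.5],
               [0.0, 0.0, 0.0]])


def commutes_with_rot90(kernel, image):
    """Check whether applying the discretized operator commutes with a 90-degree rotation of the input."""
    rotate_then_apply = conv2d_same(np.rot90(image), kernel)
    apply_then_rotate = np.rot90(conv2d_same(image, kernel))
    return np.allclose(rotate_then_apply, apply_then_rotate)


rng = np.random.default_rng(0)
field = rng.standard_normal((16, 16))
print(commutes_with_rot90(LAPLACIAN, field))  # True: the Laplacian is rotation-equivariant
print(commutes_with_rot90(DX, field))         # False: d/dx alone is not
```

The Laplacian passes because its stencil is invariant under quarter turns, whereas $\partial/\partial x$ fails on its own. A map from scalar fields to vector fields such as the gradient can still be equivariant once the output vector components are rotated as well, which is the kind of case a steerability constraint between feature vector fields has to cover.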

翻译:近代相异深层学习的最近工作与物理学有很强的相似之处。 基础空间上的字段是这两个学科的基本实体, 以及这些领域之间的等式地图。 然而,在深层学习中, 这些地图通常由内核混在一起来定义, 而它们是物理方面的部分操作者。 在深层学习中发展等式 PDO 理论可以使这些科目更加接近,并导致更强有力的思想流动。 在这项工作中, 我们产生了一个$G$的可变性限制, 它完全代表了特性矢量场之间的 PDO 是等式的, 用于任意的对称组 $G。 我们随后完全解决了这个限制。 我们用我们的解决方案作为变相层的等式投影替换器, 并以此作用作为基准。 最后, 我们开发一个基于Schwartz分布的变异性地图框架, 使古典变异体和差异操作者相互融合, 并洞察两者之间的关系。