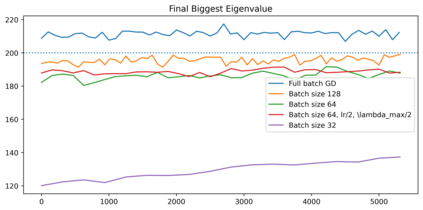

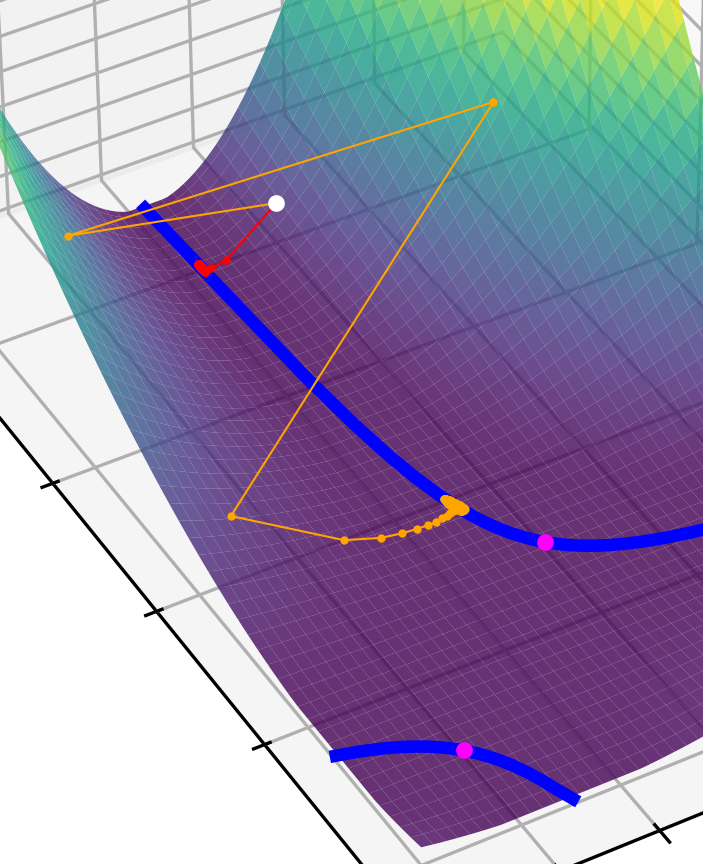

This article examines the implicit regularization effect of Stochastic Gradient Descent (SGD). We consider SGD without replacement, the variant typically used to optimize large-scale neural networks. We analyze this algorithm in a more realistic regime than is typical in theoretical work on SGD; for example, we allow the product of the learning rate and the Hessian to be $O(1)$. Our core theoretical result is that optimizing with SGD without replacement is locally equivalent to taking an additional step on a novel regularizer. This implies that the trajectory of SGD without replacement diverges from those of both noise-injected GD and SGD with replacement (in which batches are sampled i.i.d.). Indeed, the two SGD variants traverse flat regions of the loss landscape in distinct directions and at different speeds. In expectation, SGD without replacement may escape saddles significantly faster and exhibit smaller variance. Moreover, we find that SGD implicitly regularizes the trace of the noise covariance in the eigendirections of small and negative Hessian eigenvalues. On several vision tasks, this coincides with penalizing a weighted trace of the Fisher matrix and the Hessian, thus encouraging sparsity in the spectrum of the Hessian of the loss, in line with empirical observations from prior work. We also propose an explanation for why SGD, unlike GD, does not train at the edge of stability.
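
To make the distinction between the two sampling schemes concrete, the following is a minimal sketch rather than the authors' code. It assumes a toy least-squares loss, and the function names (`epoch_batches_without_replacement`, `epoch_batches_with_replacement`, `sgd_epoch`) are hypothetical. It also assumes that "with replacement" means each batch is drawn independently of the others, with no repeated index inside a single batch.

```python
import numpy as np

def epoch_batches_without_replacement(n, batch_size, rng):
    # One shuffle per epoch: every index appears exactly once,
    # so the batches within an epoch are statistically dependent.
    perm = rng.permutation(n)
    return [perm[i:i + batch_size] for i in range(0, n, batch_size)]

def epoch_batches_with_replacement(n, batch_size, rng):
    # Each batch is drawn independently (i.i.d. across steps), so an
    # index may recur in several batches or be skipped for the whole epoch.
    # Assumption: indices are distinct within a single batch.
    num_batches = -(-n // batch_size)  # ceiling division
    return [rng.choice(n, size=batch_size, replace=False)
            for _ in range(num_batches)]

def sgd_epoch(w, X, y, batches, lr):
    # Plain mini-batch SGD on the toy loss 0.5 * mean((X w - y)^2).
    for idx in batches:
        residual = X[idx] @ w - y[idx]
        grad = X[idx].T @ residual / len(idx)
        w = w - lr * grad
    return w

if __name__ == "__main__":
    rng = np.random.default_rng(0)
    n, d = 64, 5
    X = rng.normal(size=(n, d))
    y = X @ rng.normal(size=d)
    w0 = np.zeros(d)
    w_wo = sgd_epoch(w0, X, y, epoch_batches_without_replacement(n, 8, rng), lr=0.1)
    w_wr = sgd_epoch(w0, X, y, epoch_batches_with_replacement(n, 8, rng), lr=0.1)
    print("distance between the two iterates:", np.linalg.norm(w_wo - w_wr))
```

The only difference between the two runs is how the index batches are generated. In the without-replacement scheme each epoch is a single permutation of the dataset, and this dependence between successive batches is what the abstract contrasts with i.i.d. sampling.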
