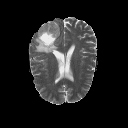

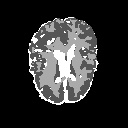

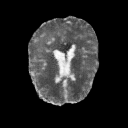

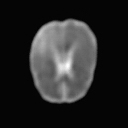

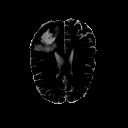

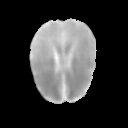

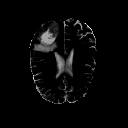

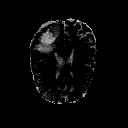

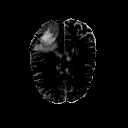

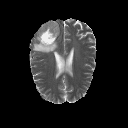

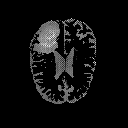

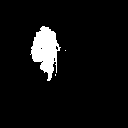

The goal of unsupervised anomaly segmentation (UAS) is to detect pixel-level anomalies that are unseen during training. It is a promising direction for the medical imaging community: for example, a model trained only on healthy data could be used to segment the lesions of rare diseases. Existing methods are mainly based on the Information Bottleneck principle. They model the distribution of normal anatomy by learning to compress healthy data onto a low-dimensional manifold and recover it, and then detect lesions as outliers from this learned distribution. However, this dimensionality reduction inevitably damages localization information, which is essential for pixel-level anomaly detection. In this paper, to alleviate this issue, we introduce the semantic space of healthy anatomy into the process of modeling the healthy-data distribution. More precisely, we view the pairing of segmentation and synthesis as a special autoencoder and propose a novel cycle translation framework that follows an 'image -> semantic -> image' journey. Experimental results on the BraTS and ISLES databases show that the proposed approach achieves significantly better performance than several prior methods and segments anomalies more accurately.
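To make the contrast between a bottleneck reconstruction and an 'image -> semantic -> image' cycle concrete, here is a toy sketch in NumPy. It is not the authors' implementation. The segmenter and synthesizer are hand-crafted, hypothetical stand-ins for learned networks: `segment` labels each pixel with the nearest of three made-up healthy tissue intensities (`HEALTHY_MEANS`), and `synthesize` renders each label with that intensity. The anomaly map is the absolute residual of the cycle. `bottleneck_anomaly_map` is an equally crude stand-in for a learned low-dimensional autoencoder: it compresses by block averaging and recovers by upsampling.

```python
import numpy as np

# Hypothetical healthy tissue intensities (toy values, not from the paper).
HEALTHY_MEANS = np.array([0.2, 0.5, 0.8])


def segment(image):
    """image -> semantic: label each pixel with the nearest healthy class.
    Hand-crafted stand-in for a segmentation network trained on healthy data."""
    dist = np.abs(image[..., None] - HEALTHY_MEANS)  # shape (H, W, K)
    return np.argmin(dist, axis=-1)


def synthesize(labels):
    """semantic -> image: render each label with its healthy intensity.
    Hand-crafted stand-in for a synthesis network trained on healthy data."""
    return HEALTHY_MEANS[labels]


def cycle_anomaly_map(image):
    """Residual of the image -> semantic -> image cycle; it is large where
    the healthy semantic space cannot explain a pixel."""
    return np.abs(image - synthesize(segment(image)))


def bottleneck_anomaly_map(image, factor=4):
    """Toy bottleneck baseline: compress by factor x factor block averaging,
    recover by nearest-neighbour upsampling, return the residual."""
    h, w = image.shape
    if h % factor or w % factor:
        raise ValueError("image sides must be divisible by factor")
    code = image.reshape(h // factor, factor, w // factor, factor).mean(axis=(1, 3))
    recon = np.repeat(np.repeat(code, factor, axis=0), factor, axis=1)
    return np.abs(image - recon)


# Toy "scan": two healthy tissues whose boundary cuts through 4x4 blocks,
# plus a small hyperintense lesion and a little Gaussian noise.
rng = np.random.default_rng(0)
image = np.full((16, 16), 0.8)
image[:, :6] = 0.2
lesion = np.zeros_like(image, dtype=bool)
lesion[9:11, 13:15] = True
image[lesion] = 1.0
image += rng.normal(0.0, 0.01, image.shape)

cycle = cycle_anomaly_map(image)
bottleneck = bottleneck_anomaly_map(image)
print("cycle:      lesion min %.2f, healthy max %.2f"
      % (cycle[lesion].min(), cycle[~lesion].max()))
print("bottleneck: lesion max %.2f, healthy max %.2f"
      % (bottleneck[lesion].max(), bottleneck[~lesion].max()))
```

In this toy, the block-averaging baseline blurs the healthy tissue boundary and scores it higher than the lesion, while the cycle residual stays near the noise level on healthy pixels and separates the lesion cleanly, because the cycle never discards spatial resolution. The sketch only illustrates the residual mechanism: a purely pixel-wise segmenter like this one would miss a lesion whose intensity happens to match another healthy class.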

翻译:未经监督的异常分解(UAS)的目标是检测培训期间看不见的像素级异常现象,这是医学成像界中一个很有希望的领域,例如,我们可以使用只用健康数据培训的模型来分解稀有疾病的损伤。现有方法主要基于信息瓶颈,其根本原则是通过学习压缩和用低维元恢复健康数据,然后发现健康数据,然后作为这一已学分布的外源。然而,这种维度的减少不可避免地会损害本地化信息,而这种信息对于像素级异常检测特别重要。在本文中,为了缓解这一问题,我们在健康数据分布模型的模型过程中引入健康解剖学的语义空间。更确切地说,我们把分解和合成的结合作为特殊的自动解剖器进行模拟,并提议一个新型循环翻译框架,其旅程为“image->sematic-image ” 。 BRATS和ISLES数据库的实验结果显示,与以前的一些方法和部分相比,拟议方法的异常性表现更加精确。