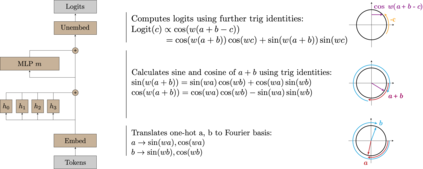

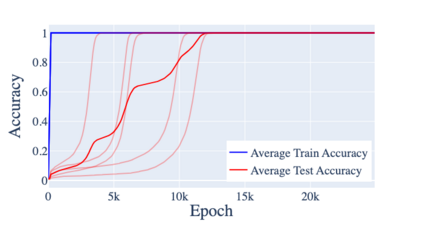

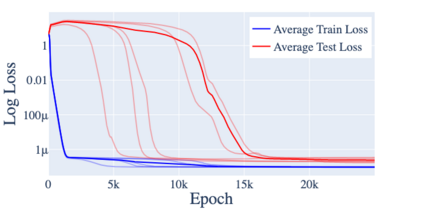

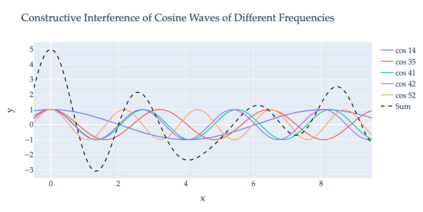

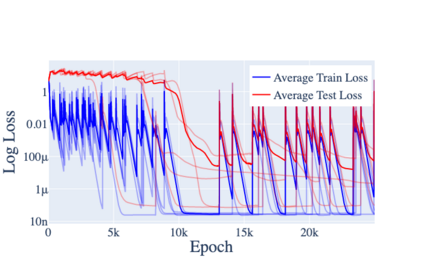

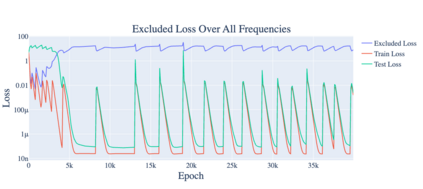

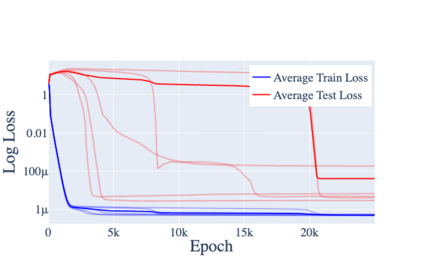

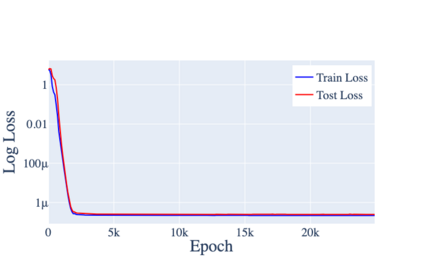

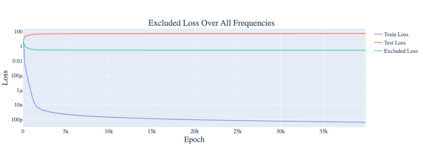

Neural networks often exhibit emergent behavior, where qualitatively new capabilities arise from scaling up the number of parameters, the amount of training data, or the number of training steps. One approach to understanding emergence is to find continuous *progress measures* that underlie the seemingly discontinuous qualitative changes. We argue that progress measures can be found via mechanistic interpretability: reverse-engineering learned behaviors into their individual components. As a case study, we investigate the recently discovered phenomenon of "grokking" exhibited by small transformers trained on modular addition tasks. We fully reverse-engineer the algorithm learned by these networks, which uses discrete Fourier transforms and trigonometric identities to convert addition to rotation about a circle. We confirm the algorithm by analyzing the activations and weights and by performing ablations in Fourier space. Based on this understanding, we define progress measures that allow us to study the dynamics of training and split training into three continuous phases: memorization, circuit formation, and cleanup. Our results show that grokking, rather than being a sudden shift, arises from the gradual amplification of structured mechanisms encoded in the weights, followed by the later removal of memorizing components.
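The "addition as rotation about a circle" idea can be illustrated with a small hand-written sketch. It is not the trained network and uses none of its weights. Each input is mapped to the angle `w_k * a` with `w_k = 2πk/p`. The two rotations are composed with the angle-addition identities, and each candidate answer `c` is scored by `cos(w_k(a + b − c))`. For a prime `p` and `k` not a multiple of `p`, that score equals 1 only when `c ≡ a + b (mod p)`, so summing over a few frequencies and taking the argmax recovers the sum. The modulus `p = 113`, the frequency set `(14, 35, 41)`, and the function name `modular_add_via_rotation` are illustrative assumptions, not values taken from the text.

```python
import math


def modular_add_via_rotation(a: int, b: int, p: int = 113, freqs=(14, 35, 41)) -> int:
    """Return (a + b) mod p using only trigonometry on the unit circle.

    Hypothetical sketch: p and freqs are illustrative choices, not learned values.
    Assumes p is prime and no frequency is a multiple of p.
    """
    logits = [0.0] * p
    for k in freqs:
        w = 2 * math.pi * k / p
        # Place a and b on the unit circle at angles w*a and w*b.
        ca, sa = math.cos(w * a), math.sin(w * a)
        cb, sb = math.cos(w * b), math.sin(w * b)
        # Angle-addition identities compose the two rotations into angle w*(a+b).
        cab = ca * cb - sa * sb  # cos(w(a+b))
        sab = sa * cb + ca * sb  # sin(w(a+b))
        for c in range(p):
            # cos(w(a+b-c)) expanded via the difference identity;
            # it reaches its maximum of 1 only when c ≡ a+b (mod p).
            logits[c] += cab * math.cos(w * c) + sab * math.sin(w * c)
    # The correct answer is the only c where every frequency contributes 1.
    return max(range(p), key=logits.__getitem__)


if __name__ == "__main__":
    print(modular_add_via_rotation(100, 50))  # → 37, since 150 mod 113 = 37
```

Using several frequencies does not change the argmax. It widens the gap between the correct logit and the runner-up, which loosely mirrors how the trained network combines a handful of key frequencies.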
