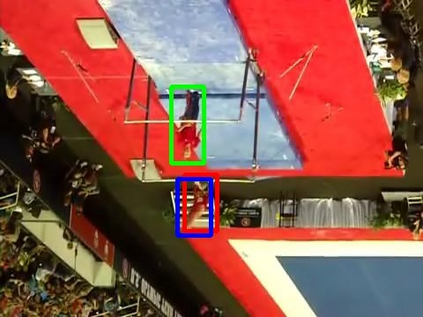

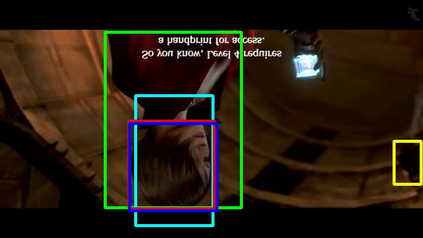

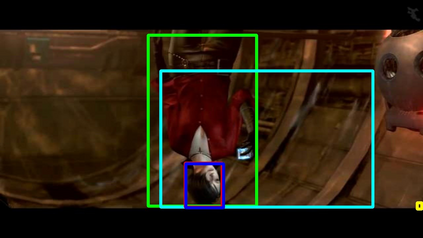

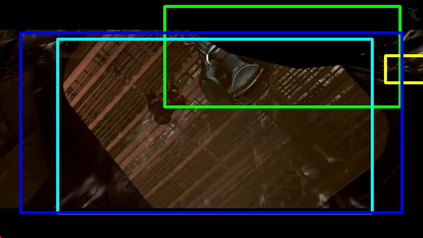

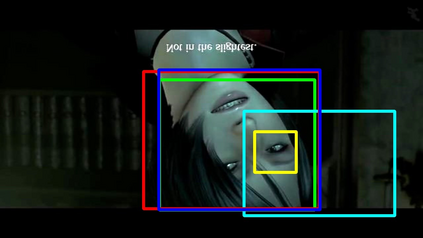

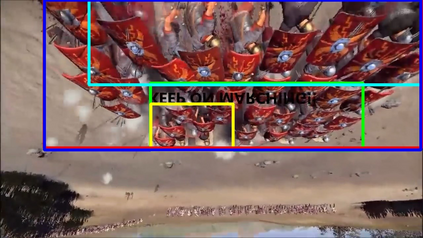

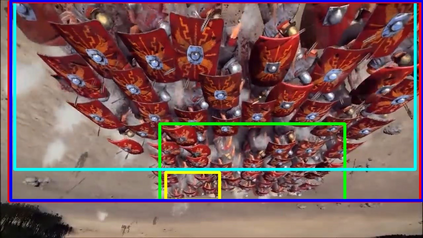

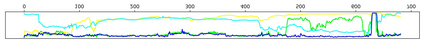

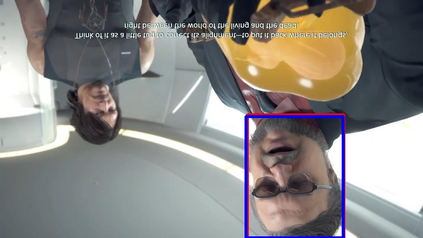

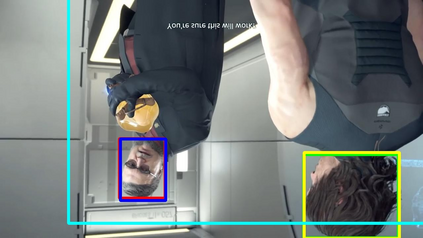

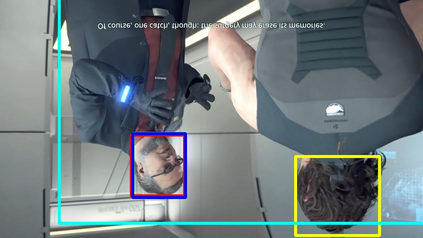

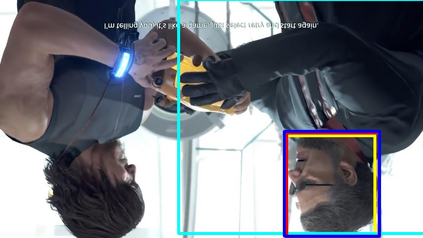

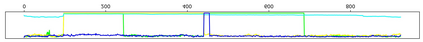

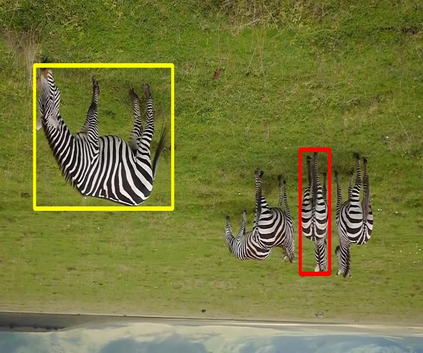

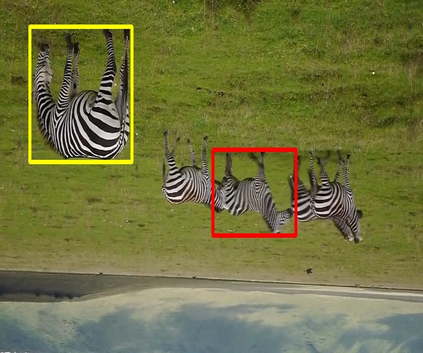

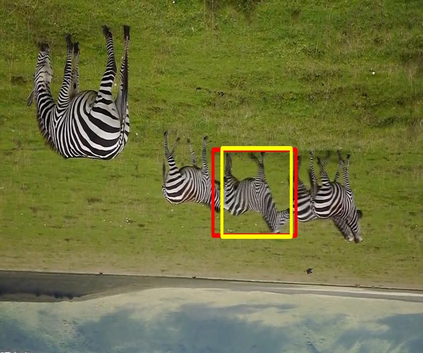

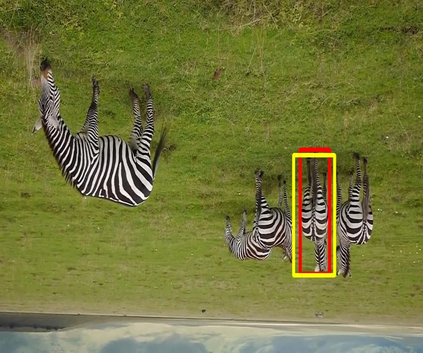

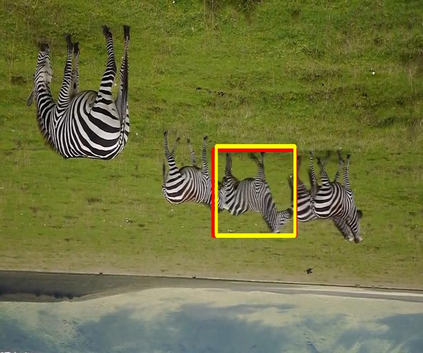

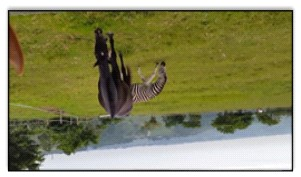

Tracking by natural language specification aims to locate the referred target in a video sequence based on a natural language description. Existing algorithms solve this problem in two steps, visual grounding and tracking, and deploy separate grounding and tracking models to implement them. Such a separated framework overlooks the link between visual grounding and tracking: the natural language description provides global semantic cues for localizing the target in both steps. Moreover, the separated framework can hardly be trained end-to-end. To handle these issues, we propose a joint visual grounding and tracking framework, which reformulates grounding and tracking as a unified task: localizing the referred target based on the given visual-language references. Specifically, we propose a multi-source relation modeling module to effectively build the relation between the visual-language references and the test image. In addition, we design a temporal modeling module that provides a temporal clue, guided by the global semantic information, which effectively improves the model's adaptability to appearance variations of the target. Extensive experimental results on TNL2K, LaSOT, OTB99, and RefCOCOg demonstrate that our method performs favorably against state-of-the-art algorithms for both tracking and grounding. Code is available at https://github.com/lizhou-cs/JointNLT.

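
The unified formulation in the abstract (localizing the target from visual-language references, whether the references are language only or language plus a template) can be illustrated with a small sketch. This is not the authors' implementation, which lives in the linked repository. It is a minimal NumPy illustration that assumes token features have already been extracted. The function names (`cross_attention`, `build_references`, `localize`), the single-head attention, and the argmax-over-grid readout are all hypothetical simplifications, and the temporal modeling module is omitted.

```python
import numpy as np


def softmax(x, axis=-1):
    # Numerically stable softmax.
    x = x - x.max(axis=axis, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)


def cross_attention(queries, references):
    """Single-head scaled dot-product attention.

    Test-image tokens (queries) attend to reference tokens.
    Returns the attended features and the attention weights.
    """
    d = queries.shape[-1]
    weights = softmax(queries @ references.T / np.sqrt(d), axis=-1)
    return weights @ references, weights


def build_references(language_tokens, template_tokens=None):
    """Assumed convention for this sketch only:
    grounding uses language tokens alone,
    tracking uses language tokens plus template tokens.
    """
    if template_tokens is None:
        return language_tokens
    return np.concatenate([language_tokens, template_tokens], axis=0)


def localize(test_tokens, references, grid_hw):
    """Score each test token against its attended reference summary
    and return the (row, col) grid cell with the highest score."""
    attended, _ = cross_attention(test_tokens, references)
    scores = np.sum(test_tokens * attended, axis=-1)
    idx = int(np.argmax(scores))
    return divmod(idx, grid_hw[1])


# Toy usage with random features (hypothetical sizes).
rng = np.random.default_rng(0)
dim, grid = 16, (4, 4)
language = rng.normal(size=(5, dim))
template = rng.normal(size=(9, dim))
test_image = rng.normal(size=(grid[0] * grid[1], dim))

# Grounding on the first frame: language is the only reference.
first_cell = localize(test_image, build_references(language), grid)
# Tracking on later frames: language and template are both references.
later_cell = localize(test_image, build_references(language, template), grid)
```

The point of the sketch is that the same `localize` call serves both steps and only the reference set changes, which is what allows a single model to be trained end-to-end instead of two separate ones.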