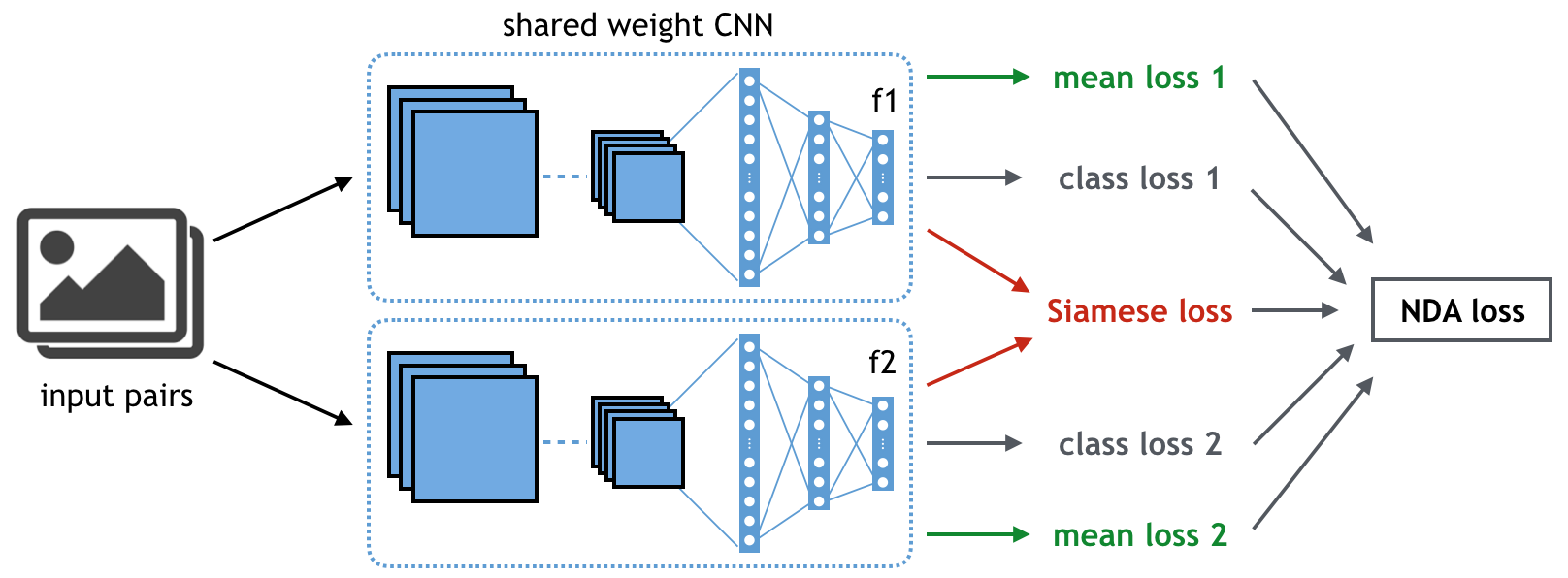

Discriminative features play an important role in image and object classification, as well as in other research fields such as semi-supervised learning, fine-grained classification, and out-of-distribution detection. Inspired by Linear Discriminant Analysis (LDA), we propose an optimization called Neural Discriminant Analysis (NDA) for Deep Convolutional Neural Networks (DCNNs). NDA transforms deep features to make them more discriminative and thereby improves performance on various tasks. Our proposed optimization has two primary goals, addressing intra-class and inter-class variance respectively. The first is to minimize the variance within each individual class. The second is to maximize the pairwise distances between features coming from different classes. We evaluate NDA in four research fields: general supervised classification, fine-grained classification, semi-supervised learning, and out-of-distribution detection. In all of these fields, NDA improves performance over baseline methods that do not use it. Moreover, using NDA, we also surpass the state of the art on the four tasks across various test datasets.
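The two goals above can be illustrated with a minimal, framework-free sketch. It assumes the intra-class term is the mean squared Euclidean distance of each feature to its class mean, averaged over classes, and the inter-class term is the mean Euclidean distance over all pairs of features with different labels. The weighting `alpha`/`beta` and all function names (`intra_class_variance`, `inter_class_distance`, `nda_style_loss`) are hypothetical choices for illustration; this is not the exact NDA formulation.

```python
from collections import defaultdict
from itertools import combinations
from math import dist  # Euclidean distance, Python 3.8+


def intra_class_variance(features, labels):
    """Average over classes of the mean squared distance to the class mean (assumed form)."""
    groups = defaultdict(list)
    for f, y in zip(features, labels):
        groups[y].append(f)
    total = 0.0
    for fs in groups.values():
        dim = len(fs[0])
        mean = [sum(f[d] for f in fs) / len(fs) for d in range(dim)]
        total += sum(dist(f, mean) ** 2 for f in fs) / len(fs)
    return total / len(groups)


def inter_class_distance(features, labels):
    """Mean pairwise distance between features whose labels differ (assumed form)."""
    pairs = combinations(zip(features, labels), 2)
    dists = [dist(a, b) for (a, ya), (b, yb) in pairs if ya != yb]
    return sum(dists) / len(dists) if dists else 0.0


def nda_style_loss(features, labels, alpha=1.0, beta=1.0):
    """Hypothetical combined objective: shrink classes, push classes apart.

    Minimizing this lowers intra-class variance and raises inter-class distance.
    """
    return (alpha * intra_class_variance(features, labels)
            - beta * inter_class_distance(features, labels))


if __name__ == "__main__":
    feats = [[0.0, 0.0], [0.0, 2.0], [4.0, 0.0], [4.0, 2.0]]
    labs = [0, 0, 1, 1]
    print(nda_style_loss(feats, labs))
```

In a DCNN, the same terms would be computed on a mini-batch of deep features with a tensor library so they can be backpropagated alongside the classification loss; the plain-Python version only shows what each term measures.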
