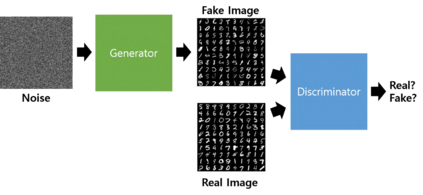

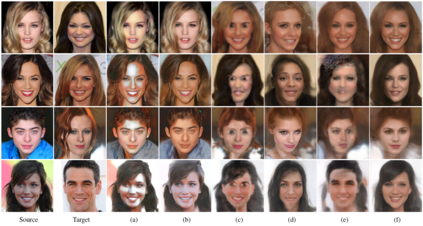

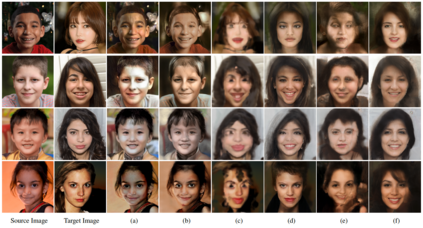

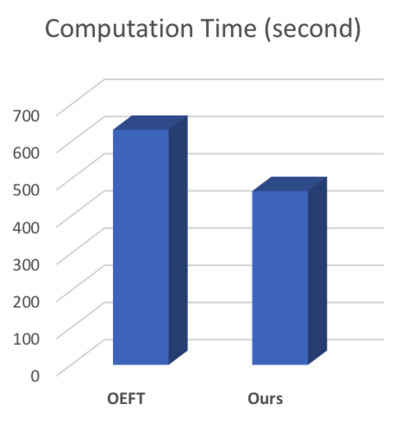

Existing state-of-the-art techniques for exemplar-based image-to-image translation have several critical limitations. First, they cannot translate an input image tuple (source, target) that is not aligned. Second, we observe that they generalize poorly to unseen images. To overcome these limitations, we propose Multiple GAN Inversion for Exemplar-based Image-to-Image Translation. Our Multiple GAN Inversion avoids human intervention through a self-deciding algorithm that chooses the number of layers based on the Fréchet Inception Distance (FID), selecting the more plausible image reconstruction among multiple hypotheses without any training or supervision. Experimental results show the advantage of the proposed method over existing state-of-the-art exemplar-based image-to-image translation methods.
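
The layer-selection step can be illustrated with a small sketch. The abstract does not say how the hypotheses are produced or which images FID is measured against, so the code below *assumes* that each candidate number of layers yields a set of reconstructed images, that Inception features have already been extracted for those reconstructions and for a set of reference images, and that the candidate with the lowest FID is kept. It uses the standard FID formula, d² = ‖μ_a − μ_b‖² + Tr(Σ_a + Σ_b − 2(Σ_a Σ_b)^{1/2}). The names `frechet_distance` and `select_num_layers` are hypothetical, and feature extraction is omitted.

```python
import numpy as np


def _psd_sqrt(mat):
    """Square root of a symmetric positive semi-definite matrix via eigendecomposition."""
    vals, vecs = np.linalg.eigh(mat)
    vals = np.clip(vals, 0.0, None)  # clamp tiny negative eigenvalues caused by round-off
    return (vecs * np.sqrt(vals)) @ vecs.T


def frechet_distance(feats_a, feats_b):
    """FID-style Frechet distance between two feature sets (rows = samples, cols = dims)."""
    mu_a, mu_b = feats_a.mean(axis=0), feats_b.mean(axis=0)
    cov_a = np.cov(feats_a, rowvar=False)
    cov_b = np.cov(feats_b, rowvar=False)
    # Tr((cov_a cov_b)^{1/2}) equals Tr((sqrt_a cov_b sqrt_a)^{1/2}),
    # and the inner matrix is symmetric PSD, so eigvalsh is safe to use.
    sqrt_a = _psd_sqrt(cov_a)
    middle = sqrt_a @ cov_b @ sqrt_a
    tr_sqrt = np.sqrt(np.clip(np.linalg.eigvalsh(middle), 0.0, None)).sum()
    diff = mu_a - mu_b
    return float(diff @ diff + np.trace(cov_a) + np.trace(cov_b) - 2.0 * tr_sqrt)


def select_num_layers(hypotheses, reference_feats):
    """Hypothetical self-deciding step: keep the layer count with the lowest FID.

    hypotheses: dict mapping a candidate number of layers -> features (N, D)
                of the reconstructions obtained with that many layers (assumed).
    reference_feats: features (M, D) of the reference images (assumed).
    """
    scores = {k: frechet_distance(f, reference_feats) for k, f in hypotheses.items()}
    best = min(scores, key=scores.get)
    return best, scores
```

Because the selection is a plain `min` over precomputed scores, it needs no training or supervision, which matches the property the abstract claims; the quality of the choice depends entirely on the assumed feature extractor and reference set.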
