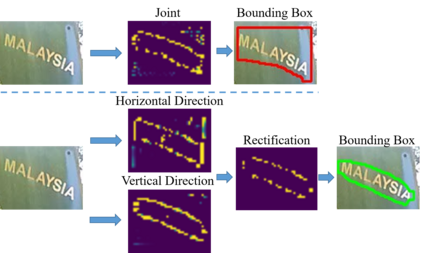

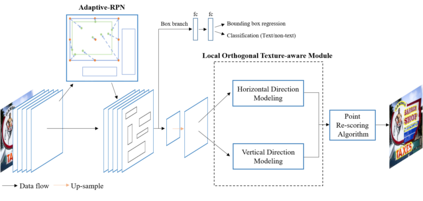

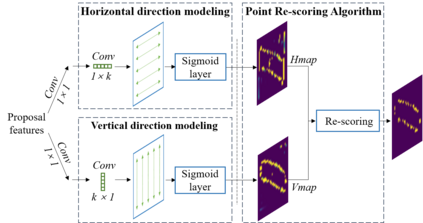

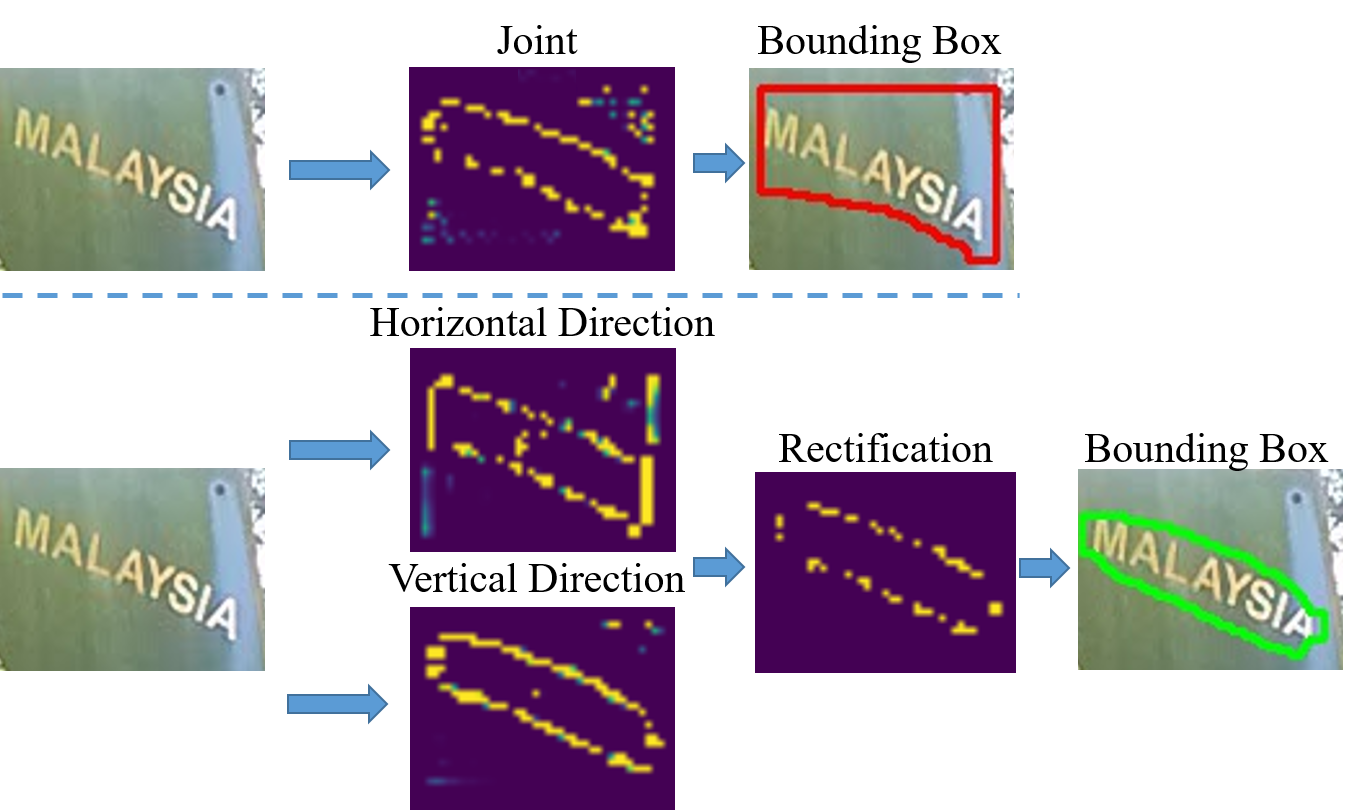

Scene text detection has witnessed rapid development in recent years. However, there still exist two main challenges: 1) many methods suffer from false positives in their text representations; 2) the large scale variance of scene texts makes it hard for a network to learn samples. In this paper, we propose ContourNet, which effectively handles these two problems, taking a further step toward accurate arbitrary-shaped text detection. First, a scale-insensitive Adaptive Region Proposal Network (Adaptive-RPN) is proposed to generate text proposals by focusing only on the Intersection over Union (IoU) values between predicted and ground-truth bounding boxes. Then, a novel Local Orthogonal Texture-aware Module (LOTM) models the local texture information of proposal features in two orthogonal directions and represents the text region with a set of contour points. Considering that strong unidirectional or weakly orthogonal activation is usually caused by the monotonous texture characteristic of false-positive patterns (e.g., streaks), our method effectively suppresses these false positives by outputting only predictions with a high response value in both orthogonal directions. This gives a more accurate description of text regions. Extensive experiments on three challenging datasets (Total-Text, CTW1500 and ICDAR2015) verify that our method achieves state-of-the-art performance. Code is available at https://github.com/wangyuxin87/ContourNet.
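The two ideas in the abstract can be illustrated with a minimal sketch. It is not the authors' implementation (see the repository linked above for that). The function names `box_iou`, `iou_loss`, and `contour_points`, the `1 - IoU` loss form, the nested-list heatmaps, and the `0.5` threshold are all assumptions made for illustration. The first part shows why an IoU-based objective is insensitive to scale; the second keeps only positions that respond strongly in both orthogonal directions.

```python
from typing import List, Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2), axis-aligned


def box_iou(a: Box, b: Box) -> float:
    """Intersection over Union of two axis-aligned boxes."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = max(0.0, a[2] - a[0]) * max(0.0, a[3] - a[1])
    area_b = max(0.0, b[2] - b[0]) * max(0.0, b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def iou_loss(pred: Box, gt: Box) -> float:
    """Assumed loss form (1 - IoU); it depends only on overlap, not box size."""
    return 1.0 - box_iou(pred, gt)


def contour_points(
    h_map: List[List[float]],
    v_map: List[List[float]],
    threshold: float = 0.5,  # hypothetical cut-off, not taken from the paper
) -> List[Tuple[int, int]]:
    """Return (x, y) positions whose response exceeds the threshold in BOTH
    the horizontal and the vertical response map."""
    points = []
    for y, (h_row, v_row) in enumerate(zip(h_map, v_map)):
        for x, (h, v) in enumerate(zip(h_row, v_row)):
            if h > threshold and v > threshold:
                points.append((x, y))
    return points


# Scale insensitivity: enlarging both boxes by 10x leaves the loss unchanged.
small = iou_loss((0, 0, 2, 2), (1, 1, 3, 3))
large = iou_loss((0, 0, 20, 20), (10, 10, 30, 30))

# Row 0 mimics a streak: strong in one direction only, so it is suppressed.
h_map = [[0.9, 0.9, 0.9], [0.1, 0.8, 0.1]]
v_map = [[0.1, 0.1, 0.1], [0.2, 0.7, 0.1]]
kept = contour_points(h_map, v_map)
```

In this toy input, the streak-like first row is discarded because its vertical response is weak, while the single position that is strong in both maps survives as a contour point.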
