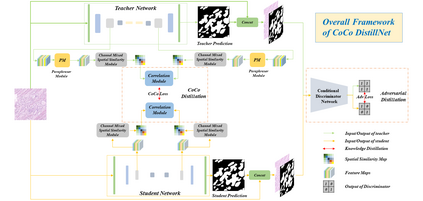

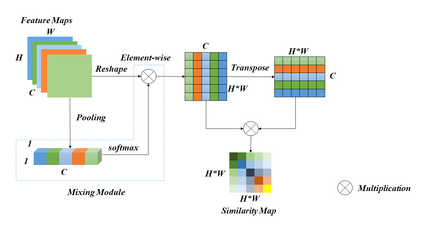

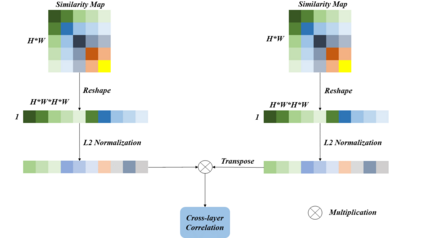

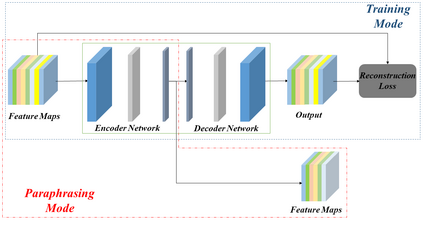

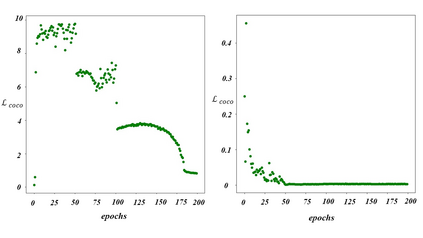

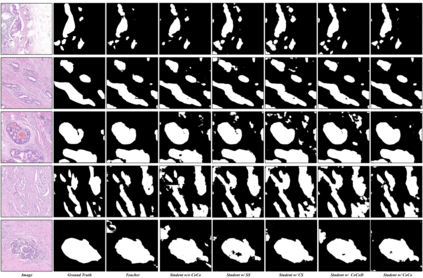

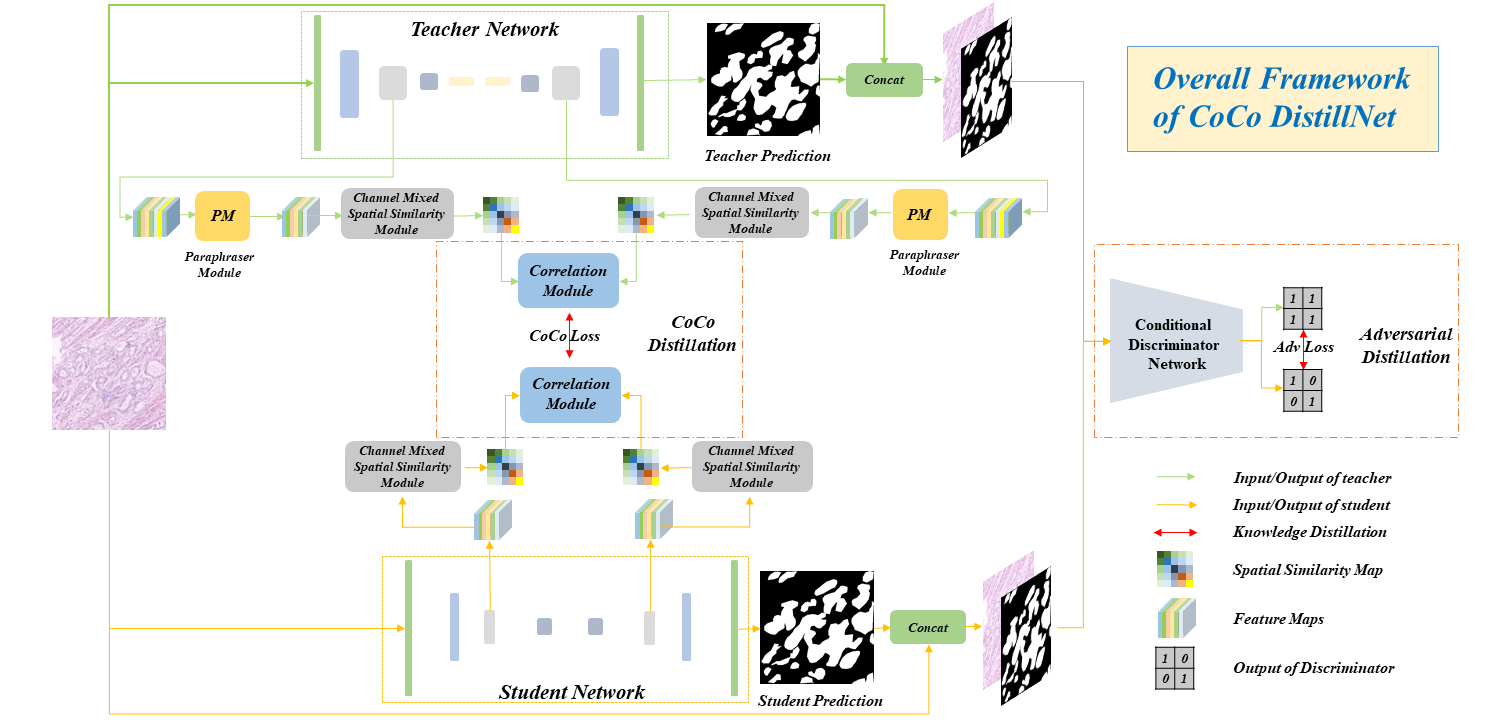

In recent years, deep convolutional neural networks have made significant advances in pathology image segmentation. However, pathology image segmentation faces a dilemma: higher-performance networks generally require more computational resources and storage. Because pathological images are inherently high-resolution, this limits the deployment of high-accuracy networks in real-world scenarios. To tackle this problem, we propose CoCo DistillNet, a novel Cross-layer Correlation (CoCo) knowledge distillation network for pathological gastric cancer segmentation. Knowledge distillation is a general technique that aims to improve the performance of a compact network by transferring knowledge from a cumbersome network. Concretely, CoCo DistillNet models the correlations of channel-mixed spatial similarity between different layers and then transfers this knowledge from a pre-trained cumbersome teacher network to an untrained compact student network. In addition, we use an adversarial learning strategy, called Adversarial Distillation (AD), to further promote the distillation procedure. Furthermore, to stabilize training, we use an unsupervised Paraphraser Module (PM) to boost knowledge paraphrasing in the teacher network. Extensive experiments on the Gastric Cancer Segmentation Dataset demonstrate the strong performance of CoCo DistillNet, which achieves state-of-the-art results.
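The abstract does not give the exact form of the cross-layer correlation, so the sketch below is only one hypothetical way to distill "channel-mixed spatial similarity between different layers". It assumes that each layer's (C, H, W) feature map is average-pooled to a shared grid, that each spatial position is described by its full (L2-normalized) channel vector, so the pairwise cosine similarity mixes all channels, and that the correlation between layers a and b is the product of their two affinity matrices. The student is then trained to match the teacher's matrices under a mean-squared loss. Because the matrices are (HW × HW), teacher and student may have different channel widths. The function names (`spatial_affinity`, `cross_layer_correlation`, `cross_layer_distill_loss`) are illustrative, not from the paper, and the Adversarial Distillation and Paraphraser Module components are omitted.

```python
import numpy as np


def pool_to(feat, size):
    """Average-pool a (C, H, W) feature map to (C, size, size).
    Assumes H and W are integer multiples of `size`."""
    c, h, w = feat.shape
    kh, kw = h // size, w // size
    return feat.reshape(c, size, kh, size, kw).mean(axis=(2, 4))


def spatial_affinity(feat, eps=1e-8):
    """Cosine similarity between every pair of spatial positions.
    Each position is represented by its whole channel vector (channel-mixed)."""
    c = feat.shape[0]
    x = feat.reshape(c, -1)                                  # (C, HW)
    x = x / (np.linalg.norm(x, axis=0, keepdims=True) + eps)
    return x.T @ x                                           # (HW, HW)


def cross_layer_correlation(feat_a, feat_b, size):
    """Hypothetical cross-layer term: product of the two layers' affinities,
    so entry (i, j) aggregates how positions i and j relate through both layers."""
    s_a = spatial_affinity(pool_to(feat_a, size))
    s_b = spatial_affinity(pool_to(feat_b, size))
    return s_a @ s_b / s_a.shape[0]                          # (HW, HW)


def cross_layer_distill_loss(teacher_feats, student_feats, size=4):
    """Mean squared difference between teacher and student cross-layer
    matrices, averaged over every layer pair (a < b)."""
    assert len(teacher_feats) == len(student_feats)
    n = len(teacher_feats)
    losses = []
    for a in range(n):
        for b in range(a + 1, n):
            m_t = cross_layer_correlation(teacher_feats[a], teacher_feats[b], size)
            m_s = cross_layer_correlation(student_feats[a], student_feats[b], size)
            losses.append(np.mean((m_t - m_s) ** 2))
    return float(np.mean(losses))


# Usage: random features stand in for a wide teacher and a narrow student.
rng = np.random.default_rng(0)
teacher = [rng.standard_normal((64, 32, 32)),
           rng.standard_normal((128, 16, 16)),
           rng.standard_normal((256, 8, 8))]
student = [rng.standard_normal((16, 32, 32)),
           rng.standard_normal((32, 16, 16)),
           rng.standard_normal((64, 8, 8))]
print(cross_layer_distill_loss(teacher, student))  # non-negative scalar
print(cross_layer_distill_loss(teacher, teacher))  # 0.0
```

In a real training loop, this term would be computed on teacher and student activations in an autodiff framework and added to the student's segmentation loss. NumPy is used here only to show the arithmetic.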
