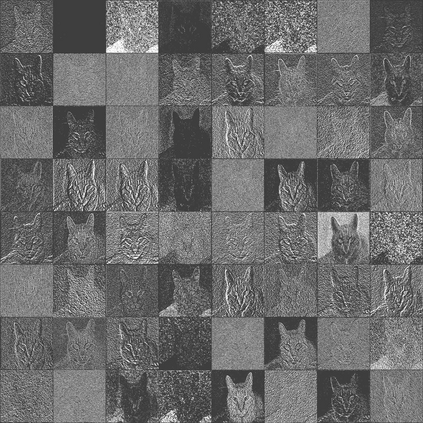

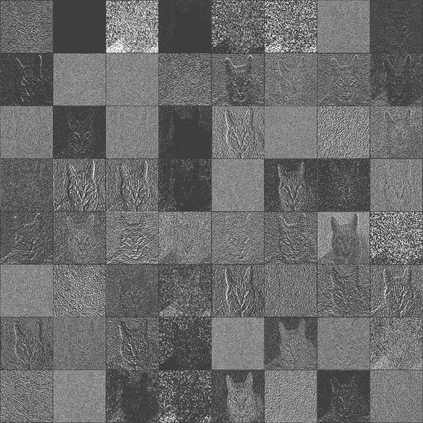

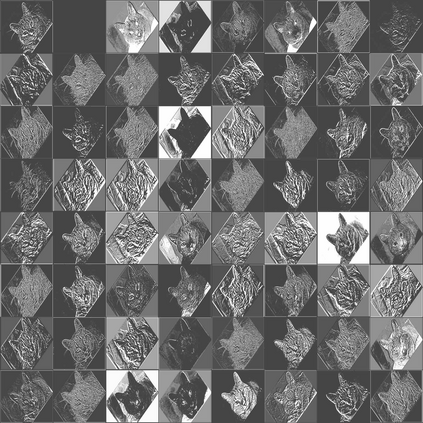

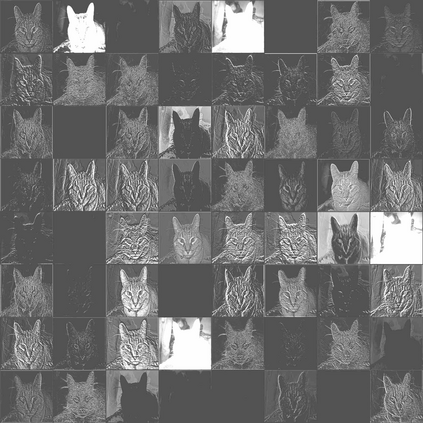

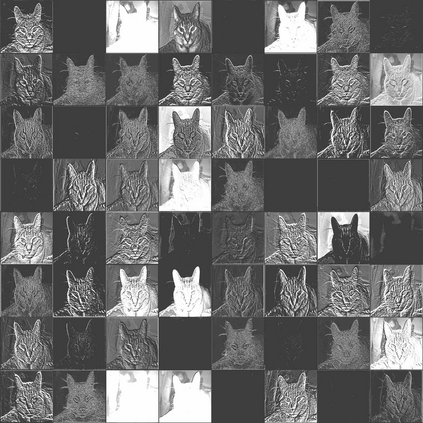

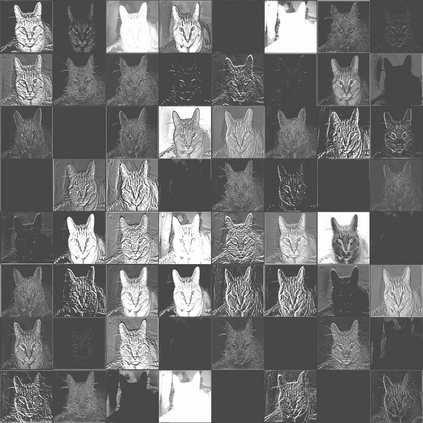

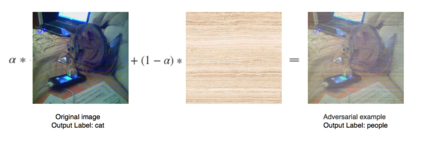

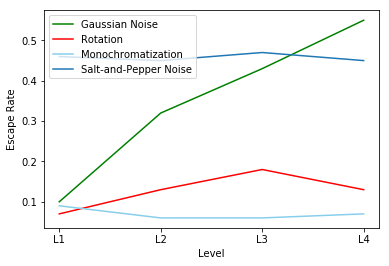

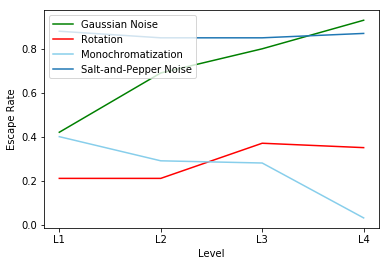

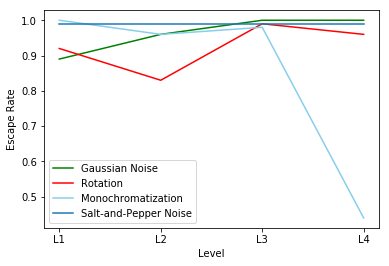

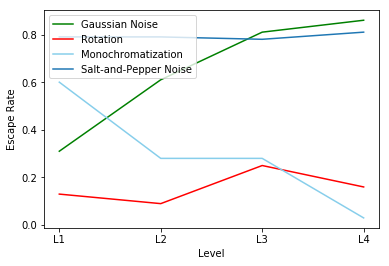

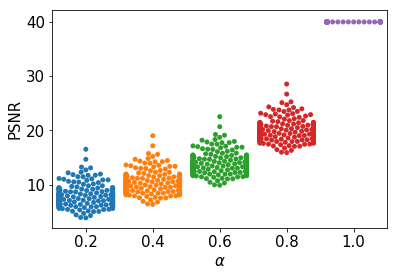

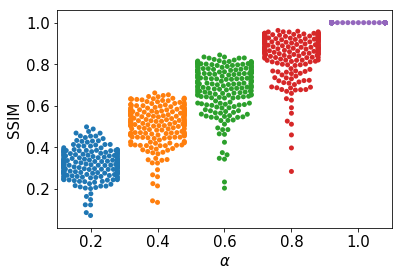

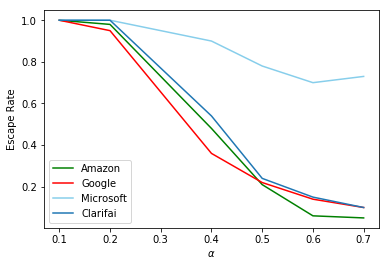

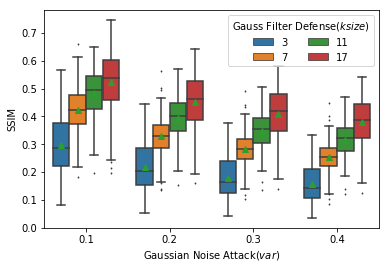

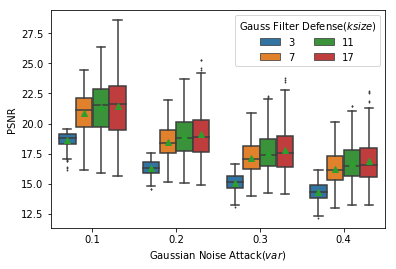

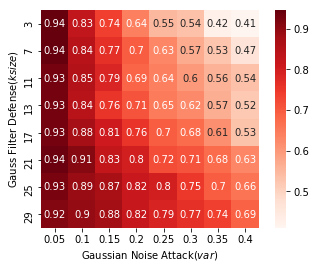

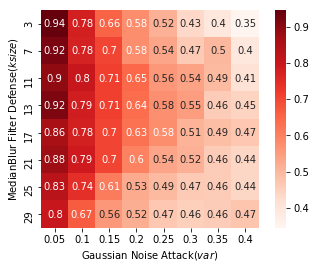

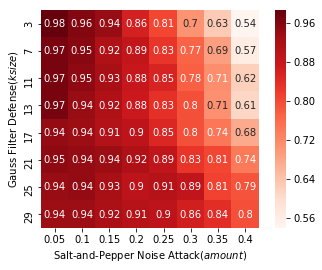

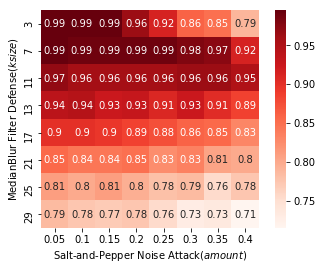

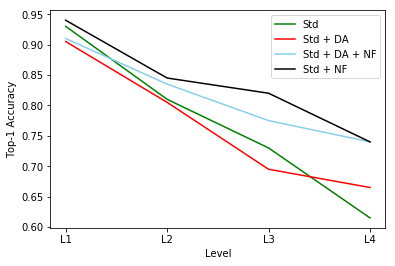

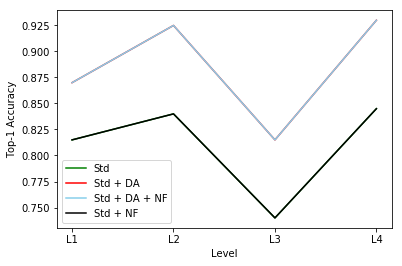

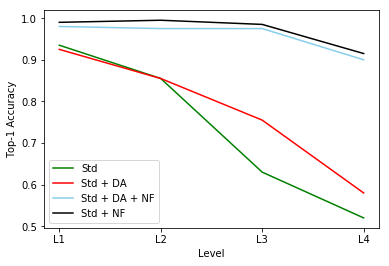

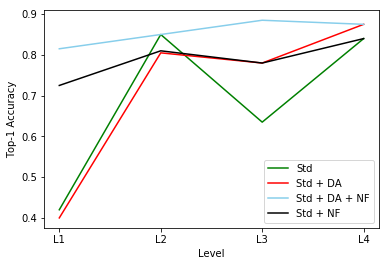

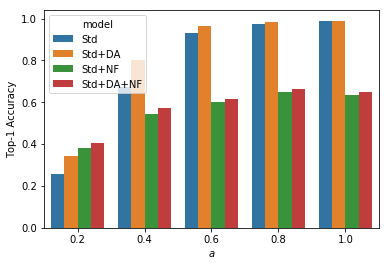

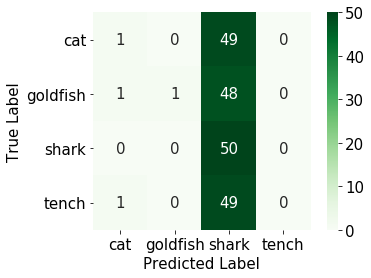

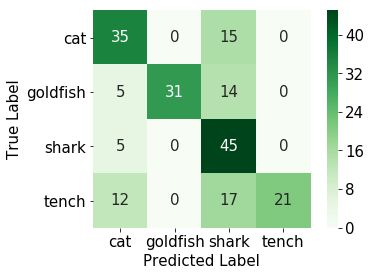

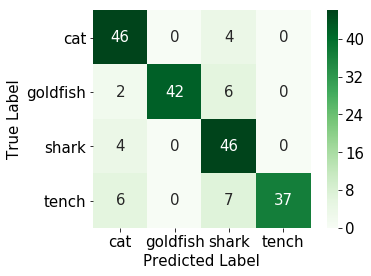

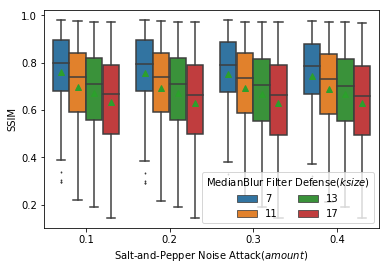

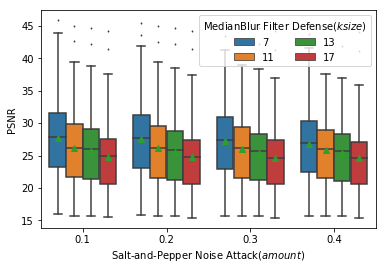

Many recent works have demonstrated that Deep Learning models are vulnerable to adversarial examples. Fortunately, generating adversarial examples usually requires white-box access to the victim model, whereas an attacker can typically access only the APIs exposed by cloud platforms. Thus, keeping models in the cloud can give a (false) sense of security. Unfortunately, cloud-based image classification services are not robust to simple transformations such as Gaussian noise, salt-and-pepper noise, rotation, and monochromatization. In this paper, (1) we propose a novel attack method called the Image Fusion (IF) attack, which achieves a high bypass rate, can be implemented with OpenCV alone, and is difficult to defend against; and (2) we make the first attempt to conduct an extensive empirical study of Simple Transformation (ST) attacks against real-world cloud-based classification services. Through evaluations on four popular cloud platforms, namely Amazon, Google, Microsoft, and Clarifai, we demonstrate that the ST attack has a success rate of approximately 100% on every platform except Amazon, where it is approximately 50%, and that the IF attack has a success rate of over 98% across the different classification services. (3) Finally, we discuss possible defenses to address these security challenges. Experiments show that our defense technique can effectively defend against known ST attacks.
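The four Simple Transformations named above are standard image operations. The following is a minimal NumPy sketch of each, assuming 8-bit RGB images stored as `uint8` arrays of shape (H, W, 3). The function names and default parameters (`sigma`, `amount`, the rotation angle) are illustrative assumptions, not the settings used in the paper, and the Image Fusion attack is deliberately not reproduced because the abstract does not describe how it works.

```python
import numpy as np


def gaussian_noise(img, sigma=10.0, rng=None):
    """Add zero-mean Gaussian noise with std `sigma` (0-255 intensity units)."""
    rng = np.random.default_rng() if rng is None else rng
    noisy = img.astype(np.float64) + rng.normal(0.0, sigma, size=img.shape)
    return np.clip(noisy, 0, 255).astype(np.uint8)


def salt_and_pepper(img, amount=0.02, rng=None):
    """Set a random fraction `amount` of pixels to black (pepper) or white (salt)."""
    rng = np.random.default_rng() if rng is None else rng
    out = img.copy()
    mask = rng.random(img.shape[:2])    # one draw per pixel, shared by all channels
    out[mask < amount / 2] = 0          # pepper
    out[mask > 1 - amount / 2] = 255    # salt
    return out


def rotate(img, angle_deg):
    """Rotate about the image centre with nearest-neighbour sampling.

    Output pixels whose source falls outside the image are left black.
    """
    h, w = img.shape[:2]
    theta = np.deg2rad(angle_deg)
    cy, cx = (h - 1) / 2.0, (w - 1) / 2.0
    ys, xs = np.indices((h, w))
    cos_t, sin_t = np.cos(theta), np.sin(theta)
    # Inverse mapping: for each output pixel, locate the source pixel.
    src_x = np.rint(cos_t * (xs - cx) + sin_t * (ys - cy) + cx).astype(int)
    src_y = np.rint(-sin_t * (xs - cx) + cos_t * (ys - cy) + cy).astype(int)
    valid = (src_x >= 0) & (src_x < w) & (src_y >= 0) & (src_y < h)
    out = np.zeros_like(img)
    out[valid] = img[src_y[valid], src_x[valid]]
    return out


def monochromatize(img):
    """Convert RGB to grayscale (BT.601 luma weights), replicated to 3 channels."""
    weights = np.array([0.299, 0.587, 0.114])
    gray = np.rint(img[..., :3].astype(np.float64) @ weights)
    gray = np.clip(gray, 0, 255).astype(np.uint8)
    # Keep the (H, W, 3) shape so the result is still an ordinary RGB image.
    return np.repeat(gray[..., None], 3, axis=-1)
```

In OpenCV, the same operations map onto `cv2.getRotationMatrix2D` with `cv2.warpAffine` for rotation and `cv2.cvtColor` for grayscale conversion. Note that OpenCV loads images in BGR order by default, so the luma weights above would need to be reversed for arrays read with `cv2.imread`.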
