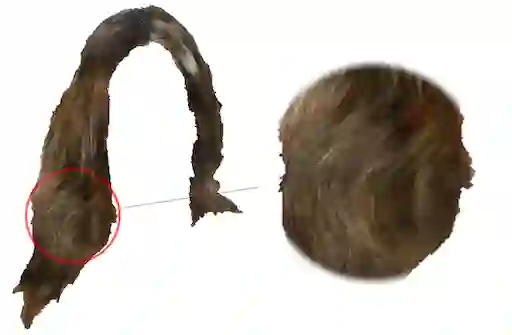

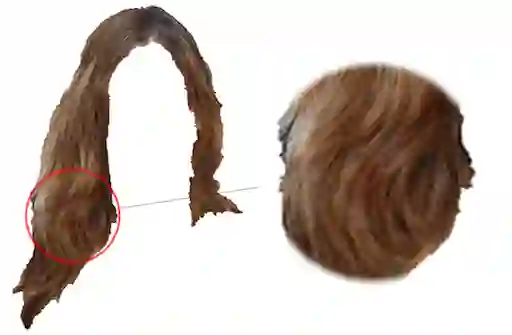

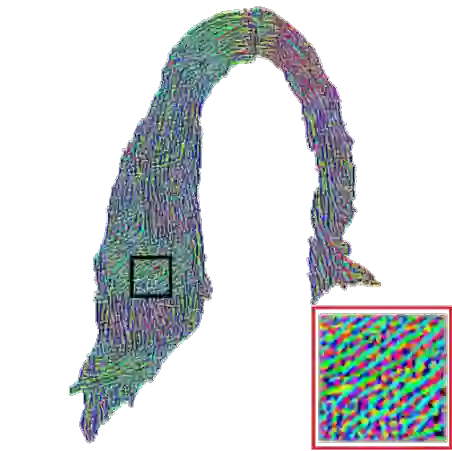

Generating plausible hair images from limited guidance, such as sparse sketches or low-resolution images, has become possible with the rise of Generative Adversarial Networks (GANs). Traditional image-to-image translation networks can generate recognizable results, but finer textures are usually lost and blurring artifacts are common. In this paper, we propose a two-phase generative model for high-quality hair image synthesis. The pipeline first generates a coarse image with an existing image translation model, then applies a re-generating network with self-enhancing capability to that coarse image. The self-enhancing capability comes from a proposed structure extraction layer, which extracts texture and orientation maps from a hair image. Extensive experiments on two tasks, Sketch2Hair and Hair Super-Resolution, demonstrate that our approach synthesizes plausible hair images with finer details and outperforms state-of-the-art methods.
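The abstract does not say how the structure extraction layer computes its orientation map. A common technique in hair imaging is to filter the image with a bank of oriented Gabor filters and keep, per pixel, the angle with the strongest response. The sketch below illustrates that idea in NumPy. It is an assumption for illustration, not the paper's layer: the filter parameters, the 16-angle bank, the `(cos 2θ, sin 2θ, strength)` encoding, and the helper names `gabor_kernel`, `filter2d`, `orientation_map`, and `structure_features` are all hypothetical, and plain NumPy is not a differentiable network layer.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view

# Hypothetical sketch: a Gabor-filter-bank orientation map, NOT the paper's
# structure extraction layer. All parameter values below are assumptions.


def gabor_kernel(theta, size=15, sigma_along=4.0, sigma_across=2.0, wavelength=4.0):
    """Complex Gabor kernel tuned to strands running at angle `theta` (radians).

    x is the column axis and y the row axis (rows grow downward), so theta=0
    means horizontal strands and theta=pi/2 means vertical strands.
    """
    half = size // 2
    ys, xs = np.mgrid[-half:half + 1, -half:half + 1].astype(float)
    along = xs * np.cos(theta) + ys * np.sin(theta)    # coordinate along the strand
    across = -xs * np.sin(theta) + ys * np.cos(theta)  # coordinate across the strand
    envelope = np.exp(-0.5 * (along**2 / sigma_along**2 + across**2 / sigma_across**2))
    kernel = envelope * np.exp(2j * np.pi * across / wavelength)
    # Remove the DC component so flat regions produce (almost) no response.
    kernel -= envelope * (kernel.sum() / envelope.sum())
    return kernel


def filter2d(image, kernel):
    """Same-size 2D correlation with reflect padding (odd square kernel)."""
    pad = kernel.shape[0] // 2
    padded = np.pad(image, pad, mode="reflect")
    windows = sliding_window_view(padded, kernel.shape)  # shape (H, W, k, k)
    return np.einsum("ijkl,kl->ij", windows, kernel)


def orientation_map(image, num_orientations=16, **kernel_args):
    """Per-pixel dominant strand angle in [0, pi) and its filter-response strength."""
    thetas = np.arange(num_orientations) * np.pi / num_orientations
    # The magnitude of the complex response does not depend on stripe phase.
    responses = np.stack(
        [np.abs(filter2d(image, gabor_kernel(t, **kernel_args))) for t in thetas]
    )
    orientation = thetas[responses.argmax(axis=0)]
    strength = responses.max(axis=0)
    return orientation, strength


def structure_features(image, num_orientations=16):
    """Hypothetical 3-channel structure map: (cos 2θ, sin 2θ, normalized strength)."""
    orientation, strength = orientation_map(image, num_orientations)
    strength = strength / (strength.max() + 1e-8)
    # Doubling the angle maps theta and theta + pi to the same encoding,
    # since a strand orientation has no preferred direction.
    return np.stack([np.cos(2 * orientation), np.sin(2 * orientation), strength])
```

Using the complex (quadrature) kernel rather than only its cosine part keeps the response strong whether a pixel sits on a bright strand or in the dark gap between strands. With 16 orientations the angular resolution is π/16 (11.25°). A learned layer could replace these fixed filters, but the per-pixel "strongest orientation" idea would be the same.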
