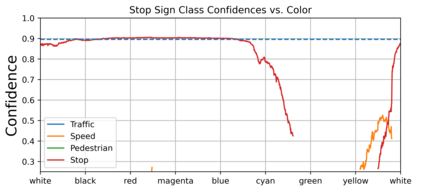

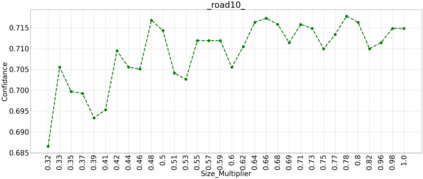

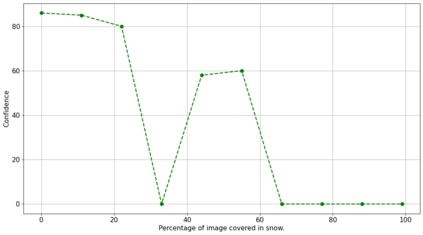

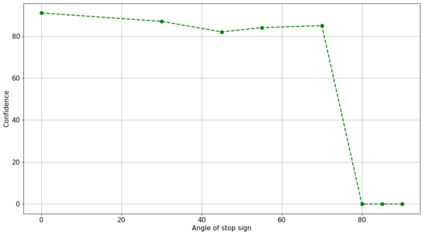

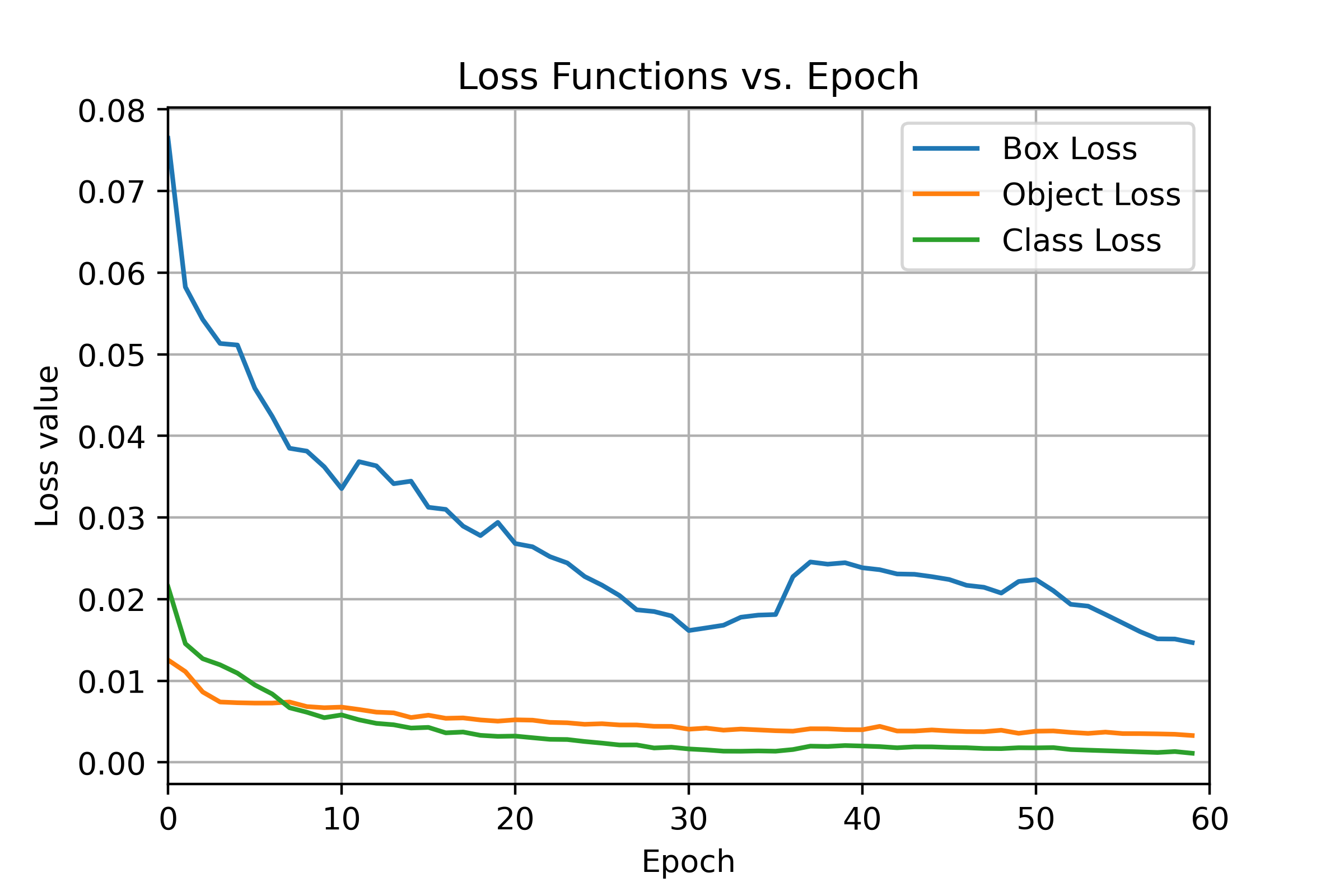

The widespread adoption of image processing has made Object Recognition (OR) models essential to many applications, where they enable crucial services. Among these applications, traffic sign recognition is a popular research topic because of its critical role in the development of autonomous vehicles. Despite this importance, real-world conditions, such as alterations to traffic signs, can degrade the performance of OR models. This study investigates how altered traffic signs affect the accuracy and effectiveness of object recognition, using a publicly available dataset to introduce alterations in shape, color, content, visibility, angle, and background. Focusing on the YOLOv7 (You Only Look Once) model, the study demonstrates a notable decline in detection and classification accuracy when the model is confronted with traffic signs in unusual conditions, including altered signs. Notably, the alterations explored in this study are benign examples and do not involve algorithms used to generate adversarial machine learning samples. The study highlights the importance of making object detection models more robust in real-life scenarios and the need for further investigation to improve their accuracy and reliability.
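To make the idea of benign alterations concrete, the following is a minimal sketch of three of the alteration types named above (color, visibility, and angle), applied to a synthetic image with NumPy. It is an illustration under stated assumptions, not the study's actual pipeline: the function names, the gray occlusion patch, the 90-degree rotation steps, and the synthetic "sign" are all hypothetical choices made for this example.

```python
import numpy as np


def alter_color(img: np.ndarray) -> np.ndarray:
    """Hypothetical color alteration: swap the red and blue channels."""
    return img[..., ::-1].copy()


def reduce_visibility(img: np.ndarray, fraction: float = 0.3) -> np.ndarray:
    """Hypothetical visibility alteration: cover the bottom `fraction`
    of the image rows with a flat gray patch (a crude occlusion)."""
    out = img.copy()
    rows = int(round(img.shape[0] * fraction))
    if rows > 0:
        out[-rows:, :, :] = 128
    return out


def change_angle(img: np.ndarray, quarter_turns: int = 1) -> np.ndarray:
    """Hypothetical angle alteration: rotate in 90-degree steps.
    Arbitrary angles would need an image library; this keeps the
    sketch dependency-free beyond NumPy."""
    return np.rot90(img, k=quarter_turns, axes=(0, 1)).copy()


# Synthetic stand-in for a cropped sign: a 10x20 pure-red RGB image.
sign = np.zeros((10, 20, 3), dtype=np.uint8)
sign[..., 0] = 255

# Each variant could then be passed to a detector to compare its
# predictions against those for the unaltered image.
variants = {
    "color": alter_color(sign),
    "visibility": reduce_visibility(sign),
    "angle": change_angle(sign),
}
```

None of these transformations is optimized against a model, which is what separates benign alterations of this kind from adversarial examples.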
