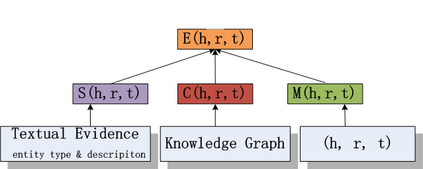

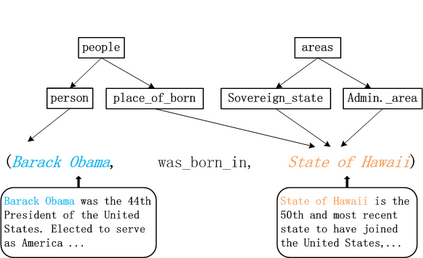

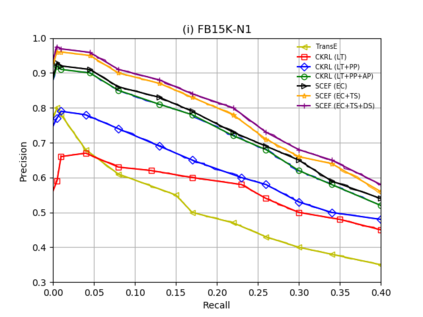

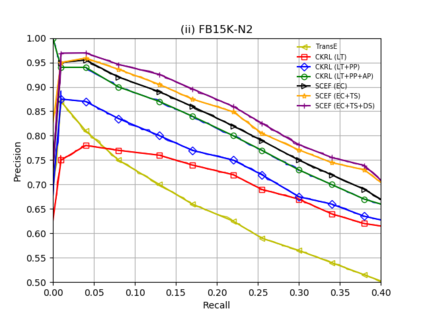

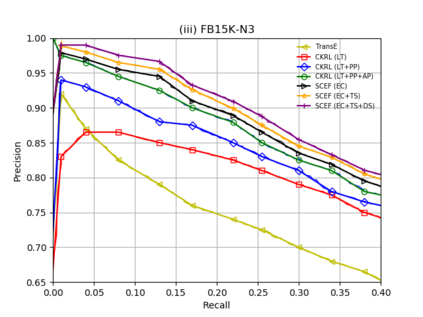

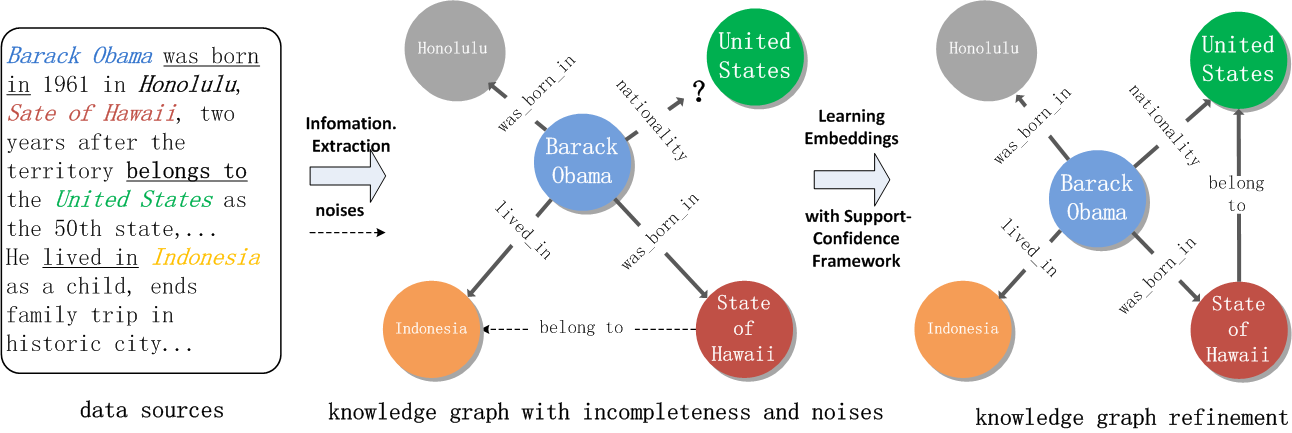

Knowledge graph (KG) refinement mainly aims at KG completion and correction (i.e., error detection). However, most conventional KG embedding models focus only on KG completion, under the unreasonable assumption that all facts in the KG hold without noise, and ignore error detection, which is equally essential for KG refinement. In this paper, we propose a novel support-confidence-aware KG embedding framework (SCEF), which performs KG completion and correction simultaneously by learning knowledge representations with both triple support and triple confidence. Specifically, we build the model's energy function by incorporating support and confidence into a conventional translation-based model. To make our triple support and confidence more sufficient and robust, we consider not only the internal structural information in the KG, studying approximate relation entailment as triple confidence constraints, but also external textual evidence, proposing two kinds of triple support based on entity types and entity descriptions, respectively. Through extensive experiments on real-world datasets, we demonstrate SCEF's effectiveness.
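The abstract does not give the exact form of the energy function, so the following is only a minimal sketch of the general idea, not SCEF's actual formulation. It assumes a TransE-style energy `||h + r - t||` and a margin ranking loss scaled by the product of a per-triple support and confidence, both taken to lie in [0, 1]. The function names, the multiplicative weighting, and the toy embeddings are all illustrative assumptions.

```python
import math


def transe_energy(h, r, t):
    """TransE-style energy ||h + r - t||_2; lower means more plausible."""
    return math.sqrt(sum((hi + ri - ti) ** 2 for hi, ri, ti in zip(h, r, t)))


def weighted_margin_loss(pos, neg, support, confidence, margin=1.0):
    """Margin ranking loss for one (observed, corrupted) triple pair.

    Assumption: the loss is scaled by support * confidence, so triples
    that look noisy contribute less to training. This weighting scheme is
    hypothetical and is not taken from the paper.
    """
    weight = support * confidence
    hinge = max(0.0, margin + transe_energy(*pos) - transe_energy(*neg))
    return weight * hinge


# Toy 2-d embeddings, made up for illustration.
h, r, t = [0.0, 0.0], [1.0, 0.0], [1.0, 0.0]
t_corrupt = [1.0, 0.5]  # corrupted tail for the negative triple

# Same triple pair, once fully trusted and once treated as likely noise.
trusted = weighted_margin_loss((h, r, t), (h, r, t_corrupt), 1.0, 1.0)
noisy = weighted_margin_loss((h, r, t), (h, r, t_corrupt), 0.5, 0.2)
```

In this sketch, a triple with low support or confidence yields a proportionally smaller loss, which is one plausible way an embedding model could avoid fitting erroneous facts while still learning from reliable ones.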
