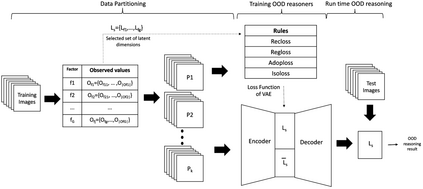

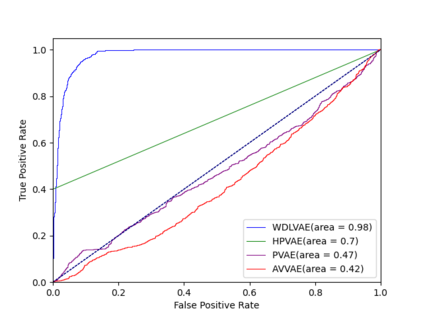

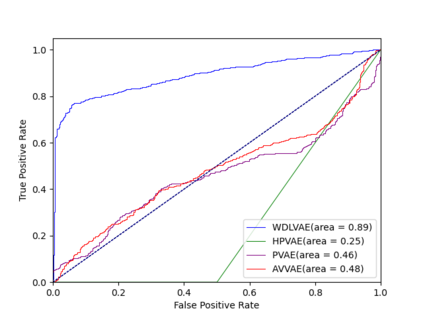

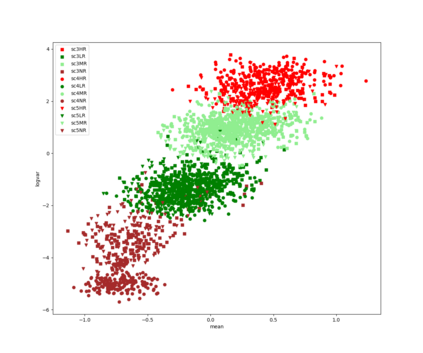

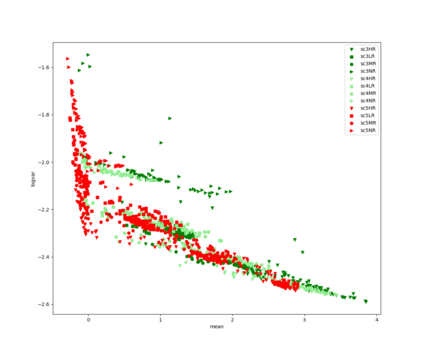

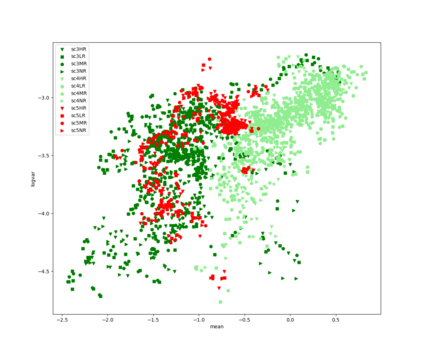

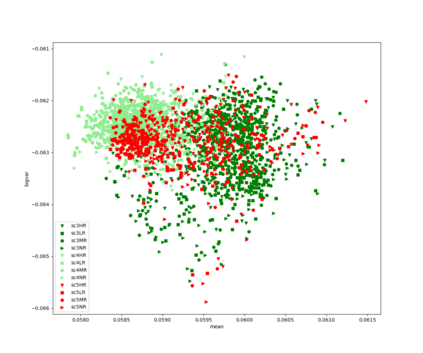

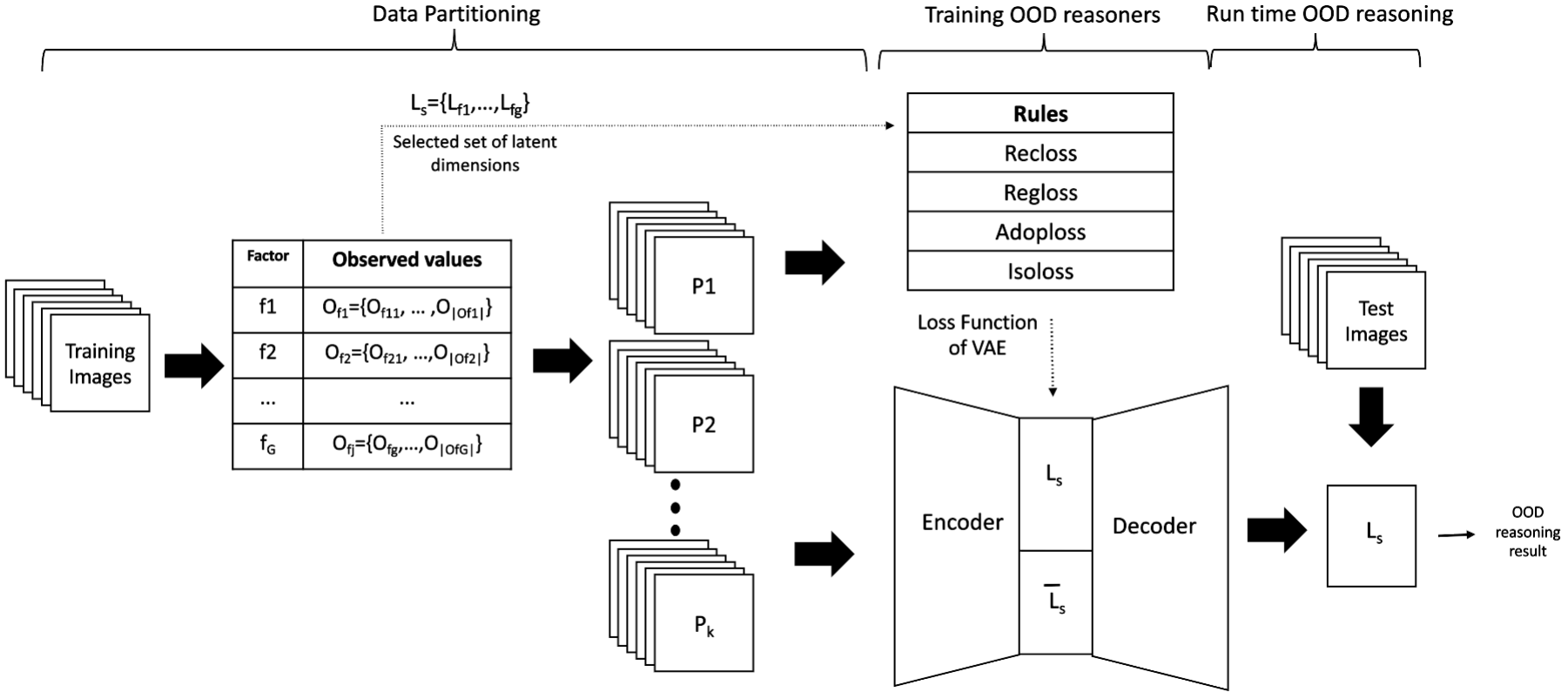

Out-of-distribution (OOD) detection, i.e., finding test samples drawn from a different distribution than the training set, and reasoning about such samples (OOD reasoning) are both necessary to ensure the safety of results generated by machine learning models. Recently, there have been promising results for OOD detection in the latent space of variational autoencoders (VAEs). However, without disentanglement, VAEs cannot perform OOD reasoning. Disentanglement ensures a one-to-many mapping between generative factors of OOD (e.g., rain in image data) and the latent variables to which they are encoded. Previous literature has focused on weakly supervised disentanglement on simple datasets with known and independent generative factors. In practice, however, achieving full disentanglement through weak supervision is impossible for complex datasets, such as Carla, with unknown and abstract generative factors. We therefore propose an OOD reasoning framework that learns a partially disentangled VAE to reason about complex datasets. Our framework consists of three steps: partitioning data based on observed generative factors, training a VAE as a logic tensor network that satisfies disentanglement rules, and run-time OOD reasoning. We evaluate our approach on the Carla dataset and compare the results against three state-of-the-art methods. Our framework outperforms these methods in terms of disentanglement and end-to-end OOD reasoning.
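To make the run-time step concrete, the following is a minimal sketch of how OOD reasoning over a partially disentangled latent space might look. It is not the authors' implementation. It assumes an encoder that outputs a mean and log-variance per latent dimension, a hypothetical `FACTOR_DIMS` table assigning each observed generative factor to several latent dimensions (the one-to-many mapping), a per-factor score given by the KL divergence from a standard normal prior, and thresholds taken as a quantile of in-distribution scores. The factor names, the scoring rule, and the quantile are all illustrative assumptions.

```python
import math

# Hypothetical assignment of observed generative factors to latent dimensions
# (one factor -> many latent variables). Not taken from the paper.
FACTOR_DIMS = {"rain": [0, 1], "brightness": [2, 3, 4]}


def kl_per_dim(mu, logvar):
    """KL( N(mu, exp(logvar)) || N(0, 1) ) for each latent dimension."""
    return [0.5 * (math.exp(lv) + m * m - 1.0 - lv) for m, lv in zip(mu, logvar)]


def factor_scores(mu, logvar, factor_dims=FACTOR_DIMS):
    """Sum the per-dimension KL over the dimensions assigned to each factor."""
    kl = kl_per_dim(mu, logvar)
    return {name: sum(kl[d] for d in dims) for name, dims in factor_dims.items()}


def calibrate(encodings, quantile=0.95, factor_dims=FACTOR_DIMS):
    """Choose a per-factor threshold as a quantile of in-distribution scores.

    `encodings` is a list of (mu, logvar) pairs from in-distribution data.
    """
    all_scores = [factor_scores(mu, lv, factor_dims) for mu, lv in encodings]
    thresholds = {}
    for name in factor_dims:
        ranked = sorted(s[name] for s in all_scores)
        idx = min(len(ranked) - 1, int(quantile * len(ranked)))
        thresholds[name] = ranked[idx]
    return thresholds


def reason(mu, logvar, thresholds, factor_dims=FACTOR_DIMS):
    """Return the factors whose score exceeds the calibrated threshold."""
    scores = factor_scores(mu, logvar, factor_dims)
    return [name for name in factor_dims if scores[name] > thresholds[name]]
```

Under these assumptions, a sample whose encoding shifts only in the dimensions assigned to `rain` would be attributed to that factor alone, which is the kind of attribution an entangled latent space cannot provide.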
