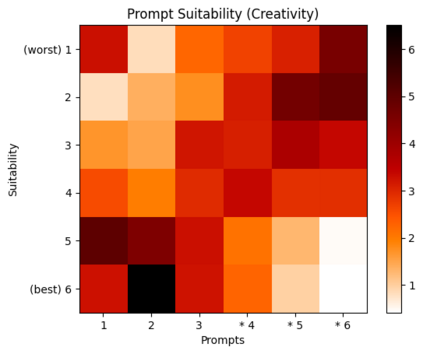

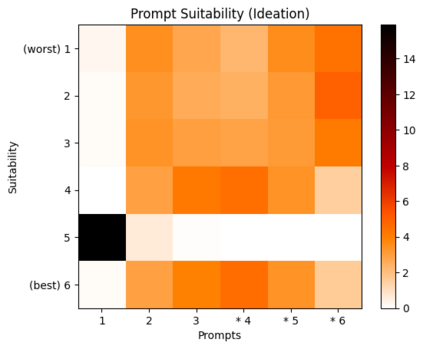

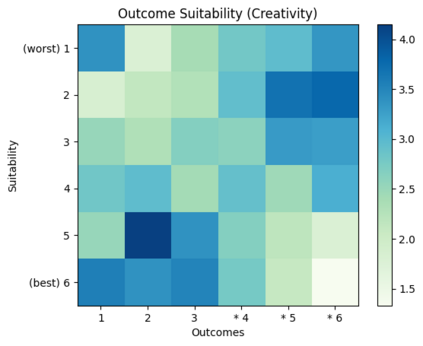

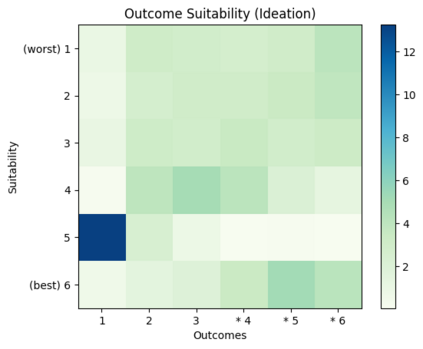

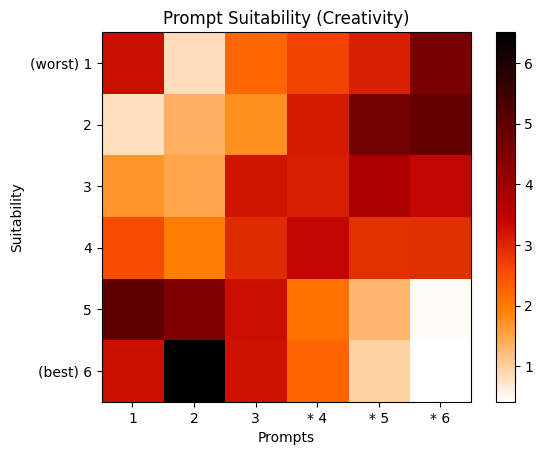

Modern generative language models can interpret input strings as instructions, or prompts, and carry out tasks based on them. Many approaches to prompting and pre-training these models involve the automated generation of these prompts: meta-prompting, or prompting to obtain prompts. We propose a theoretical framework based on category theory to generalize and describe these approaches. This framework is flexible enough to account for stochasticity, and allows us to obtain formal results on task agnosticity and the equivalence of various meta-prompting approaches. Experimentally, we test our framework in two active areas of model research: creativity and ideation. We find that user preference strongly favors (p < 0.01) the prompts generated under meta-prompting, as well as their corresponding outputs, over a series of hardcoded baseline prompts that include the original task definition. Using our framework, we argue that meta-prompting is more effective than basic prompting at generating desirable outputs.
