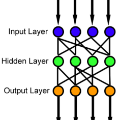

We present a derivation of the gradients of feedforward neural networks using Fréchet calculus that is arguably more compact than those usually presented in the literature. We first derive the gradients for ordinary neural networks operating on vectorial data and show how the resulting formulas yield a simple and efficient algorithm for computing a neural network's gradients. We then show how our analysis generalizes to a broader class of neural network architectures, including, but not limited to, convolutional networks.
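The abstract does not reproduce the derivation, so the following is only a minimal sketch of standard reverse-mode differentiation (backpropagation) for a fully connected network, not the authors' own algorithm. It assumes tanh activations, a squared-error loss, and layers of the form `a_l = tanh(W_l a_{l-1} + b_l)`; all function names are hypothetical. Each backward step applies the adjoint of one layer's Fréchet derivative to the incoming gradient, and a central finite difference is included to check the result.

```python
import math
import random

# Hypothetical sketch. Assumptions: tanh activations, squared-error loss,
# plain Python lists instead of a matrix library.

def tanh_prime(z):
    t = math.tanh(z)
    return 1.0 - t * t  # derivative of tanh

def matvec(W, x):
    # W x, where W has one row per output unit
    return [sum(w * xi for w, xi in zip(row, x)) for row in W]

def mat_t_vec(W, g):
    # W^T g
    return [sum(W[i][j] * g[i] for i in range(len(W))) for j in range(len(W[0]))]

def forward(params, x):
    """Return activations a_0..a_L and pre-activations z_1..z_L."""
    acts, pres = [x], []
    for W, b in params:
        z = [zi + bi for zi, bi in zip(matvec(W, acts[-1]), b)]
        pres.append(z)
        acts.append([math.tanh(zi) for zi in z])
    return acts, pres

def loss(params, x, y):
    out = forward(params, x)[0][-1]
    return 0.5 * sum((o - t) ** 2 for o, t in zip(out, y))

def gradients(params, x, y):
    """Return [(dL/dW_l, dL/db_l) for l = 1..L] via a reverse pass."""
    acts, pres = forward(params, x)
    g = [o - t for o, t in zip(acts[-1], y)]  # dL/da_L for squared error
    grads = []
    for (W, b), z, a_prev in zip(reversed(params), reversed(pres), reversed(acts[:-1])):
        # adjoint of h -> tanh'(z) * h (elementwise): gives dL/dz_l
        delta = [gi * tanh_prime(zi) for gi, zi in zip(g, z)]
        # adjoint of H -> H a_prev (Frobenius inner product): delta a_prev^T
        grad_W = [[d * a for a in a_prev] for d in delta]
        grads.append((grad_W, delta))  # dL/db_l equals delta
        # adjoint of h -> W h: W^T delta, i.e. dL/da_{l-1}
        g = mat_t_vec(W, delta)
    return list(reversed(grads))

def finite_difference(params, x, y, layer, i, j, eps=1e-6):
    """Central-difference estimate of dL/dW[layer][i][j], for checking only."""
    W = params[layer][0]
    orig = W[i][j]
    W[i][j] = orig + eps
    lp = loss(params, x, y)
    W[i][j] = orig - eps
    lm = loss(params, x, y)
    W[i][j] = orig  # restore the weight
    return (lp - lm) / (2 * eps)

random.seed(0)
sizes = [3, 4, 2]  # input, hidden, output widths (arbitrary example)
params = [([[random.uniform(-1, 1) for _ in range(n_in)] for _ in range(n_out)],
           [random.uniform(-1, 1) for _ in range(n_out)])
          for n_in, n_out in zip(sizes[:-1], sizes[1:])]
x, y = [0.5, -0.2, 0.1], [0.3, -0.7]
grads = gradients(params, x, y)
print(abs(finite_difference(params, x, y, 0, 1, 2) - grads[0][0][1][2]) < 1e-6)
```

Plain lists keep the sketch dependency-free; a practical implementation would use a matrix library. The convolutional generalization mentioned in the abstract is not covered here.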
