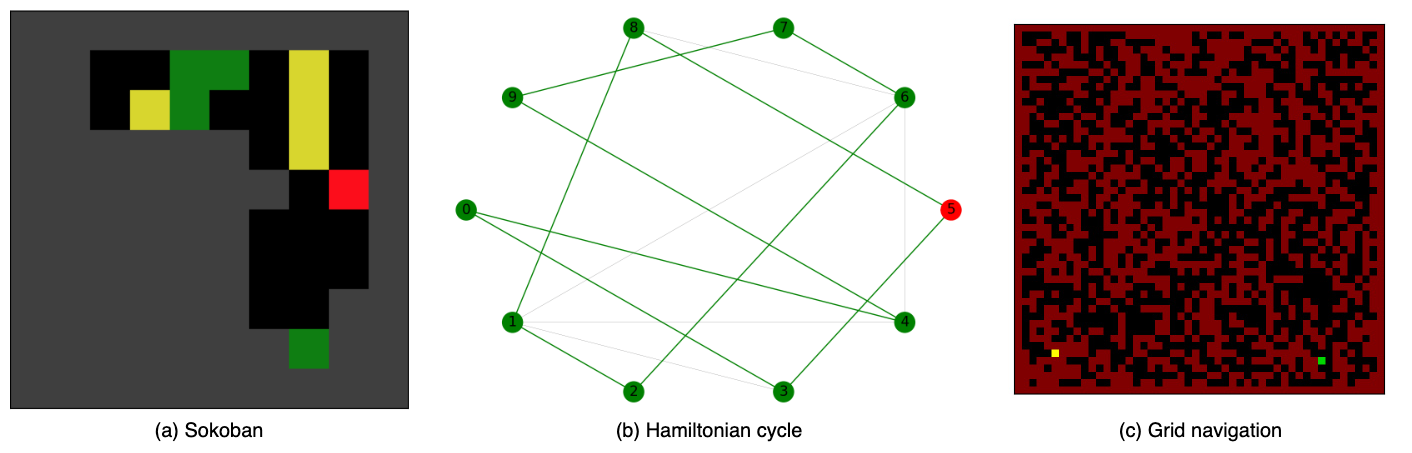

A tree-based online search algorithm iteratively simulates trajectories and updates Q-value information on a set of states represented by a tree structure. Alternatively, policy gradient based online search algorithms update the information obtained from simulated trajectories directly onto the parameters of the policy and has been found to be effective. While tree-based methods limit the updates from simulations to the states that exist in the tree and do not interpolate the information to nearby states, policy gradient search methods do not do explicit exploration. In this paper, we show that it is possible to combine and leverage the strengths of these two methods for improved search performance. We examine the key reasons behind the improvement and propose a simple yet effective online search method, named Exploratory Policy Gradient Search (ExPoSe), that updates both the parameters of the policy as well as search information on the states in the trajectory. We conduct experiments on complex planning problems, which include Sokoban and Hamiltonian cycle search in sparse graphs and show that combining exploration with policy gradient improves online search performance.

翻译:以树为基础的在线搜索算法迭接地模拟了轨迹并更新了以树结构为代表的一组状态的Q值信息。 或者,基于政策梯度的在线搜索算法将从模拟轨迹直接获得的信息更新到政策参数上,并被认为行之有效。虽然基于树的方法将更新从模拟到树上存在的状态,并且不将信息插到邻近各州,但政策梯度搜索方法并不进行明确的探索。在本文中,我们表明有可能将这两种方法的优势结合起来并加以利用,以提高搜索性能。我们研究了改进的关键原因,并提出了一个简单而有效的在线搜索方法,名为探索性政策梯度搜索(ExPOSe),该方法既更新了政策参数,也更新了轨迹中状态的搜索信息。我们在复杂的规划问题上进行了实验,其中包括在稀少的图表中进行索科班和汉密尔顿周期搜索,并表明将探索与政策梯度相结合可以改进在线搜索性能。