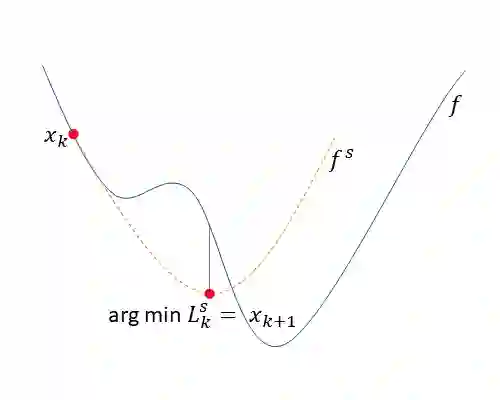

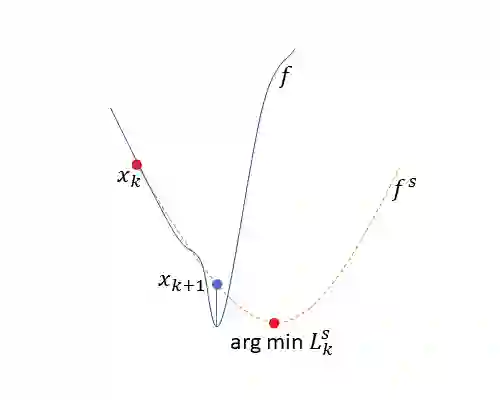

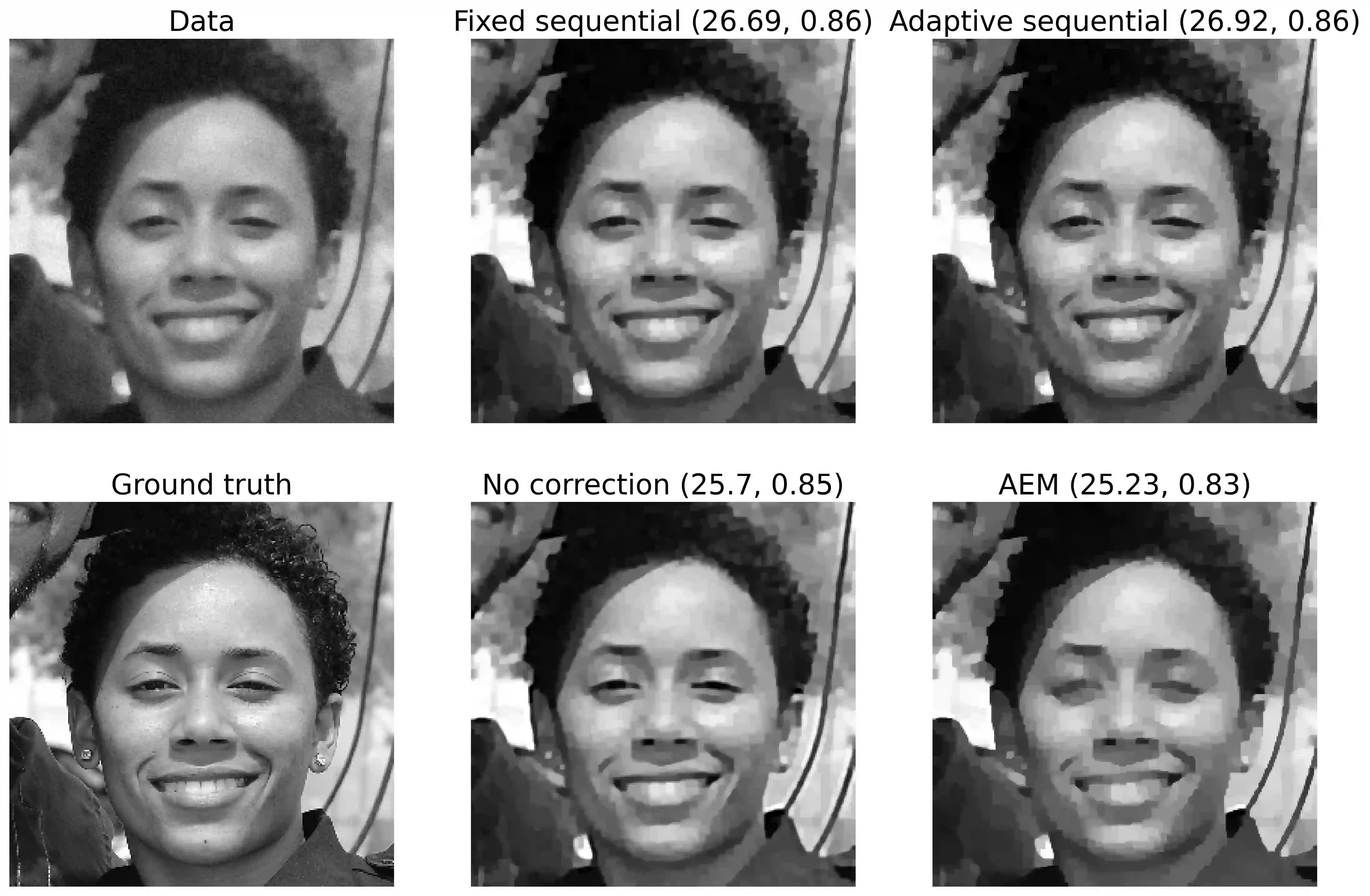

Inverse problems are often solved with optimization techniques. When the underlying model is linear, first-order gradient methods are usually sufficient. With nonlinear models, the objective is typically nonconvex, so one must often resort to second-order methods, which are computationally more expensive. In this work we approximate a nonlinear model with a linear one and correct the resulting approximation error. We develop a sequential method that iteratively solves a linear inverse problem and updates the approximation error by evaluating it at the new solution. We consider separately the case where the approximation is fixed over the iterations and the case where it is adaptive. In the fixed case, we show theoretically under which assumptions the sequence converges. In the adaptive case, considering in particular approximation by a first-order Taylor expansion, we show that the sequence converges to a neighborhood of a local minimizer of the objective function with respect to the exact model. Furthermore, we show that for quadratic objective functions the sequence corresponds to the Gauss-Newton method. Finally, we present numerical results that are superior to those of the conventional model correction method. We also show that a fixed approximation can provide competitive results with a considerable computational speed-up.
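The sequential scheme described above can be illustrated with a minimal NumPy sketch. It is not the authors' implementation. It assumes a Tikhonov-regularized least-squares objective $\tfrac12\|F(x)-y\|^2 + \tfrac{\alpha}{2}\|x\|^2$, a toy forward model $F(x) = Mx + \beta x^3$, and the hypothetical helpers `model_correction` and `gauss_newton_step`. Each iteration solves the linear problem with model $Ax + e$, then re-evaluates the approximation error $e = F(x) - Ax$ at the new iterate. In the adaptive variant, $A$ is the Jacobian at the current iterate, that is, a first-order Taylor expansion, and one iteration reproduces one Gauss-Newton step.

```python
import numpy as np


def model_correction(F, A_of, y, x0, alpha=1e-2, n_iter=50, adaptive=False):
    """Hypothetical sketch of sequential model correction.

    Each iteration solves the linear Tikhonov problem
        min_x 0.5*||A x + e - y||^2 + 0.5*alpha*||x||^2
    in closed form, then re-evaluates the approximation error e = F(x) - A x
    at the new iterate. With adaptive=False, A = A_of(x0) stays fixed; with
    adaptive=True, A is re-evaluated at every iterate (e.g. the Jacobian).
    """
    x = np.asarray(x0, dtype=float)
    eye = np.eye(x.size)
    A = A_of(x)
    e = F(x) - A @ x  # approximation error of the linear model at x
    for _ in range(n_iter):
        # Normal equations of the linear, regularized least-squares problem.
        x = np.linalg.solve(A.T @ A + alpha * eye, A.T @ (y - e))
        if adaptive:
            A = A_of(x)  # first-order Taylor expansion around the new iterate
        e = F(x) - A @ x  # update the approximation error at the new solution
    return x


def gauss_newton_step(F, J, y, x, alpha=1e-2):
    """One Gauss-Newton step for 0.5*||F(x) - y||^2 + 0.5*alpha*||x||^2."""
    Jx = J(x)
    grad = Jx.T @ (F(x) - y) + alpha * x
    return x - np.linalg.solve(Jx.T @ Jx + alpha * np.eye(x.size), grad)


# Toy nonlinear forward model (an assumption for illustration only).
rng = np.random.default_rng(0)
n, beta, alpha = 5, 0.05, 1e-2
M = 2.0 * np.eye(n) + 0.1 * rng.standard_normal((n, n))


def F(x):
    return M @ x + beta * x**3


def J(x):
    # Jacobian of F at x.
    return M + np.diag(3.0 * beta * x**2)


x_true = rng.uniform(-1.0, 1.0, n)
y = F(x_true)

x0 = np.zeros(n)  # J(x0) = M, so the fixed variant uses A = M throughout
x_fixed = model_correction(F, J, y, x0, alpha, n_iter=200, adaptive=False)
x_adapt = model_correction(F, J, y, x0, alpha, n_iter=50, adaptive=True)

# Adaptive Taylor variant: one iteration coincides with one Gauss-Newton step.
x1 = 0.5 * np.ones(n)
step_mc = model_correction(F, J, y, x1, alpha, n_iter=1, adaptive=True)
step_gn = gauss_newton_step(F, J, y, x1, alpha)
print(np.allclose(step_mc, step_gn))
```

The script prints `True`. With $A_k = J(x_k)$ and $e_k = F(x_k) - J(x_k)x_k$, the linear subproblem is exactly the Gauss-Newton linearization of the regularized residual. In the fixed variant, a limit point satisfies $A^\top(F(x)-y) + \alpha x = 0$ instead of the exact stationarity condition $J(x)^\top(F(x)-y) + \alpha x = 0$. The two conditions coincide only where $J(x) = A$, which illustrates why the adaptive variant targets a minimizer of the exact objective.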

翻译:在很多情况下,问题都是通过优化技术解决的。当基础模型是线性时,一级梯度方法通常就足够了。在非线性模型中,由于不精密,人们往往必须使用计算成本更高的二阶方法。在这项工作中,我们的目标是将非线性模型与线性模型相近,并纠正由此产生的近似错误。我们开发了一种迭代方法,通过在新的解决方案中评估,解决线性反问题并更新近似错误。我们分别考虑近似在迭代上固定且近似适应性强的情况。在固定的模型中,我们从理论上展示了序列交汇的假设。在适应性案例中,我们特别考虑到一级泰勒扩展的近似特例,我们显示该序列与精确模型目标函数的局部最小化点相交汇。此外,我们用四端目标函数显示序列与Gaus-Newton方法相匹配。我们展示的数字结果优于常规模型校正方法。我们还显示,固定近似近似可提供具有相当计算速度的竞争性的结果。