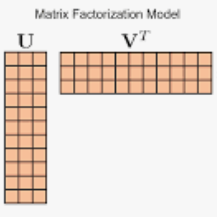

Nonnegative Matrix Factorization is an important tool in unsupervised machine learning to decompose a data matrix into a product of parts that are often interpretable. Many algorithms have been proposed during the last three decades. A well-known method is the Multiplicative Updates algorithm proposed by Lee and Seung in 2002. Multiplicative updates have many interesting features: they are simple to implement and can be adapted to popular variants such as sparse Nonnegative Matrix Factorization, and, according to recent benchmarks, is state-of-the-art for many problems where the loss function is not the Frobenius norm. In this manuscript, we propose to improve the Multiplicative Updates algorithm seen as an alternating majorization minimization algorithm by crafting a tighter upper bound of the Hessian matrix for each alternate subproblem. Convergence is still ensured and we observe in practice on both synthetic and real world dataset that the proposed fastMU algorithm is often several orders of magnitude faster than the regular Multiplicative Updates algorithm, and can even be competitive with state-of-the-art methods for the Frobenius loss.

翻译:非负矩阵分解是无监督机器学习中的重要工具,可以将数据矩阵分解为往往可以解释的部分的乘积。在过去的三十年中,许多算法已经被提出。著名的方法是Lee和Seung于2002年提出的乘性更新算法。乘性更新具有许多有趣的特性:它们易于实现,可适应流行的变体,如稀疏非负矩阵分解。根据最近的基准测试,它是许多损失函数不是Frobenius范数的问题的最先进方法。在本文中,我们提议改进被视为交替Majorization-Minimization算法的乘性更新算法,通过为每个交替子问题构造更紧的Hessian矩阵上界。仍然确保收敛,在合成数据集和现实世界数据集上的实践中,我们观察到所提出的fastMU算法通常比常规乘性更新算法快几个数量级,甚至可以与Frobenius损失的最先进方法竞争。