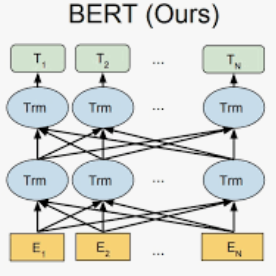

Recent progress in scaling up large language models has shown impressive capabilities in few-shot learning across a wide range of text-based tasks. However, a key limitation is that these language models fundamentally lack visual perception, a crucial attribute for extending them to interact with the real world and solve vision tasks such as visual question answering and robotics. Prior works have largely connected images to text through pretraining and/or fine-tuning on curated image-text datasets, which can be a costly process. To address this limitation, we propose a simple yet effective approach called Language-Quantized AutoEncoder (LQAE), a modification of VQ-VAE that learns to align text-image data in an unsupervised manner by leveraging pretrained language models (e.g., BERT, RoBERTa). Our main idea is to encode images as sequences of text tokens by directly quantizing image embeddings using a pretrained language codebook. We then apply random masking followed by a BERT model, and have the decoder reconstruct the original image from the BERT-predicted text token embeddings. By doing so, LQAE learns to represent similar images with similar clusters of text tokens, thereby aligning the two modalities without the use of aligned text-image pairs. This enables few-shot image classification with large language models (e.g., GPT-3) as well as linear classification of images based on BERT text features. To the best of our knowledge, this is the first work that uses unaligned images for multimodal tasks by leveraging the power of pretrained language models.
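
The quantization and masking steps described above can be illustrated with a minimal sketch. It is not the authors' implementation: the squared-L2 nearest-neighbor lookup follows the usual VQ-VAE convention and is an assumption here, the random `codebook` is a stand-in for frozen pretrained token embeddings, and the names `quantize_to_codebook`, `random_mask`, `mask_id`, and `mask_ratio` (with its value of 0.5) are hypothetical.

```python
import numpy as np


def quantize_to_codebook(image_embeddings, codebook):
    """Return, for each image embedding, the index of the nearest codebook row.

    Assumption: nearest means smallest squared L2 distance, as in VQ-VAE.
    """
    # ||a - b||^2 = ||a||^2 - 2 a.b + ||b||^2, computed for all pairs at once.
    a2 = (image_embeddings ** 2).sum(axis=1, keepdims=True)  # (patches, 1)
    b2 = (codebook ** 2).sum(axis=1)                         # (vocab,)
    distances = a2 - 2.0 * image_embeddings @ codebook.T + b2
    return distances.argmin(axis=1)


def random_mask(token_ids, mask_id, mask_ratio, rng):
    """Replace a random subset of token ids with mask_id; also return the mask."""
    mask = rng.random(token_ids.shape) < mask_ratio
    return np.where(mask, mask_id, token_ids), mask


rng = np.random.default_rng(0)
vocab_size, dim, num_patches = 100, 16, 8

# Stand-in for a frozen pretrained language-model embedding table (assumption).
codebook = rng.normal(size=(vocab_size, dim))

# Synthetic "image embeddings": codebook rows plus small noise, so the
# expected token ids are known in advance.
true_ids = rng.integers(0, vocab_size, size=num_patches)
image_embeddings = codebook[true_ids] + 0.01 * rng.normal(size=(num_patches, dim))

token_ids = quantize_to_codebook(image_embeddings, codebook)

# mask_id is a hypothetical id placed outside the vocabulary for illustration.
masked_ids, mask = random_mask(token_ids, mask_id=vocab_size, mask_ratio=0.5, rng=rng)
```

In the full method, the masked token sequence would be passed through a BERT model and a decoder would reconstruct the image from the predicted token embeddings; those components are omitted here because the abstract does not specify their details.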
