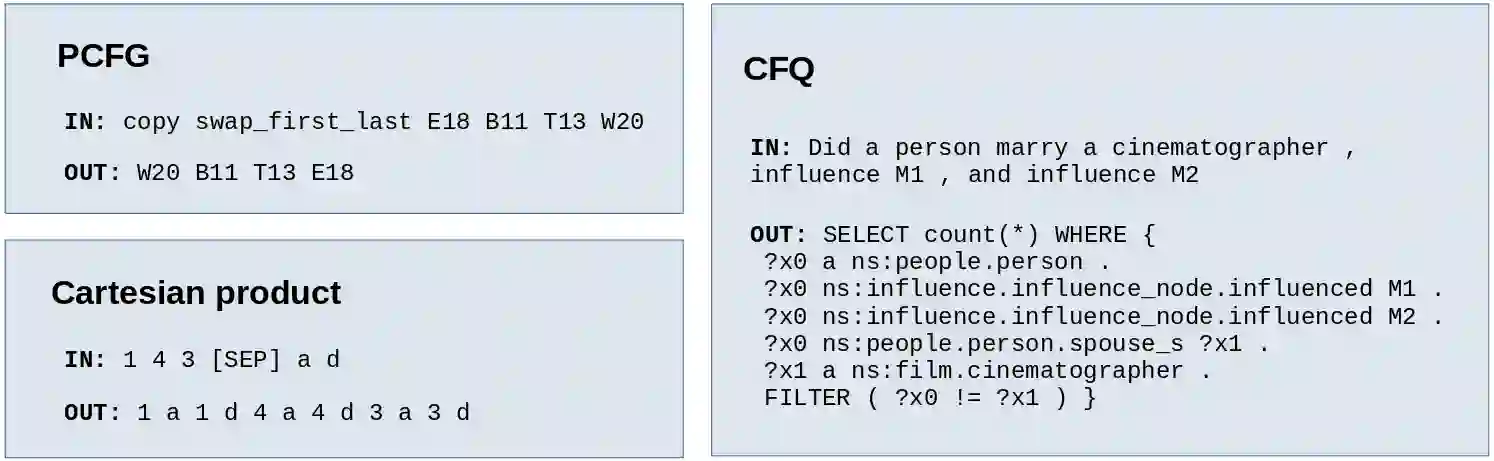

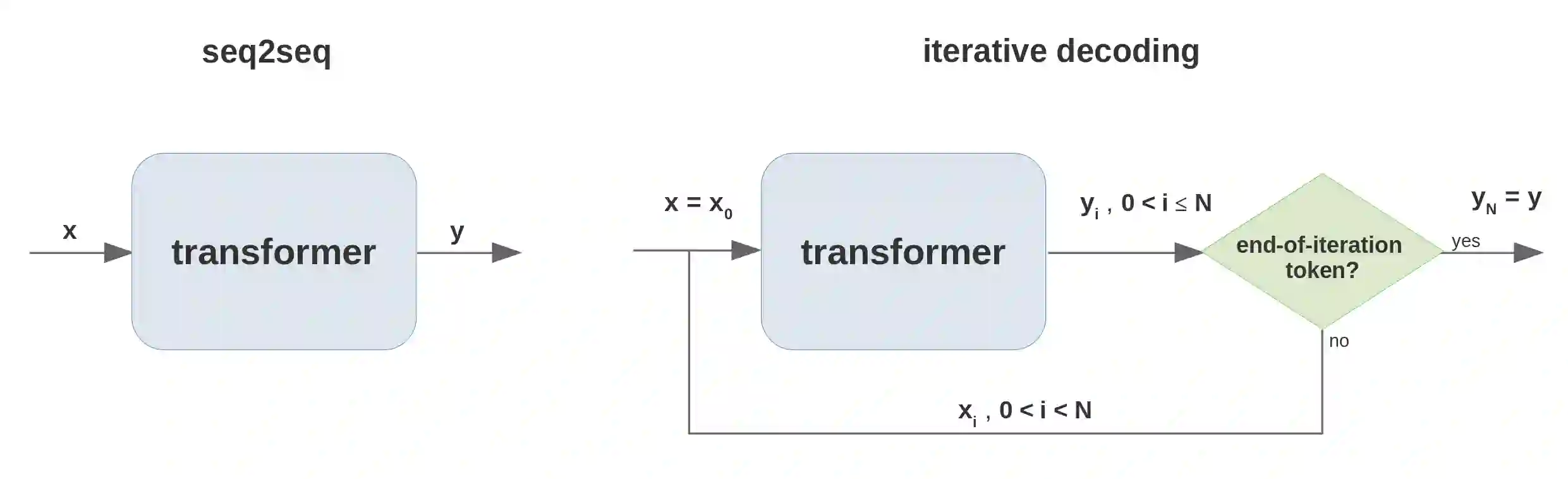

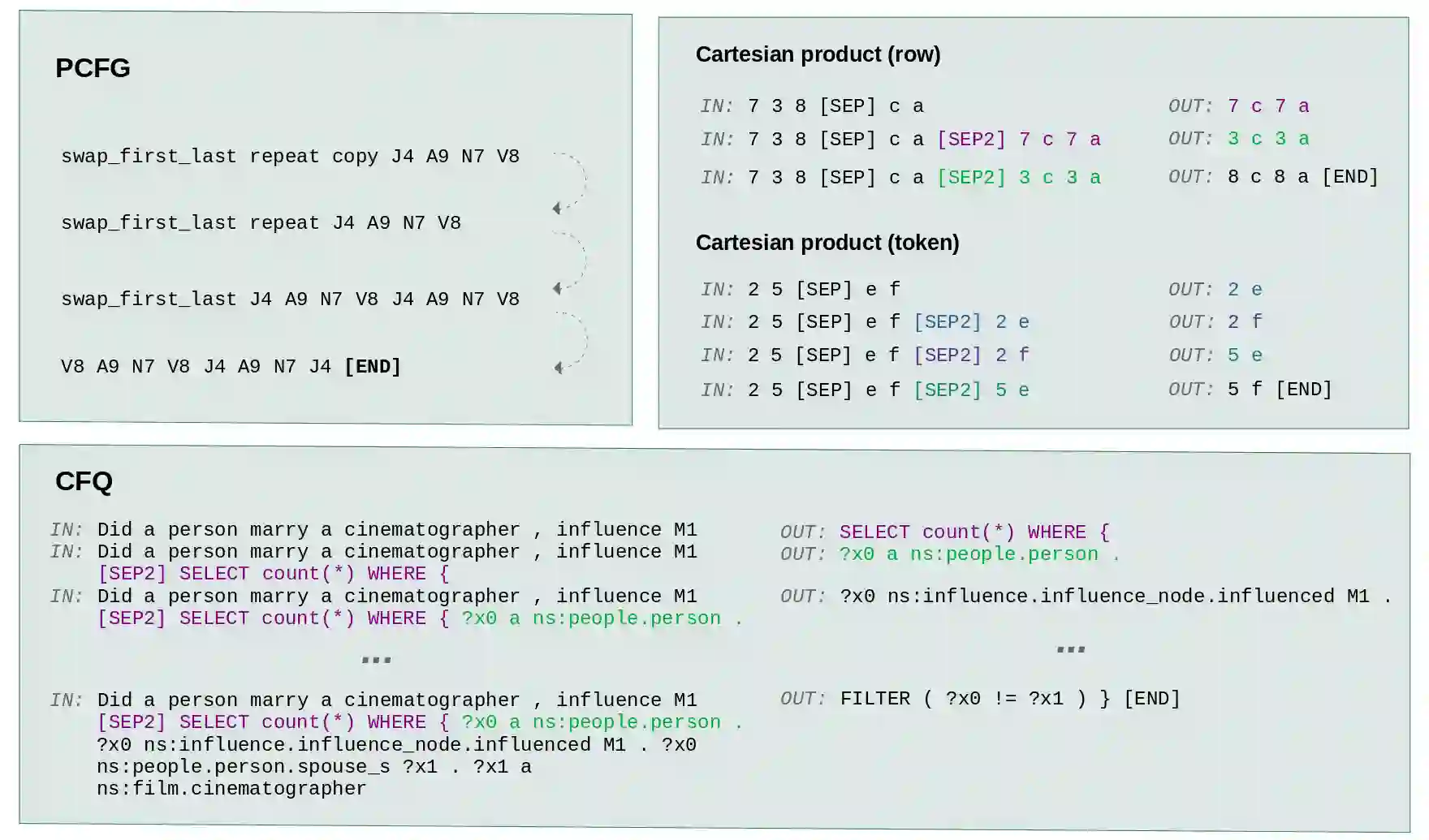

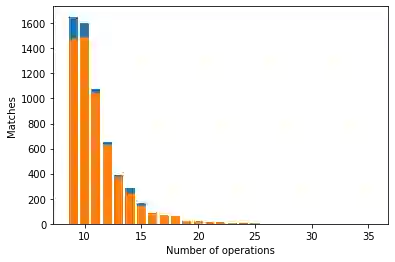

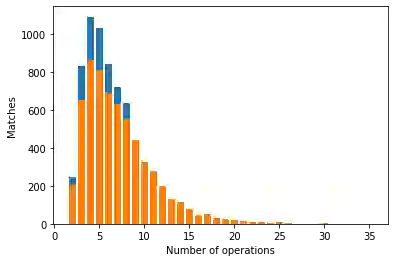

Deep learning models generalize well to in-distribution data but struggle to generalize compositionally, i.e., to combine a set of learned primitives to solve more complex tasks. In particular, in sequence-to-sequence (seq2seq) learning, transformers are often unable to predict correct outputs for examples even marginally longer than those seen during training. This paper introduces iterative decoding, an alternative to seq2seq learning that (i) improves transformer compositional generalization and (ii) provides evidence that, in general, seq2seq transformers do not learn iterations that are not unrolled. Inspired by the idea of compositionality (that complex tasks can be solved by composing basic primitives), training examples are broken down into a sequence of intermediate steps that the transformer then learns iteratively. At inference time, the intermediate outputs are fed back to the transformer as intermediate inputs until an end-of-iteration token is predicted. Through numerical experiments, we show that transformers trained via iterative decoding outperform their seq2seq counterparts on the PCFG dataset, and solve the problem of calculating Cartesian products between vectors longer than those seen during training with 100% accuracy, a task at which seq2seq models have been shown to fail. We also illustrate a limitation of iterative decoding, specifically, that it can make sorting harder to learn on the CFQ dataset.
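To make the inference loop concrete, here is a minimal sketch of iterative decoding on the Cartesian-product task. It assumes a hypothetical string format for intermediate states (`"<remaining first vector> | <second vector> | <pairs so far>"`) and uses a hand-written `cartesian_step` function as a stand-in for the trained transformer; the `EOI` token, the state format, and the names `cartesian_step`, `unroll`, and `iterative_decode` are illustrative assumptions, not the paper's implementation. `unroll` shows how one example could be broken into (input, target) training pairs, and `iterative_decode` feeds each output back as the next input until the end-of-iteration token appears.

```python
from typing import Callable, List, Tuple

EOI = "<eoi>"  # hypothetical end-of-iteration token


def cartesian_step(state: str) -> str:
    """One intermediate step (stand-in for the model): pair the next element of
    the first vector with every element of the second vector.
    Hypothetical state format: "<remaining a> | <b> | <pairs so far>"."""
    rest, b, done = (part.split() for part in state.split("|"))
    if not rest:
        return " ".join(done) + " " + EOI
    head, tail = rest[0], rest[1:]
    done = done + [f"({head},{y})" for y in b]
    if not tail:
        # Nothing left to process: emit the final output plus the end token.
        return " ".join(done) + " " + EOI
    return f"{' '.join(tail)} | {' '.join(b)} | {' '.join(done)}"


def unroll(step: Callable[[str], str], x: str, max_iters: int = 100) -> List[Tuple[str, str]]:
    """Break one example into (input, target) pairs, one per intermediate step."""
    pairs = []
    current = x
    for _ in range(max_iters):
        out = step(current)
        pairs.append((current, out))
        if out.endswith(EOI):
            return pairs
        current = out
    raise RuntimeError("no end-of-iteration token within max_iters")


def iterative_decode(step: Callable[[str], str], x: str, max_iters: int = 100) -> str:
    """Feed each intermediate output back as the next input until EOI is emitted."""
    current = x
    for _ in range(max_iters):
        out = step(current)
        if out.endswith(EOI):
            return out[: -len(EOI)].strip()
        current = out
    raise RuntimeError("no end-of-iteration token within max_iters")


if __name__ == "__main__":
    print(unroll(cartesian_step, "a b | 1 2 | "))
    print(iterative_decode(cartesian_step, "a b c | 1 2 | "))
```

Because each step performs the same small operation regardless of input length, a longer first vector only adds more iterations rather than a longer single output, which is the intuition behind the length generalization reported above.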
