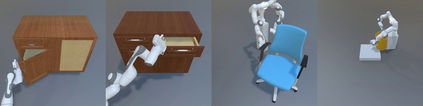

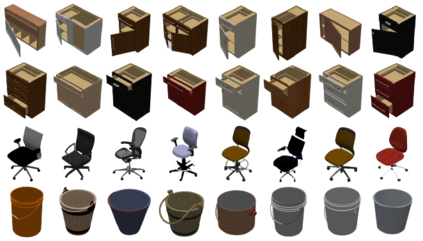

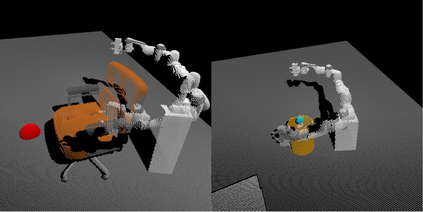

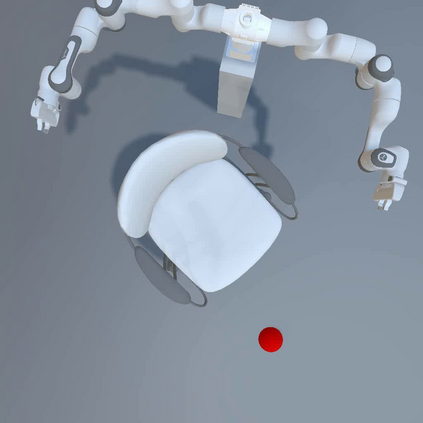

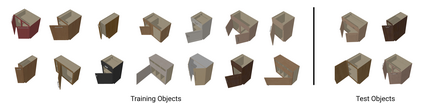

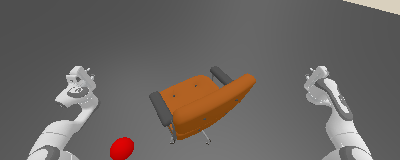

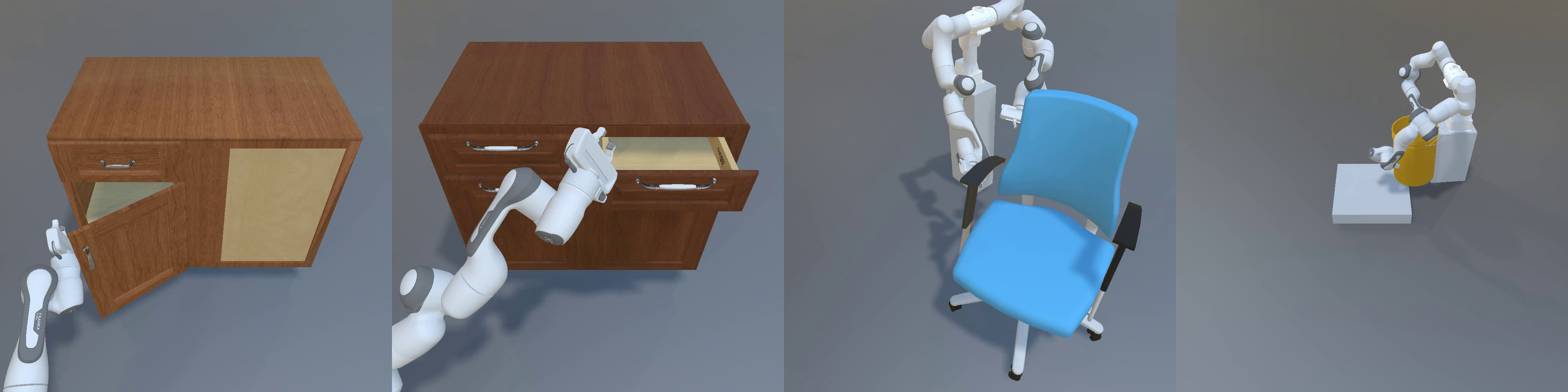

Learning generalizable manipulation skills is central to automating robotic tasks in environments with endless scene and object variations. However, existing robot learning environments are limited in both the scale and the diversity of their 3D assets (especially articulated objects), which makes it difficult to train agents and evaluate how well they generalize to novel objects. In this work, we focus on object-level generalization and propose the SAPIEN Manipulation Skill Benchmark (abbreviated as ManiSkill), a large-scale learning-from-demonstrations benchmark for articulated object manipulation with 3D visual input (point clouds and RGB-D images). ManiSkill supports object-level variation by using a rich and diverse set of articulated objects, and each task is carefully designed for learning to manipulate a single category of objects. We equip ManiSkill with a large number of high-quality demonstrations to facilitate learning-from-demonstrations approaches, and we evaluate baseline algorithms on the benchmark. We believe that ManiSkill can encourage the robot learning community to further explore learning generalizable object manipulation skills.