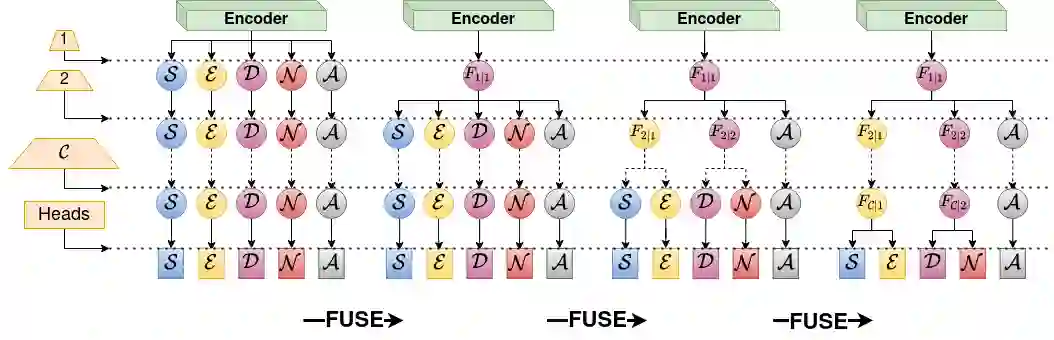

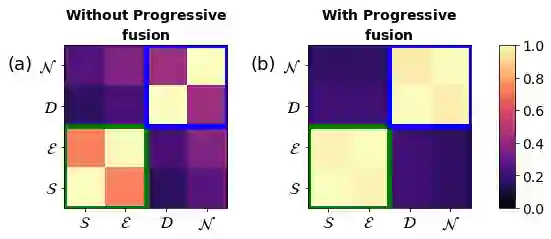

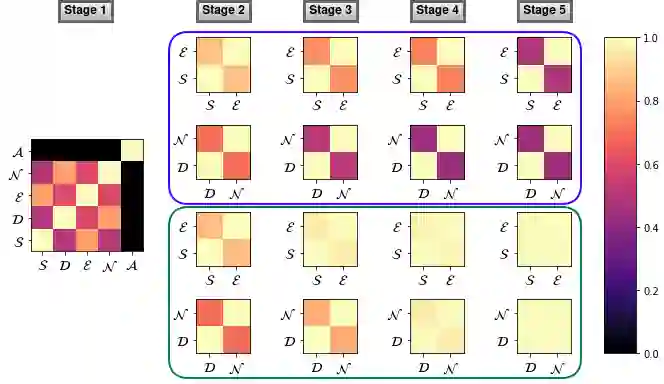

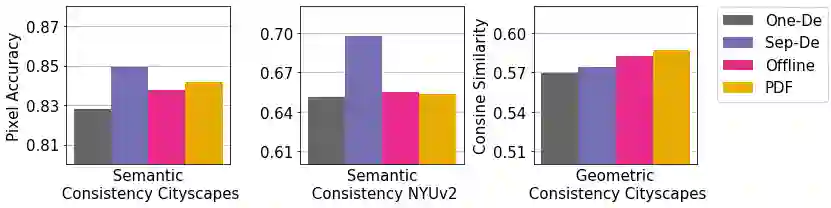

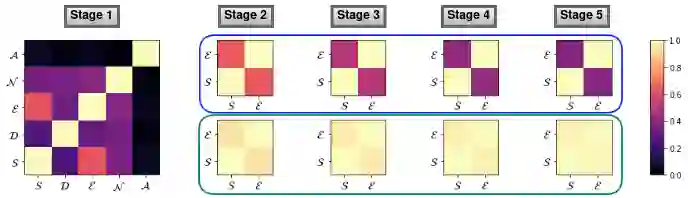

Multi-task learning of dense prediction tasks that shares both the encoder and the decoder, rather than only the encoder, is an attractive way to improve both accuracy and computational efficiency. When the tasks are similar, sharing the decoder acts as an additional inductive bias, giving the tasks more room to exchange complementary information. However, increased sharing exposes more parameters to task interference, which likely hinders both generalization and robustness. Finding effective ways to curb this interference while exploiting the inductive bias of a shared decoder remains an open challenge. To address this challenge, we propose Progressive Decoder Fusion (PDF), which progressively combines task decoders based on inter-task representation similarity. We show that this procedure leads to a multi-task network with better generalization to in-distribution and out-of-distribution data and improved robustness to adversarial attacks. Additionally, we observe that the predictions of the different tasks of this multi-task network are more consistent with each other.

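To make "inter-task representation similarity" concrete, the sketch below scores pairs of task decoders by how similar their intermediate activations are and reports the most similar pair as a candidate for fusion. The abstract does not say which similarity measure or fusion rule PDF uses, so everything here is an assumption for illustration: linear CKA stands in as the similarity measure, and the names `linear_cka`, `most_similar_pair`, and the task labels are hypothetical.

```python
from __future__ import annotations

from itertools import combinations

import numpy as np


def linear_cka(x: np.ndarray, y: np.ndarray) -> float:
    """Linear CKA between two (n_samples, n_features) activation matrices.

    Assumption: linear CKA is used only as an illustrative similarity
    measure; the source does not name the measure used by PDF.
    """
    # Center each feature column so the score ignores constant offsets.
    x = x - x.mean(axis=0, keepdims=True)
    y = y - y.mean(axis=0, keepdims=True)
    cross = np.linalg.norm(y.T @ x, ord="fro") ** 2
    norm_x = np.linalg.norm(x.T @ x, ord="fro")
    norm_y = np.linalg.norm(y.T @ y, ord="fro")
    return float(cross / (norm_x * norm_y))


def most_similar_pair(
    features: dict[str, np.ndarray],
) -> tuple[tuple[str, str], float]:
    """Return the pair of tasks whose decoder activations are most similar.

    `features` maps a (hypothetical) task name to activations collected
    from that task's decoder at one depth, on the same input samples.
    """
    scores = {
        (a, b): linear_cka(features[a], features[b])
        for a, b in combinations(sorted(features), 2)
    }
    best = max(scores, key=scores.get)
    return best, scores[best]


if __name__ == "__main__":
    # Synthetic activations: "depth" is a noisy copy of "segmentation",
    # while "normals" is unrelated to both.
    rng = np.random.default_rng(0)
    seg = rng.normal(size=(64, 8))
    feats = {
        "segmentation": seg,
        "depth": seg + 0.05 * rng.normal(size=(64, 8)),
        "normals": rng.normal(size=(64, 8)),
    }
    pair, score = most_similar_pair(feats)
    print(pair, round(score, 3))
```

Under this assumed scheme, a progressive procedure would repeat the comparison stage by stage, sharing a decoder stage between tasks whose similarity is high and keeping separate stages otherwise. The threshold and the order in which stages are visited are design choices that the abstract leaves unspecified.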