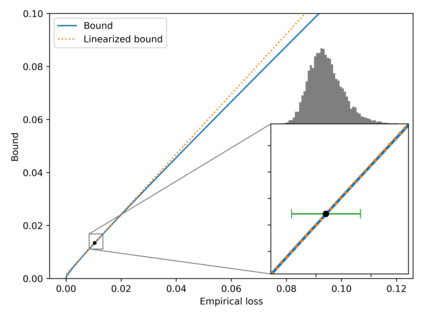

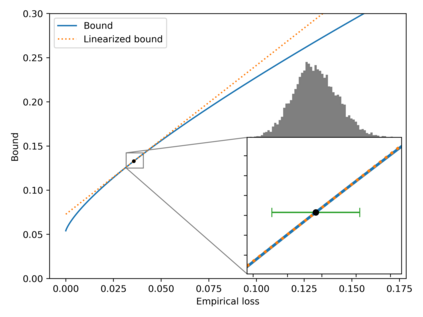

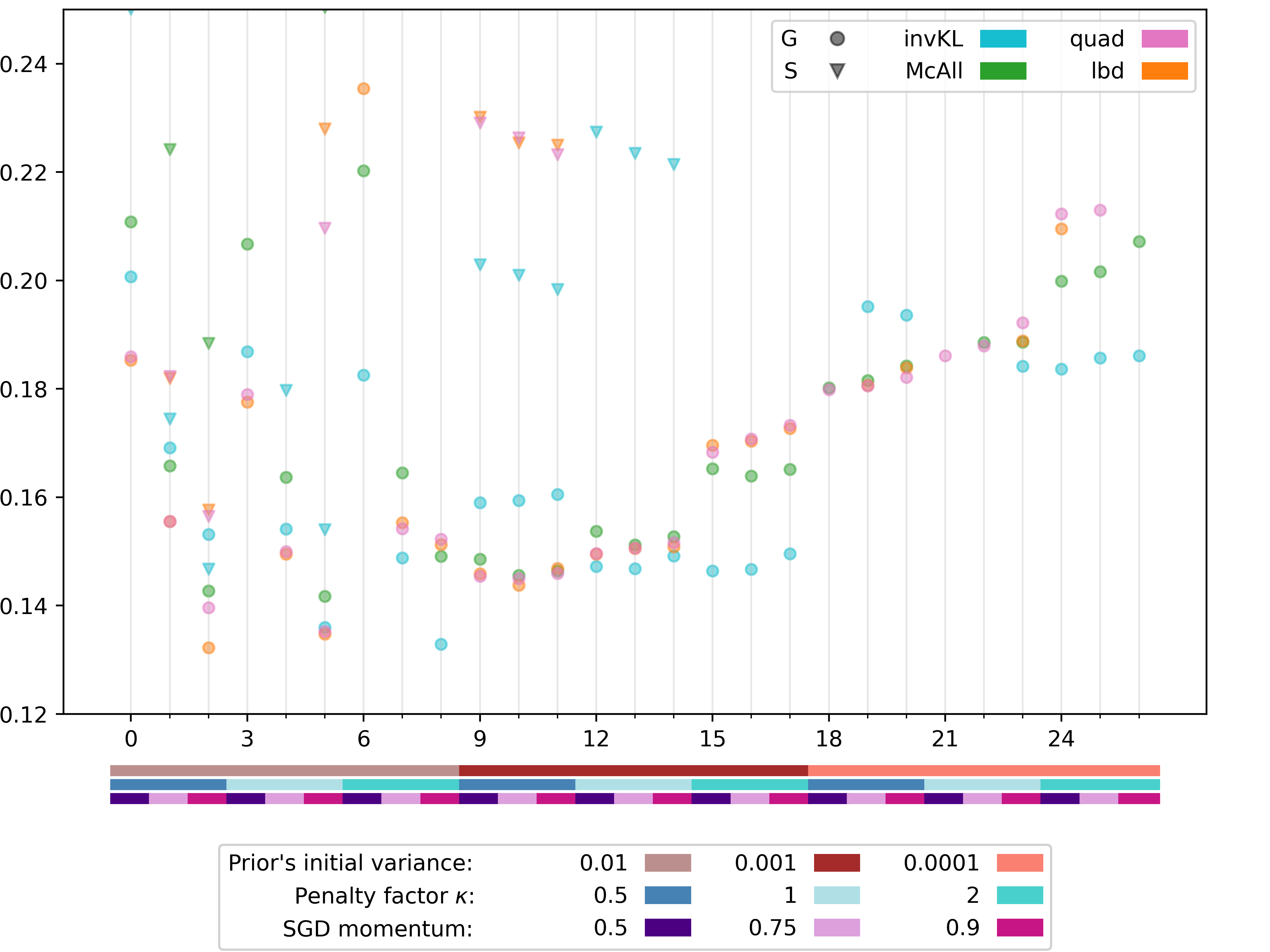

Recent studies have empirically investigated different methods to train a stochastic classifier by optimising a PAC-Bayesian bound via stochastic gradient descent. Most of these procedures need to replace the misclassification error with a surrogate loss, leading to a mismatch between the optimisation objective and the actual generalisation bound. The present paper proposes a novel training algorithm that optimises the PAC-Bayesian bound, without relying on any surrogate loss. Empirical results show that the bounds obtained with this approach are tighter than those found in the literature.
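To make the notion of a PAC-Bayesian bound concrete, the sketch below evaluates one standard bound of this family, the PAC-Bayes-kl bound in Maurer's form: with probability at least 1 − δ over a sample of size n, kl(empirical error ‖ true error) ≤ (KL(Q‖P) + ln(2√n/δ))/n. This is an illustration under stated assumptions, not the training algorithm proposed in the paper: the choice of this particular bound, the function names, and the bisection-based inversion are all assumptions made for the example. It only shows how a numerical generalisation certificate could be computed once the empirical misclassification error of the stochastic classifier and the divergence KL(Q‖P) are known.

```python
import math


def binary_kl(q: float, p: float) -> float:
    """KL divergence kl(q || p) between Bernoulli(q) and Bernoulli(p)."""
    eps = 1e-12
    p = min(max(p, eps), 1.0 - eps)  # keep the logarithms finite
    total = 0.0
    if q > 0.0:
        total += q * math.log(q / p)
    if q < 1.0:
        total += (1.0 - q) * math.log((1.0 - q) / (1.0 - p))
    return total


def kl_inverse_upper(q: float, budget: float, iters: int = 100) -> float:
    """Approximate sup{p in [q, 1] : kl(q || p) <= budget} by bisection.

    kl(q || p) is increasing in p on [q, 1], so bisection applies.
    The upper end of the bracket is returned, which keeps the result
    on the conservative side.
    """
    lo, hi = q, 1.0
    for _ in range(iters):
        mid = 0.5 * (lo + hi)
        if binary_kl(q, mid) > budget:
            hi = mid
        else:
            lo = mid
    return hi


def pac_bayes_kl_bound(emp_error: float, kl_qp: float, n: int,
                       delta: float = 0.05) -> float:
    """Upper bound on the true error of the stochastic classifier.

    emp_error: empirical misclassification error under the posterior Q
    kl_qp:     KL(Q || P) between posterior and prior
    n:         number of training examples (assumed i.i.d., n >= 8)
    delta:     allowed failure probability of the bound
    """
    budget = (kl_qp + math.log(2.0 * math.sqrt(n) / delta)) / n
    return kl_inverse_upper(emp_error, budget)


if __name__ == "__main__":
    # Hypothetical numbers, chosen only to exercise the function.
    print(pac_bayes_kl_bound(emp_error=0.02, kl_qp=500.0, n=60000))
```

The bound grows with KL(Q‖P) and shrinks with n, which is why training procedures in this area trade empirical error against the divergence from the prior.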
