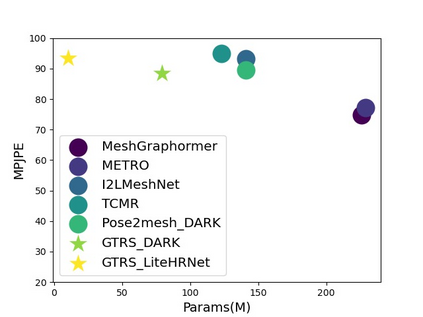

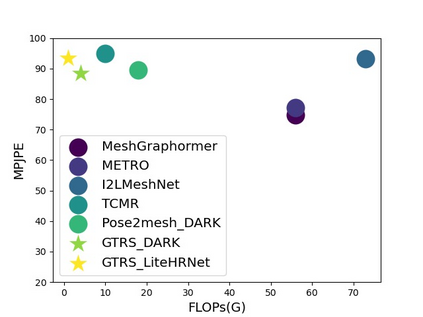

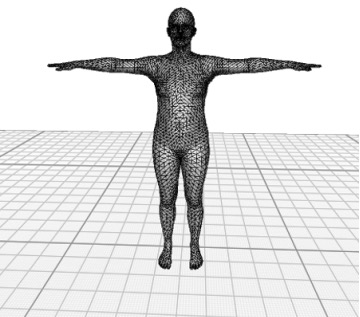

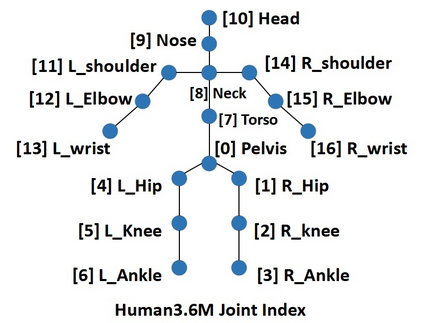

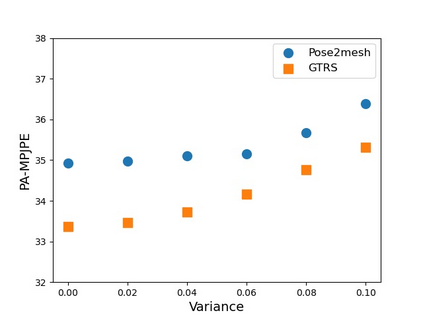

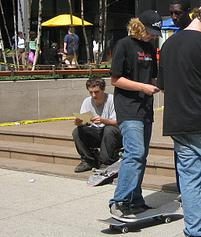

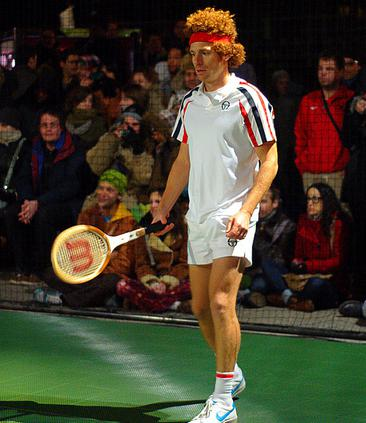

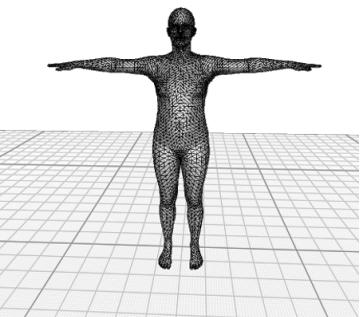

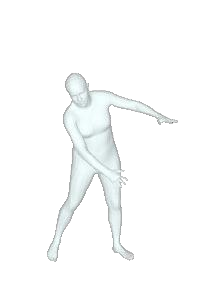

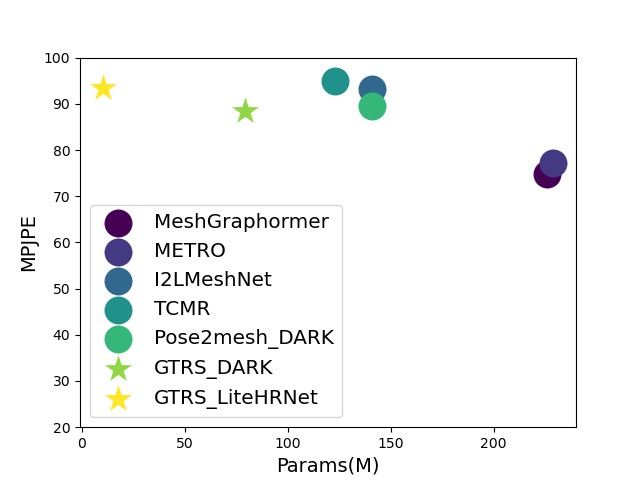

Existing deep learning-based human mesh reconstruction approaches tend to build larger networks to achieve higher accuracy. Computational complexity and model size are often neglected, even though both are key to the practical use of human mesh reconstruction models (e.g. in virtual try-on systems). In this paper, we present GTRS, a lightweight pose-based method that reconstructs a human mesh from a 2D human pose. We propose a pose analysis module that uses graph transformers to exploit structured and implicit joint correlations, and a mesh regression module that combines the extracted pose feature with the mesh template to reconstruct the final human mesh. We demonstrate the efficiency and generalization of GTRS through extensive evaluations on the Human3.6M and 3DPW datasets. In particular, on the challenging in-the-wild 3DPW dataset, GTRS achieves better accuracy than the state-of-the-art (SOTA) pose-based method Pose2Mesh while using only 10.2% of its parameters (Params) and 2.5% of its FLOPs. Code will be publicly available.
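To make the two-module idea more concrete, the following is a minimal, dependency-free sketch and not the GTRS implementation. It assumes a hypothetical 5-joint chain skeleton, random weights, a single attention head, and a toy 4-vertex template. The function names (`graph_transformer_block`, `regress_mesh`) and the choice to add predicted offsets to the template are illustrative assumptions; the abstract only states that the pose feature is combined with the mesh template. A graph convolution over the skeleton edges stands in for the structured joint correlations, and self-attention over all joint pairs stands in for the implicit ones.

```python
import math
import random

# Hypothetical 5-joint chain skeleton (an assumption, not the paper's joint set).
NUM_JOINTS = 5
EDGES = [(0, 1), (1, 2), (2, 3), (3, 4)]


def matmul(a, b):
    """Multiply two matrices stored as lists of rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]


def normalized_adjacency(num_nodes, edges):
    """Return D^-1/2 (A + I) D^-1/2 for an undirected graph."""
    a = [[1.0 if i == j else 0.0 for j in range(num_nodes)] for i in range(num_nodes)]
    for i, j in edges:
        a[i][j] = a[j][i] = 1.0
    deg = [sum(row) for row in a]
    return [[a[i][j] / math.sqrt(deg[i] * deg[j]) for j in range(num_nodes)]
            for i in range(num_nodes)]


def softmax(row):
    """Numerically stable softmax over one row of scores."""
    m = max(row)
    exps = [math.exp(v - m) for v in row]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention(x, wq, wk, wv):
    """Single-head scaled dot-product attention across all joints."""
    q, k, v = matmul(x, wq), matmul(x, wk), matmul(x, wv)
    scale = math.sqrt(len(q[0]))
    scores = [[sum(a * b for a, b in zip(qi, kj)) / scale for kj in k] for qi in q]
    weights = [softmax(r) for r in scores]
    return matmul(weights, v), weights


def graph_transformer_block(x, adj, params):
    """Graph convolution (structured) followed by attention (implicit)."""
    h = matmul(adj, matmul(x, params["w_graph"]))
    h = [[max(0.0, v) for v in row] for row in h]  # ReLU
    attended, weights = self_attention(h, params["wq"], params["wk"], params["wv"])
    out = [[a + b for a, b in zip(r1, r2)] for r1, r2 in zip(h, attended)]  # residual
    return out, weights


def regress_mesh(pose_feature, template, w_mesh):
    """Assumed combination rule: template vertices plus offsets from the pooled feature."""
    pooled = [sum(col) / len(col) for col in zip(*pose_feature)]
    offsets = matmul([pooled], w_mesh)[0]  # length is 3 * number of vertices
    return [[template[v][c] + offsets[3 * v + c] for c in range(3)]
            for v in range(len(template))]


def random_matrix(rows, cols, rng):
    return [[rng.uniform(-0.5, 0.5) for _ in range(cols)] for _ in range(rows)]


rng = random.Random(0)
dim = 8  # hypothetical feature width
pose_2d = [[rng.uniform(-1, 1), rng.uniform(-1, 1)] for _ in range(NUM_JOINTS)]
params = {
    "w_graph": random_matrix(2, dim, rng),
    "wq": random_matrix(dim, dim, rng),
    "wk": random_matrix(dim, dim, rng),
    "wv": random_matrix(dim, dim, rng),
}
adj = normalized_adjacency(NUM_JOINTS, EDGES)
features, attn = graph_transformer_block(pose_2d, adj, params)

# Toy 4-vertex template standing in for a real body mesh template.
template = [[0.0, 0.0, 0.0], [1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
mesh = regress_mesh(features, template, random_matrix(dim, 3 * len(template), rng))
print(len(mesh), len(mesh[0]))  # prints "4 3"
```

The weights here are random, so the output mesh is meaningless; the sketch only shows how data would flow from a 2D pose through a graph-plus-attention block to per-vertex 3D coordinates. A trained model would learn these weights and use a full body template with far more vertices.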
