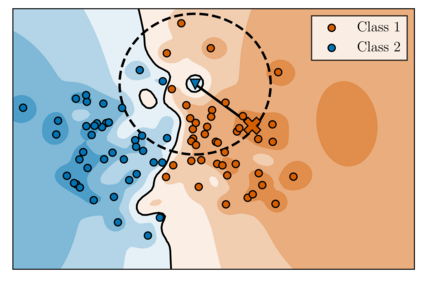

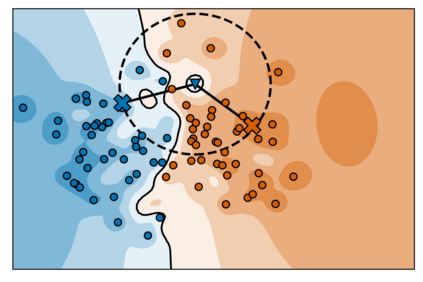

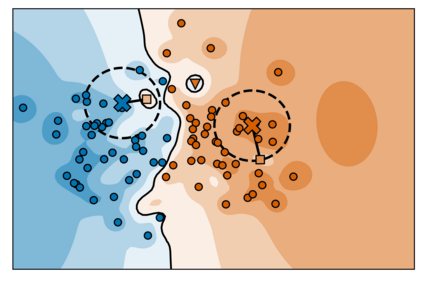

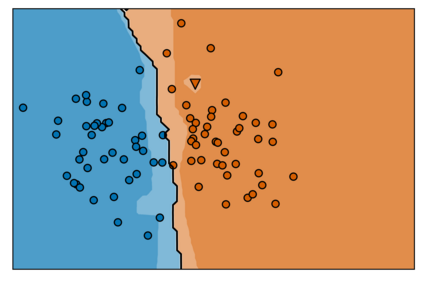

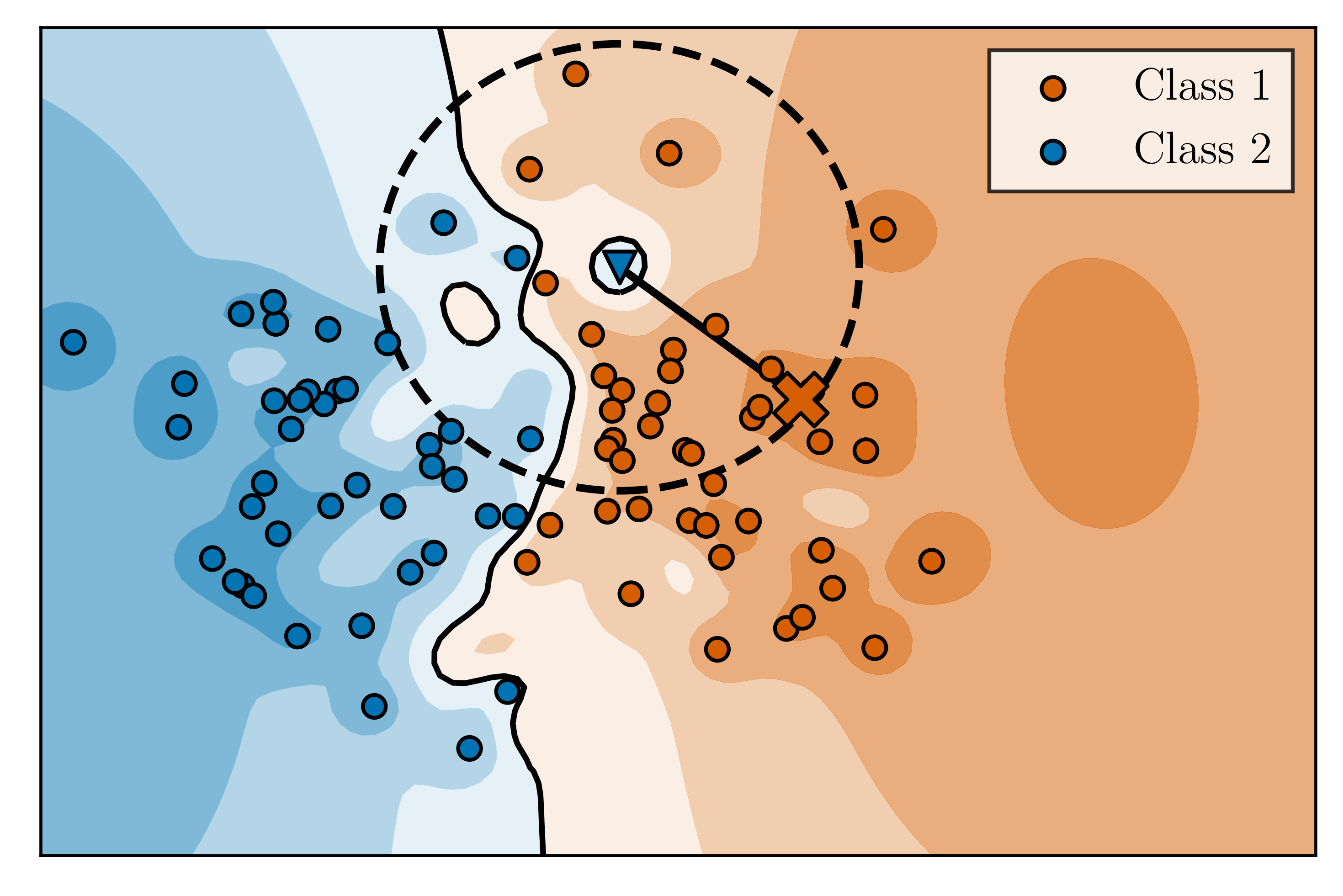

The reliability of neural networks is essential for their use in safety-critical applications. Existing approaches generally aim at improving the robustness of neural networks to either real-world distribution shifts (e.g., common corruptions and perturbations, spatial transformations, and natural adversarial examples) or worst-case distribution shifts (e.g., optimized adversarial examples). In this work, we propose the Decision Region Quantification (DRQ) algorithm to improve the robustness of any differentiable pre-trained model against both real-world and worst-case distribution shifts in the data. DRQ analyzes the robustness of local decision regions in the vicinity of a given data point to make more reliable predictions. We theoretically motivate the DRQ algorithm by showing that it effectively smooths spurious local extrema in the decision surface. Furthermore, we propose an implementation using targeted and untargeted adversarial attacks. An extensive empirical evaluation shows that DRQ increases the robustness of adversarially and non-adversarially trained models against real-world and worst-case distribution shifts on several computer vision benchmark datasets.
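The abstract does not spell out the procedure, so the following is only a minimal sketch of one plausible reading: for each candidate class, a targeted attack explores the nearby decision region of that class, an untargeted attack then probes how easily that region can be left, and the class whose region keeps the lowest loss is predicted. Everything here is an assumption made for illustration, not the authors' implementation: the toy linear-softmax model stands in for a pre-trained network, the signed-gradient attack is a stand-in for whichever attacks the paper uses, and the names `pgd`, `drq_predict`, `eps`, `step`, and `n_steps` are hypothetical.

```python
import numpy as np

def softmax(z):
    z = z - z.max()  # shift for numerical stability
    e = np.exp(z)
    return e / e.sum()

def ce_loss_and_grad(x, y, W, b):
    """Cross-entropy of a toy linear-softmax model and its gradient w.r.t. x."""
    p = softmax(W @ x + b)
    onehot = np.zeros_like(p)
    onehot[y] = 1.0
    loss = -np.log(p[y] + 1e-12)
    grad = W.T @ (p - onehot)  # d(loss)/dx for logits W @ x + b
    return loss, grad

def pgd(x_start, center, y, W, b, eps, step, n_steps, targeted):
    """Signed-gradient attack kept inside the L-inf ball of radius eps around center."""
    x_adv = x_start.copy()
    direction = -1.0 if targeted else 1.0  # targeted: lower the loss for class y
    for _ in range(n_steps):
        _, g = ce_loss_and_grad(x_adv, y, W, b)
        x_adv = x_adv + direction * step * np.sign(g)
        x_adv = np.clip(x_adv, center - eps, center + eps)
    return x_adv

def drq_predict(x, W, b, eps=0.1, step=0.02, n_steps=10):
    """Assumed DRQ-style prediction: pick the class with the most robust nearby region."""
    scores = []
    for c in range(W.shape[0]):
        # Step 1 (assumed): move toward the decision region of class c near x.
        x_c = pgd(x, x, c, W, b, eps, step, n_steps, targeted=True)
        # Step 2 (assumed): try to leave that region again, starting from x_c.
        x_q = pgd(x_c, x_c, c, W, b, eps, step, n_steps, targeted=False)
        # A low remaining loss means the region around x_c was hard to escape.
        loss, _ = ce_loss_and_grad(x_q, c, W, b)
        scores.append(loss)
    scores = np.array(scores)
    return int(np.argmin(scores)), scores

# Usage on random toy data (3 classes, 4 input features).
rng = np.random.default_rng(0)
W, b, x = rng.normal(size=(3, 4)), rng.normal(size=3), rng.normal(size=4)
pred, scores = drq_predict(x, W, b)
```

With `eps=0.0` both attacks are clipped back to the input, so this sketch reduces to the ordinary arg-max prediction of the model; larger values of `eps` let the robustness of neighboring regions influence the decision.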
