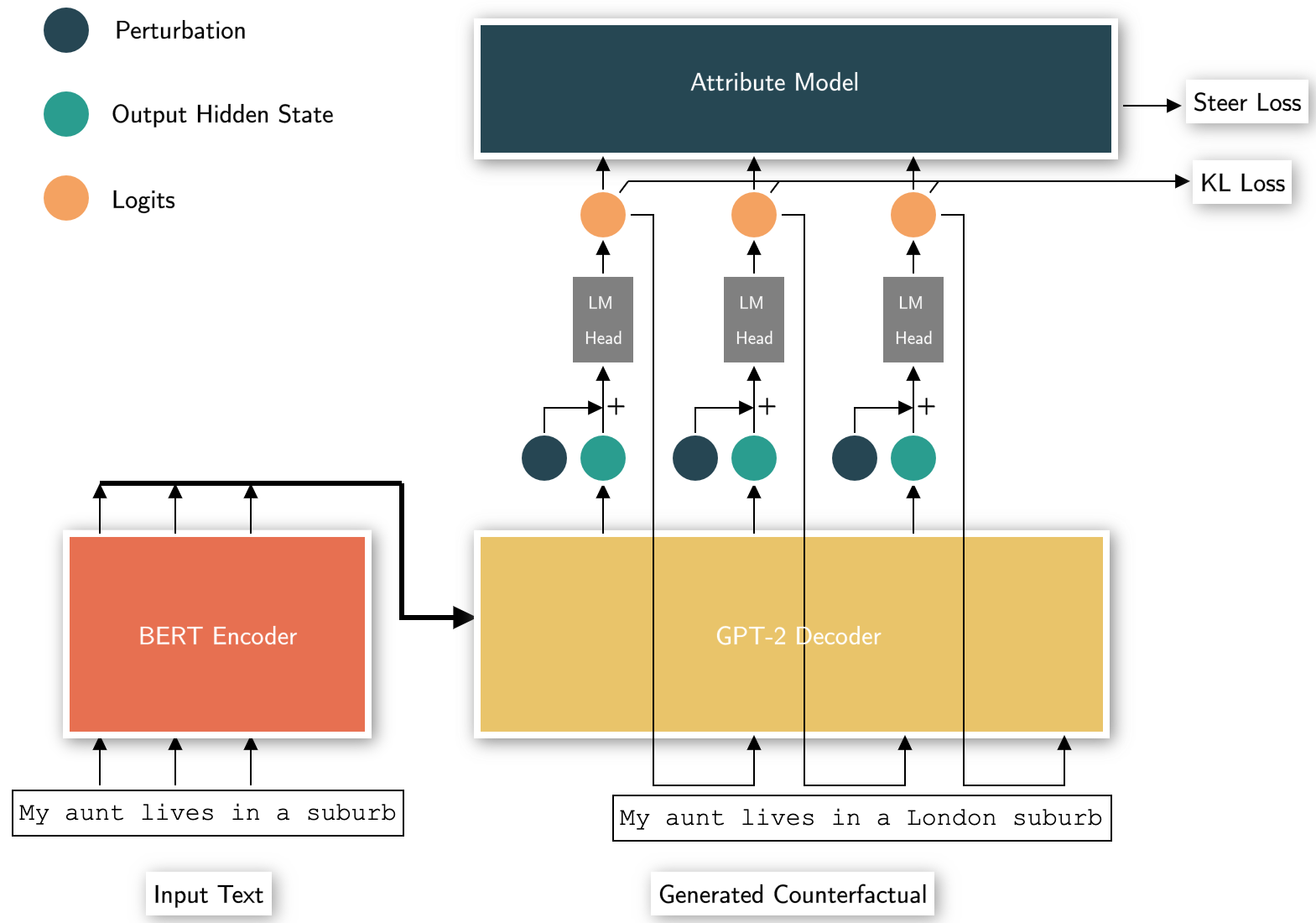

Generating counterfactual test cases is an important backbone for testing NLP models and making them as robust and reliable as traditional software. A desirable property of test-case generation is the ability to control it flexibly, so that a wide variety of failure cases can be tested, explained, and repaired in a targeted manner. Prior work has made significant progress in this direction by manually writing rules that generate controlled counterfactuals. However, this approach requires heavy manual supervision and lacks the flexibility to easily introduce new controls. Motivated by the flexibility of the plug-and-play approach of PPLM, we propose bringing the plug-and-play framework to the task of counterfactual test-case generation. We introduce CASPer, a plug-and-play counterfactual generation framework that generates test cases satisfying goal attributes on demand. Our plug-and-play model can steer the test-case generation process given any attribute model, without requiring attribute-specific training of the model. In experiments, we show that CASPer effectively generates counterfactual text that follows the steering provided by an attribute model while remaining fluent and diverse and preserving the original content. We also show that the counterfactuals generated by CASPer can be used to augment the training data, thereby fixing the test model and making it more robust.
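To make the plug-and-play idea concrete, the following is a minimal toy sketch, not CASPer's actual implementation. It assumes a PPLM-style setup in which a frozen generator exposes a hidden state and a separately trained attribute model scores that state; the hidden state is nudged by gradient ascent on the attribute log-probability, and no model weights are updated. The names `attribute_prob` and `steer_hidden`, the logistic attribute model, and all numeric values are hypothetical and chosen only for illustration.

```python
import math


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def attribute_prob(hidden, weights, bias):
    """Toy attribute model (assumption): logistic regression on the hidden state."""
    score = sum(w * h for w, h in zip(weights, hidden)) + bias
    return sigmoid(score)


def steer_hidden(hidden, weights, bias, step_size=0.5, num_steps=10):
    """Gradient ascent on log p(attribute | hidden).

    Only the hidden state changes; the attribute model's weights stay
    fixed, which is the sense in which the control is "plugged in".
    """
    steered = list(hidden)
    for _ in range(num_steps):
        p = attribute_prob(steered, weights, bias)
        # d/dh log sigmoid(w . h + b) = (1 - p) * w
        steered = [h + step_size * (1.0 - p) * w
                   for h, w in zip(steered, weights)]
    return steered


# Hypothetical hidden state and attribute-model parameters.
hidden = [0.2, -0.4, 0.1]
weights = [1.0, -2.0, 0.5]
bias = -1.0

before = attribute_prob(hidden, weights, bias)
steered = steer_hidden(hidden, weights, bias)
after = attribute_prob(steered, weights, bias)
print(f"attribute probability before: {before:.3f}, after: {after:.3f}")
```

Swapping in a different attribute model here only means passing different `weights` and `bias`; the steering loop itself is unchanged, which mirrors the claim that no attribute-specific training of the generator is needed.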
