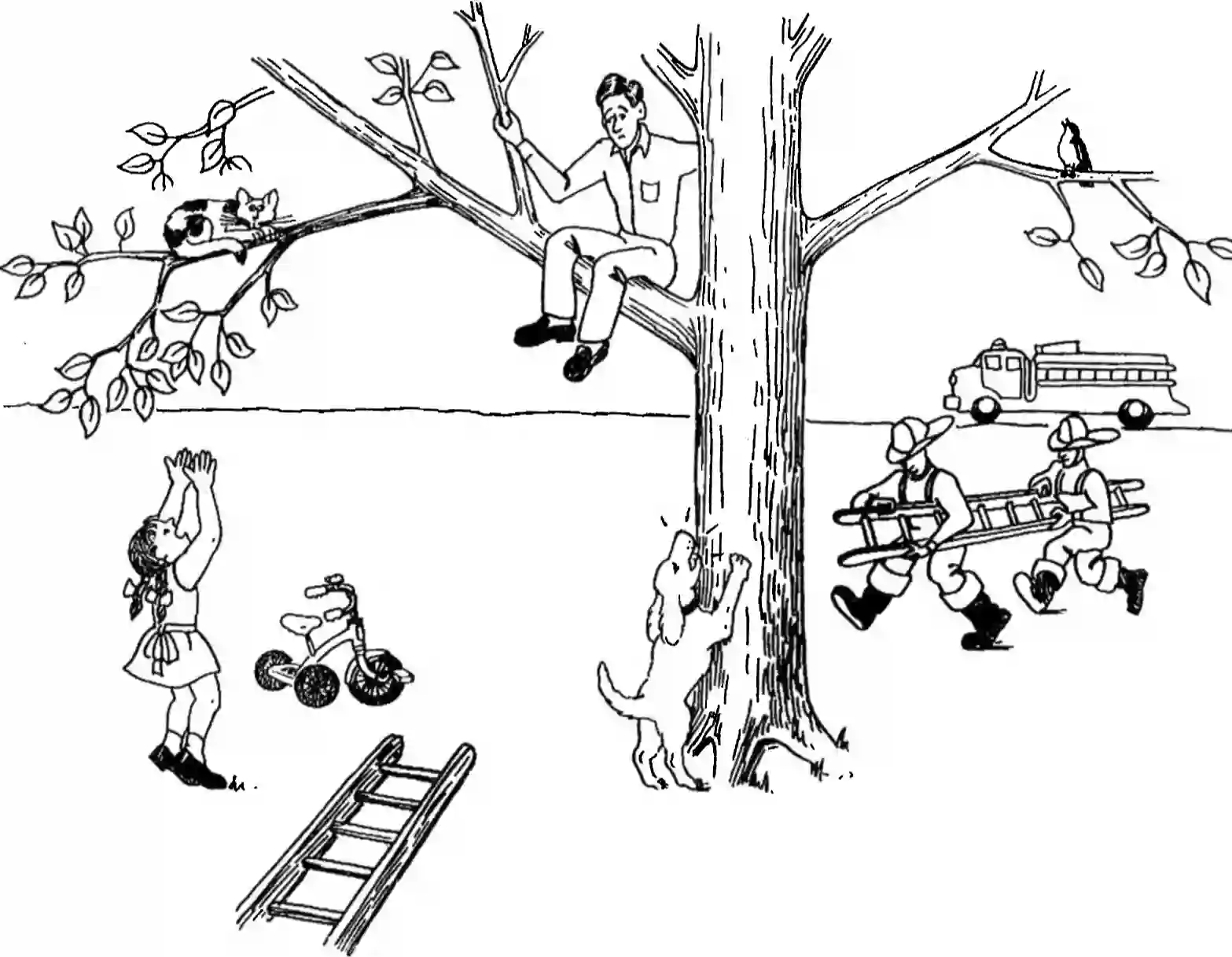

In aphasia research, speech-language pathologists (SLPs) devote extensive time to manually coding speech samples using Correct Information Units (CIUs), a measure of how informative a speech sample is. The development of automated systems to recognize aphasic language is limited by data scarcity: only about 600 transcripts are available in AphasiaBank, whereas large language models (LLMs) are trained on billions of tokens. In the broader field of machine learning (ML), researchers increasingly turn to synthetic data when real data are scarce. This study therefore constructs and validates two methods for generating synthetic transcripts of the AphasiaBank Cat Rescue picture description task. One method takes a procedural programming approach, while the second uses the Mistral 7b Instruct and Llama 3.1 8b Instruct LLMs. Both methods generate transcripts across four severity levels (Mild, Moderate, Severe, Very Severe) through word dropping, filler insertion, and paraphasia substitution. Compared with human-elicited transcripts, Mistral 7b Instruct best captures key aspects of the linguistic degradation observed in aphasia among the synthetic generation methods, showing realistic directional changes in number of different words (NDW), word count, and word length. Based on these results, future work should build a larger dataset, fine-tune models to better represent aphasic language, and have SLPs assess the realism and usefulness of the synthetic transcripts.
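To make the three degradation operations and the reported metrics concrete, the following is a minimal Python sketch of what a procedural generator of this kind might look like. It is not the study's implementation: the per-severity probabilities, the filler list, the substitution table, and the function names `degrade` and `metrics` are all assumptions chosen for illustration.

```python
import random

# Hypothetical per-severity probabilities; the abstract gives no values.
SEVERITY_PARAMS = {
    "Mild":        {"drop": 0.05, "filler": 0.05, "paraphasia": 0.02},
    "Moderate":    {"drop": 0.15, "filler": 0.10, "paraphasia": 0.05},
    "Severe":      {"drop": 0.30, "filler": 0.20, "paraphasia": 0.10},
    "Very Severe": {"drop": 0.50, "filler": 0.30, "paraphasia": 0.20},
}

# Illustrative filler words and a toy paraphasia table (assumptions).
FILLERS = ["um", "uh", "er"]
PARAPHASIAS = {"cat": "dog", "tree": "bush", "ladder": "stairs", "girl": "boy"}


def degrade(text, severity, seed=None):
    """Apply word dropping, filler insertion, and paraphasia substitution."""
    rng = random.Random(seed)  # local RNG so results are reproducible per seed
    p = SEVERITY_PARAMS[severity]
    out = []
    for word in text.lower().split():
        if rng.random() < p["drop"]:
            continue  # word dropping
        if rng.random() < p["filler"]:
            out.append(rng.choice(FILLERS))  # filler inserted before the word
        if word in PARAPHASIAS and rng.random() < p["paraphasia"]:
            word = PARAPHASIAS[word]  # paraphasia substitution
        out.append(word)
    return " ".join(out)


def metrics(transcript):
    """Word count, number of different words (NDW), and mean word length."""
    words = transcript.split()
    n = len(words)
    return {
        "word_count": n,
        "ndw": len(set(words)),
        "mean_word_length": sum(len(w) for w in words) / n if n else 0.0,
    }


if __name__ == "__main__":
    source = "the girl is calling because the cat is stuck in the tree"
    for level in SEVERITY_PARAMS:
        sample = degrade(source, level, seed=0)
        print(level, "|", sample, "|", metrics(sample))
```

In this sketch, fillers are counted as words by `metrics`, so inserted fillers raise the word count; whether the study counts them the same way is not stated in the abstract.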
