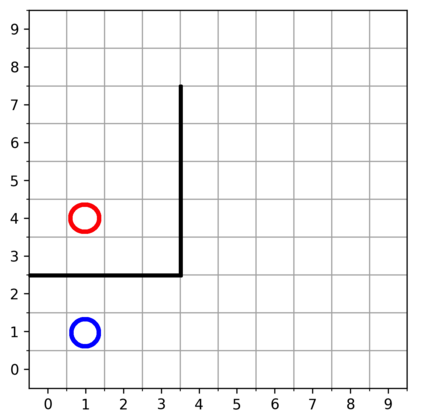

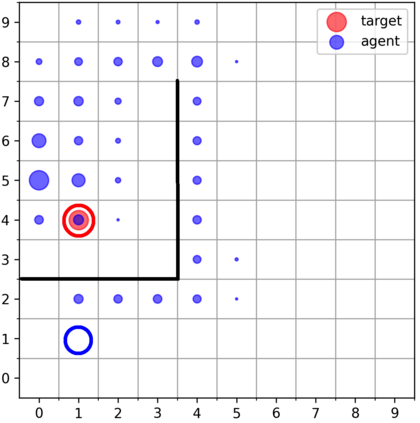

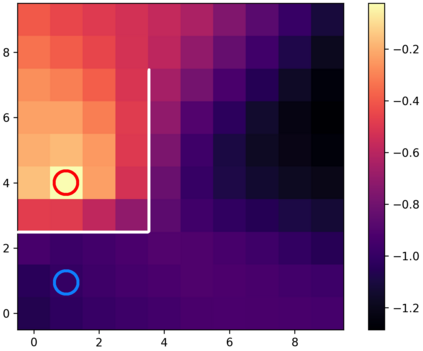

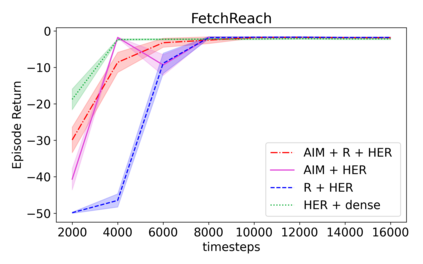

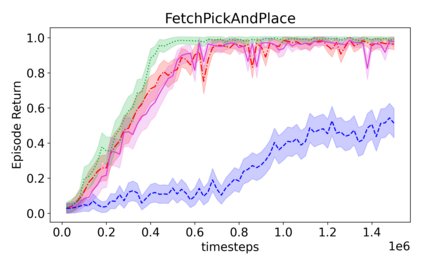

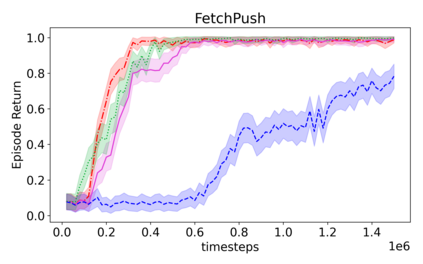

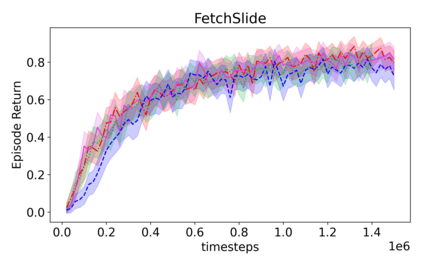

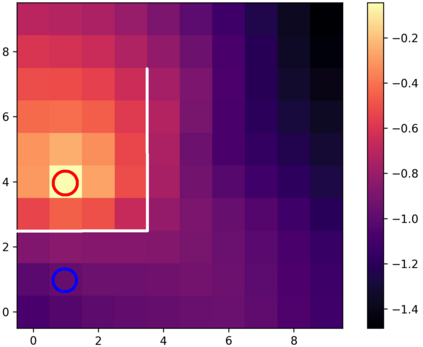

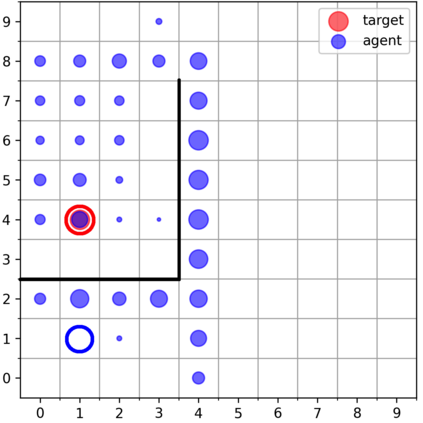

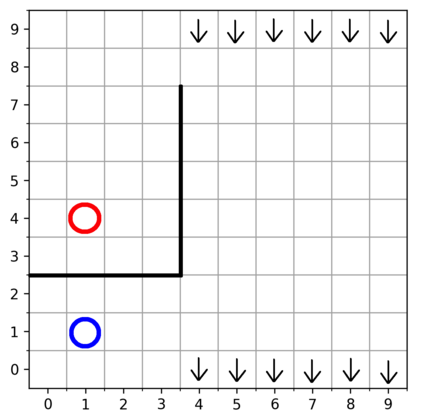

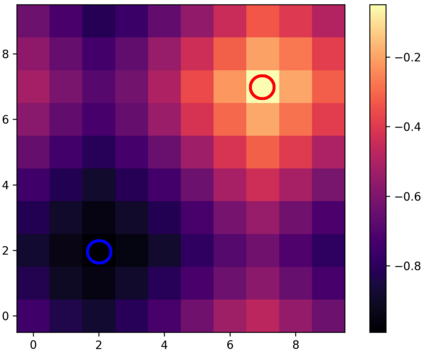

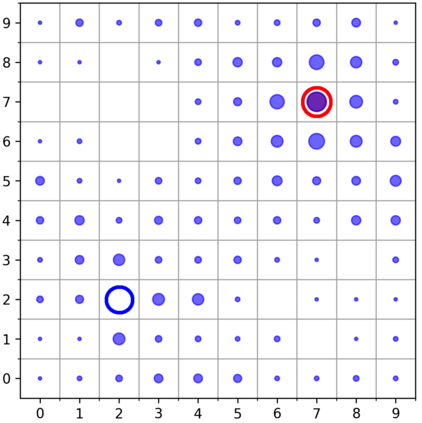

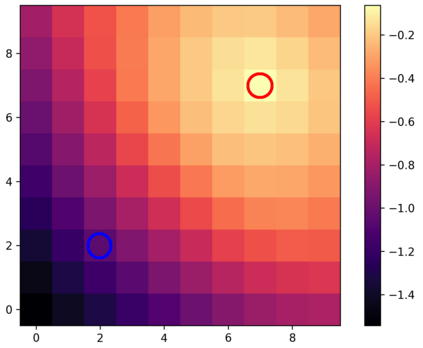

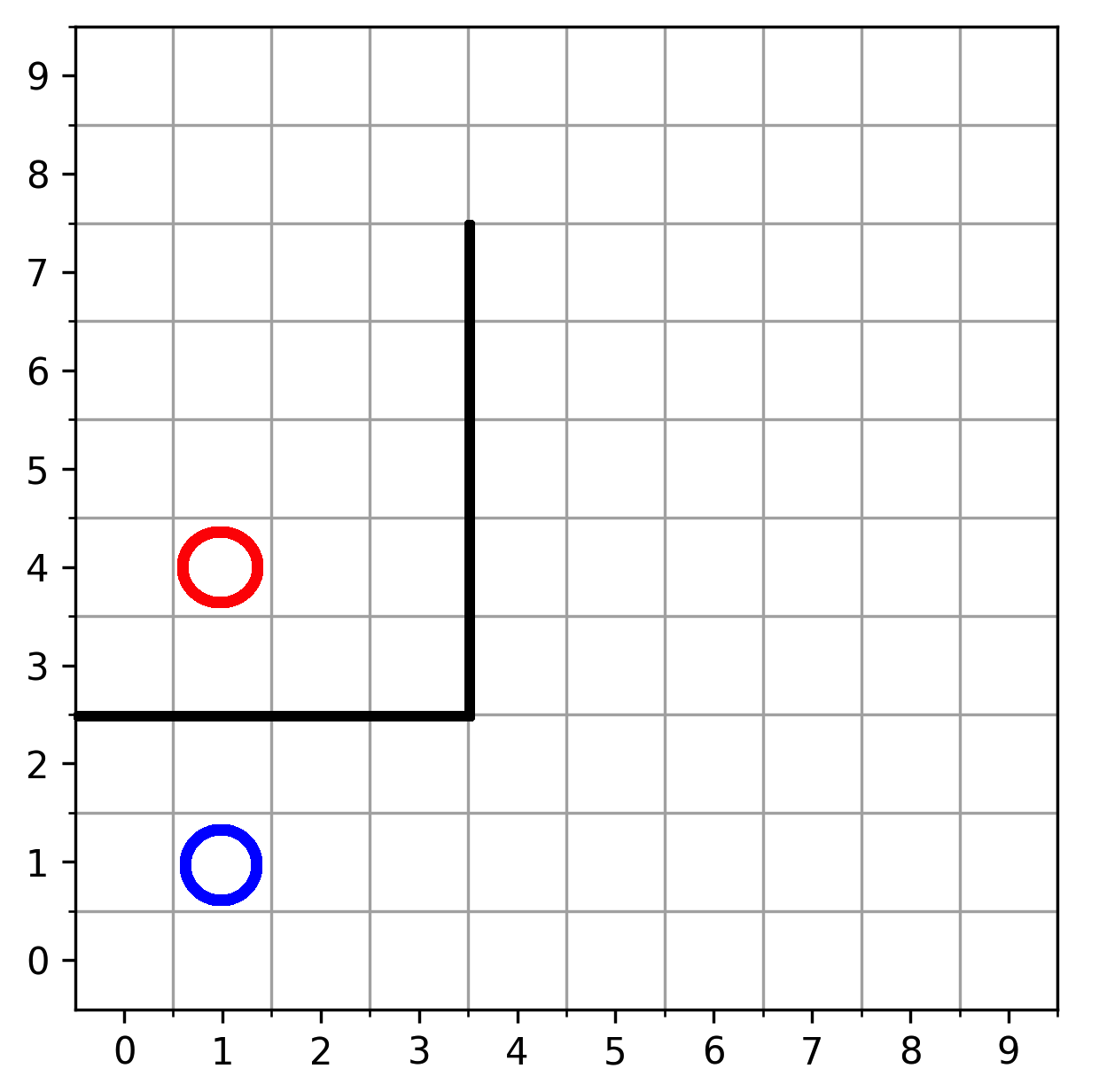

Learning with an objective function that seeks to minimize the mismatch with a reference distribution has been shown to be useful for generative modeling and imitation learning. In this paper, we investigate whether one such objective, the Wasserstein-1 distance between a policy's state visitation distribution and a target distribution, can be utilized effectively for reinforcement learning (RL) tasks. Specifically, this paper focuses on goal-conditioned reinforcement learning where the idealized (unachievable) target distribution has all the probability mass at the goal. We introduce a quasimetric specific to Markov Decision Processes (MDPs), and show that the policy that minimizes the Wasserstein-1 distance of its state visitation distribution to this target distribution under this quasimetric is the policy that reaches the goal in as few steps as possible. Our approach, termed Adversarial Intrinsic Motivation (AIM), estimates this Wasserstein-1 distance through its dual objective and uses it to compute a supplemental reward function. Our experiments show that this reward function changes smoothly with respect to transitions in the MDP and assists the agent in learning. Additionally, we combine AIM with Hindsight Experience Replay (HER) and show that the resulting algorithm accelerates learning significantly on several simulated robotics tasks when compared to HER with a sparse positive reward at the goal state.

翻译:在本文中,我们调查这样一个目标,即政策国家访问分布与目标分布之间的瓦瑟斯坦-1距离,是否可以有效地用于强化学习任务。具体地说,本文件侧重于目标条件强化学习,即理想化(无法实现)目标分布具有目标所有概率的强化学习。我们引入了针对Markov决策程序(MDPs)的准参数,并表明在这种准度下尽量减少其州访问分布与目标分布之间的瓦瑟斯坦-1距离的政策是尽可能少步骤达到目标的政策。我们的方法,即Aversarial Intrinsic Motition(AIM),通过其双重目标估计瓦瑟斯坦-1距离,并使用它来计算补充奖励功能。我们的实验表明,这一奖励功能与MDP的过渡相比变化顺利,并协助代理人学习。此外,我们把AIM与HERIM的州访问分布与高超速的模拟学习任务结合起来,在高超速的模型上显示甚高超速的智能模拟任务。