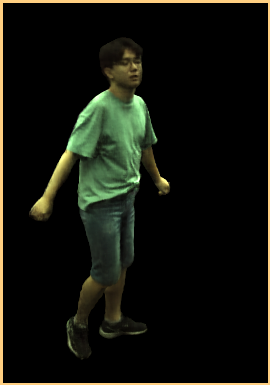

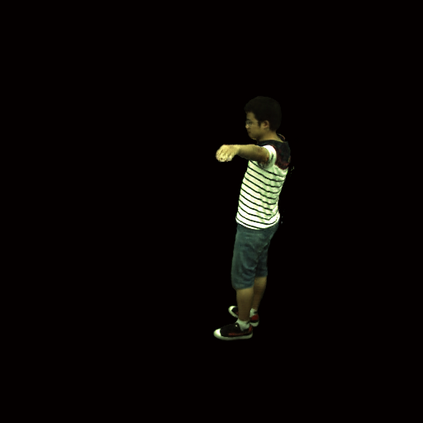

This paper introduces a novel representation of volumetric videos for real-time view synthesis of dynamic scenes. Recent advances in neural scene representations demonstrate their remarkable capability to model and render complex static scenes, but extending them to represent dynamic scenes is not straightforward due to their slow rendering speed or high storage cost. To solve this problem, our key idea is to represent the radiance field of each frame as a set of shallow MLP networks whose parameters are stored in 2D grids, called MLP maps, and dynamically predicted by a 2D CNN decoder shared by all frames. Representing 3D scenes with shallow MLPs significantly improves the rendering speed, while dynamically predicting MLP parameters with a shared 2D CNN instead of explicitly storing them leads to low storage cost. Experiments show that the proposed approach achieves state-of-the-art rendering quality on the NHR and ZJU-MoCap datasets, while being efficient for real-time rendering with a speed of 41.7 fps for $512 \times 512$ images on an RTX 3090 GPU. The code is available at https://zju3dv.github.io/mlp_maps/.
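
The core idea can be illustrated with a minimal NumPy sketch. It is not the authors' implementation: the single linear layer `decode_mlp_map` stands in for the shared 2D CNN decoder, and the single xy-plane, the grid size, the MLP width, the per-frame latent vector, and all function names are assumptions made for illustration only. It shows how a 2D grid of predicted parameters can be read as a set of small MLPs, each evaluated only for the 3D points that project into its cell.

```python
import numpy as np

# Hypothetical sizes for one shallow MLP: 3D point in, (density, r, g, b) out.
IN_DIM, HIDDEN, OUT_DIM = 3, 8, 4
N_PARAMS = IN_DIM * HIDDEN + HIDDEN + HIDDEN * OUT_DIM + OUT_DIM


def decode_mlp_map(latent, proj, grid):
    """Stand-in for the shared 2D CNN decoder (here just one linear layer).

    Maps a per-frame latent vector to a (grid, grid, N_PARAMS) array in which
    each cell holds the flattened parameters of one small MLP.
    """
    return (proj @ latent).reshape(grid, grid, N_PARAMS)


def query(mlp_map, point):
    """Evaluate density and color at a 3D point in [0, 1)^3."""
    grid = mlp_map.shape[0]
    # Project the point onto the xy-plane to pick the cell (and thus the MLP).
    i = min(int(point[0] * grid), grid - 1)
    j = min(int(point[1] * grid), grid - 1)
    p = mlp_map[i, j]

    # Unpack the flat parameter vector into two layers.
    o = 0
    w1 = p[o:o + IN_DIM * HIDDEN].reshape(IN_DIM, HIDDEN)
    o += IN_DIM * HIDDEN
    b1 = p[o:o + HIDDEN]
    o += HIDDEN
    w2 = p[o:o + HIDDEN * OUT_DIM].reshape(HIDDEN, OUT_DIM)
    o += HIDDEN * OUT_DIM
    b2 = p[o:o + OUT_DIM]

    # Shallow MLP: one ReLU hidden layer, then density and RGB heads.
    h = np.maximum(point @ w1 + b1, 0.0)
    out = h @ w2 + b2
    density = np.maximum(out[0], 0.0)       # non-negative density
    rgb = 1.0 / (1.0 + np.exp(-out[1:]))    # colors squashed into (0, 1)
    return density, rgb


# Usage: one frame is described only by its latent vector; the decoder
# weights (proj) are shared by all frames.
rng = np.random.default_rng(0)
GRID, LATENT_DIM = 4, 16
proj = rng.normal(scale=0.1, size=(GRID * GRID * N_PARAMS, LATENT_DIM))
latent = rng.normal(size=LATENT_DIM)

mlp_map = decode_mlp_map(latent, proj, GRID)
density, rgb = query(mlp_map, np.array([0.3, 0.7, 0.5]))
```

Under these assumptions, what has to be stored per frame is the latent vector rather than the full `(GRID, GRID, N_PARAMS)` map, which is the intuition behind the low storage cost claimed above; the shallow per-cell MLP is what keeps each point query cheap.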
