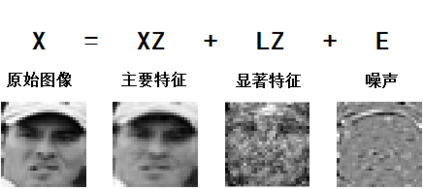

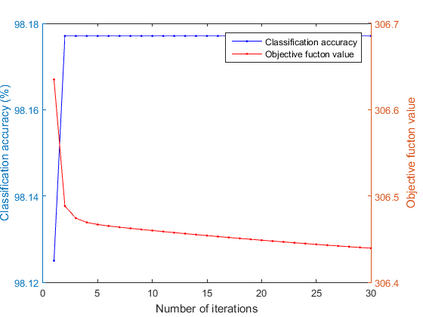

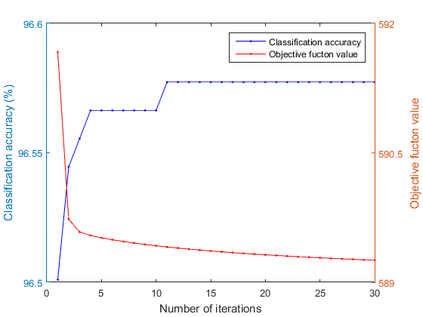

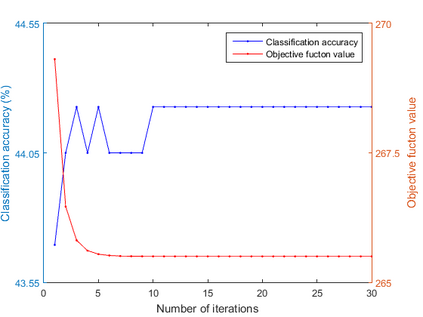

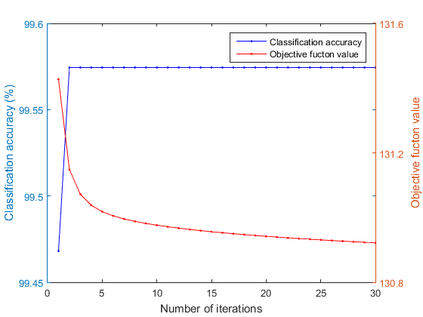

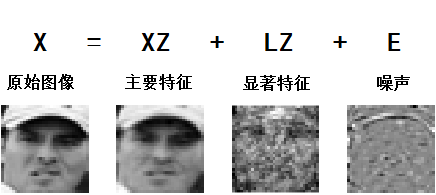

Linear regression is a supervised method that has been widely used in classification tasks. To apply linear regression to classification, a technique for relaxing the regression targets was proposed. However, methods based on this technique ignore the burden that the complex information contained in the data places on a single transformation matrix. In this setting a single transformation matrix is too strict to provide a flexible projection, so the transformation matrix itself must also be relaxed. This paper proposes a double transformation matrix learning method based on latent low-rank feature extraction. The core idea is to use two transformation matrices for relaxation and to jointly project the learned principal and salient features from two directions into the label space, which shares the burden otherwise carried by a single transformation matrix. First, low-rank features are learned by the latent low-rank representation (LatLRR) method, which processes the original data from two directions. In this process sparse noise is also separated, which alleviates its interference with projection learning to some extent. Then, two transformation matrices are introduced to process the two kinds of features separately and extract the information useful for classification. Finally, the two transformation matrices are obtained by alternating optimization. With this processing, even when samples contain a large amount of redundant information, our method can still obtain projection results that are easy to classify. Experiments on multiple data sets demonstrate the effectiveness of our approach for classification, especially in complex scenarios.
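The alternating scheme mentioned in the abstract can be illustrated with a minimal sketch. The abstract does not state the paper's objective function, so the code below assumes a simplified stand-in: a ridge-regularized least-squares problem, min ||Y - A P - B S||_F^2 + lam (||A||_F^2 + ||B||_F^2), where P and S play the role of the principal and salient features. LatLRR itself is not implemented here; random low-rank matrices serve only as placeholders for its output. The function name `fit_double_projection`, the parameter `lam`, and all variable names are hypothetical.

```python
import numpy as np


def fit_double_projection(P, S, Y, lam=1.0, n_iter=20):
    """Alternately update two projection matrices A and B.

    Assumed objective (a simplification, not the paper's exact model):
        ||Y - A P - B S||_F^2 + lam * (||A||_F^2 + ||B||_F^2)
    P, S: feature matrices with one column per sample.
    Y:    one-hot label matrix, shape (classes, samples).
    """
    A = np.zeros((Y.shape[0], P.shape[0]))
    B = np.zeros((Y.shape[0], S.shape[0]))
    gram_p = P @ P.T + lam * np.eye(P.shape[0])
    gram_s = S @ S.T + lam * np.eye(S.shape[0])
    history = []
    for _ in range(n_iter):
        # Fix B: ridge regression of the residual (Y - B S) on P.
        A = np.linalg.solve(gram_p, P @ (Y - B @ S).T).T
        # Fix A: ridge regression of the residual (Y - A P) on S.
        B = np.linalg.solve(gram_s, S @ (Y - A @ P).T).T
        residual = Y - A @ P - B @ S
        history.append(np.sum(residual**2) + lam * (np.sum(A**2) + np.sum(B**2)))
    return A, B, history


# Placeholder data: 10-dimensional features, 30 samples, 3 classes.
rng = np.random.default_rng(0)
d, n, c = 10, 30, 3
X = rng.standard_normal((d, n))
# Random low-rank stand-ins for the two LatLRR factors (assumption).
Z = rng.standard_normal((n, 4)) @ rng.standard_normal((4, n)) / n
L = rng.standard_normal((d, 4)) @ rng.standard_normal((4, d)) / d
P, S = X @ Z, L @ X                 # "principal" and "salient" features
labels = rng.integers(0, c, size=n)
Y = np.eye(c)[labels].T             # one-hot labels, shape (c, n)

A, B, history = fit_double_projection(P, S, Y)
predicted = np.argmax(A @ P + B @ S, axis=0)
```

Because each update solves its subproblem exactly while the other matrix is held fixed, the recorded objective values in `history` should not increase from one sweep to the next. That monotone behaviour is the reason alternating updates are a natural fit when two matrices share one fitting target.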
