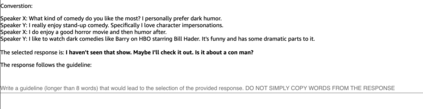

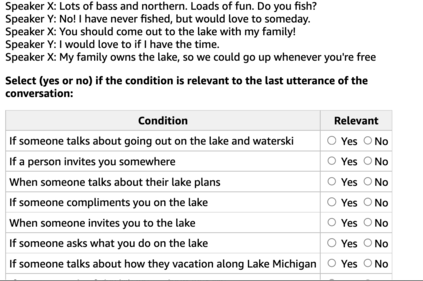

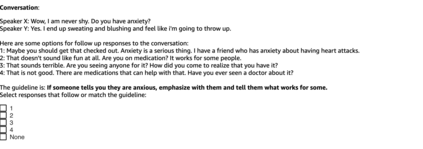

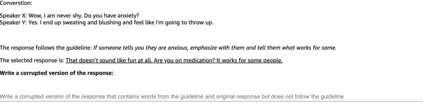

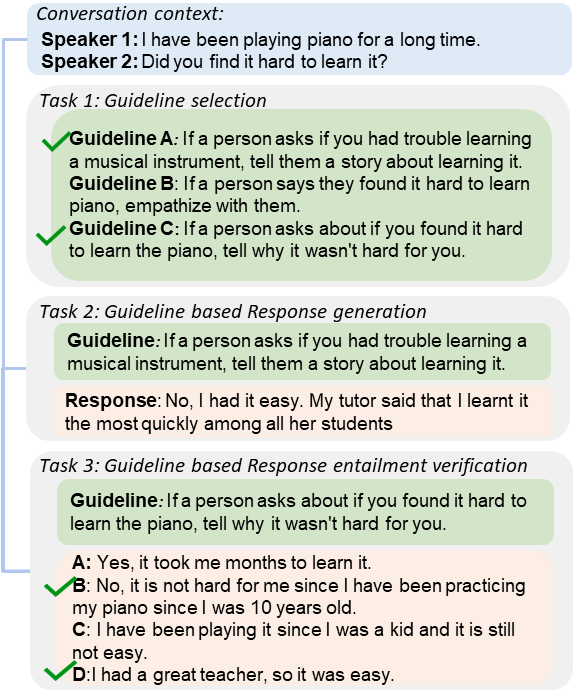

Dialogue models are able to generate coherent and fluent responses, but they can still be challenging to control and may produce unengaging or unsafe results. This unpredictability diminishes user trust and can hinder the use of such models in the real world. To address this, we introduce DialGuide, a novel framework for controlling dialogue model behavior using natural language rules, or guidelines. Each guideline specifies the context it applies to and what should be included in the response, allowing models to generate responses that are more closely aligned with the developer's expectations and intent. We evaluate DialGuide on three tasks in open-domain dialogue response generation: guideline selection, response generation, and response entailment verification. Our dataset contains 10,737 positive and 15,467 negative dialogue context-response-guideline triplets across two domains: chit-chat and safety. We provide baseline models for these tasks and benchmark their performance. We also demonstrate that DialGuide is effective in the dialogue safety domain, producing safe and engaging responses that follow developer guidelines.
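The abstract names three tasks and a triplet-based dataset. One plausible way to picture how they fit together is to chain the tasks at inference time: select the guidelines that apply to the context, generate a response conditioned on each, then keep only responses verified to follow their guideline. The sketch below is a minimal, hypothetical illustration of that reading, not the authors' code. It assumes each guideline splits into a `condition` and an `action`. The names `Guideline`, `Triplet`, `respond`, and the `toy_*` functions are all invented here, and the toy functions use plain substring matching where the framework would use trained models.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass(frozen=True)
class Guideline:
    """Hypothetical schema: a rule with a context condition and a required behavior."""
    condition: str  # when the guideline applies
    action: str     # what the response should include or do


@dataclass(frozen=True)
class Triplet:
    """Hypothetical context-response-guideline example with a follow/violate label."""
    context: List[str]
    response: str
    guideline: Guideline
    positive: bool  # True if the response follows the guideline


# Dataset size as reported in the abstract: 10,737 positive + 15,467 negative.
TOTAL_TRIPLETS = 10_737 + 15_467  # 26,204

Selector = Callable[[List[str], List[Guideline]], List[Guideline]]
Generator = Callable[[List[str], Guideline], str]
Verifier = Callable[[List[str], Guideline, str], bool]


def respond(context: List[str], guidelines: List[Guideline],
            select: Selector, generate: Generator, verify: Verifier) -> List[str]:
    """Assumed chaining of the three tasks; returns only verified responses."""
    kept = []
    for g in select(context, guidelines):  # task 1: guideline selection
        r = generate(context, g)           # task 2: guideline-conditioned generation
        if verify(context, g, r):          # task 3: response entailment verification
            kept.append(r)
    return kept


# Toy stand-ins for the three models (substring matching, not trained models).
def toy_select(context, guidelines):
    last = context[-1].lower()
    return [g for g in guidelines if g.condition in last]


def toy_generate(context, g):
    return f"Thanks for sharing! {g.action}"


def toy_verify(context, g, response):
    return g.action in response


rules = [Guideline("vacation", "Ask where they went."),
         Guideline("sad", "Offer support.")]
print(respond(["Hi!", "I just got back from vacation."], rules,
              toy_select, toy_generate, toy_verify))
# prints ['Thanks for sharing! Ask where they went.']
```

Keeping selection, generation, and verification as separate callables mirrors the abstract's framing of them as distinct tasks: any one stage can be swapped for a stronger model without touching the others.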

翻译:对话模式能够产生一致和流畅的反应,但是它们仍然对控制具有挑战性,并可能产生非参与、不安全的结果。这种不可预测性会降低用户信任度,并会妨碍模型在现实世界中的使用。为了解决这个问题,我们引入了DialGuide,这是一个利用自然语言规则或准则来控制对话模式行为的新框架。这些指导方针提供了它们适用于哪些背景和哪些内容应纳入反应中的信息,使模型能够产生更符合开发者期望和意图的响应。我们评估了公开对话响应生成的三项任务的 DialGuide:准则选择、反应生成和反应要求核查。我们的数据集包含10,737正和15,467个负面对话环境-反应-指南三重线,横跨两个领域――奇特聊天和安全。我们为任务提供了基准模型并衡量其业绩。我们还证明DialGuide在对话安全领域是有效的,产生符合开发者指导方针的安全和参与性反应。