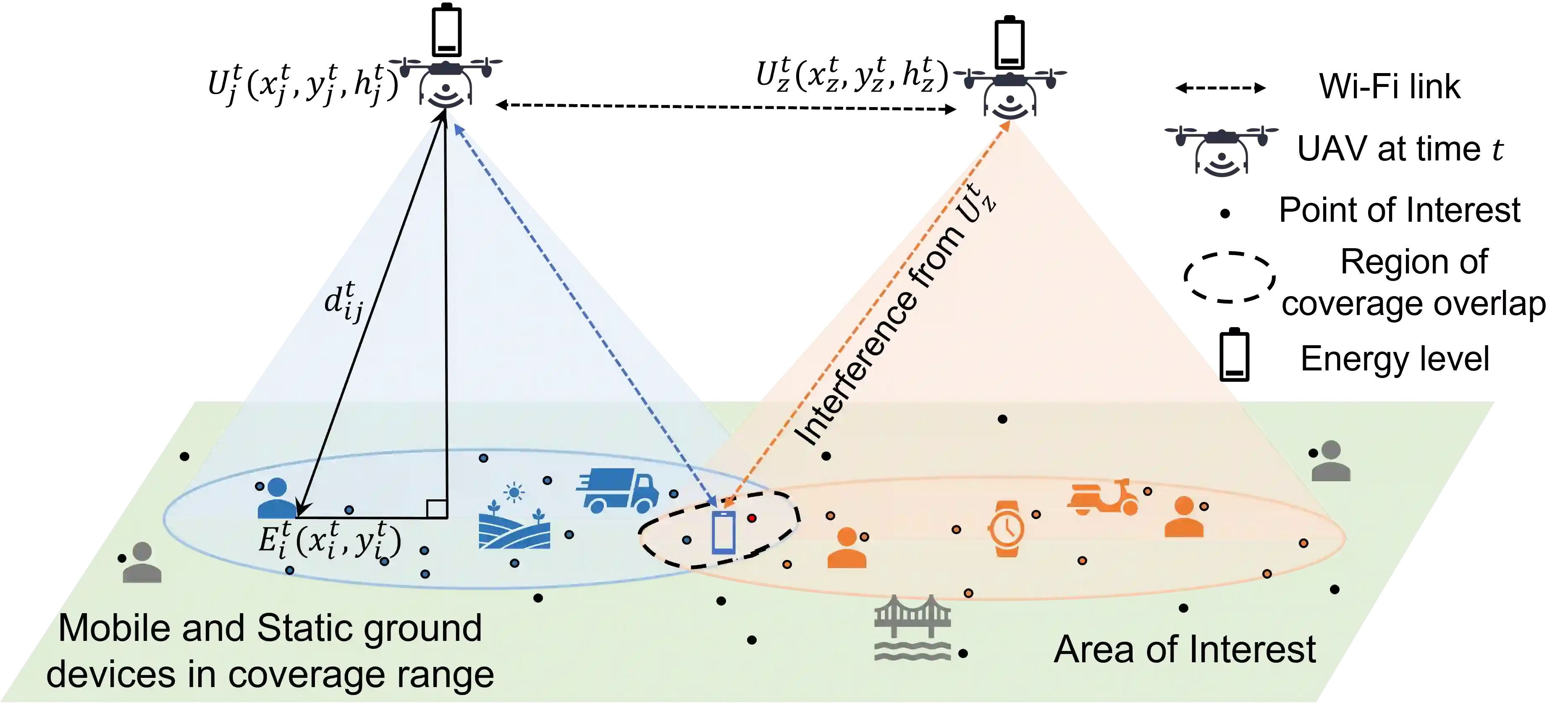

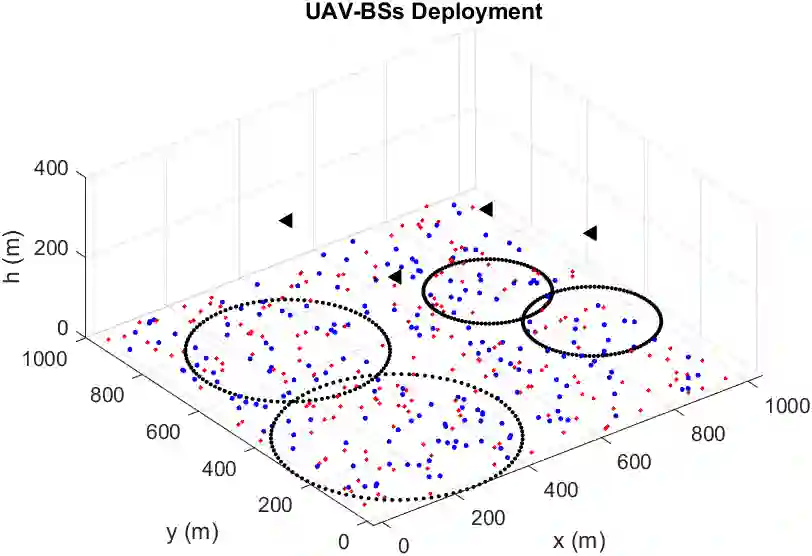

Unmanned aerial vehicles serving as aerial base stations (UAV-BSs) can be deployed to provide wireless connectivity to ground devices during periods of increased network demand, at points of failure in existing infrastructure, or after disasters. However, conserving UAV energy during prolonged coverage tasks is challenging because of the UAVs' limited on-board battery capacity. Reinforcement learning (RL)-based approaches have previously been used to improve the energy utilization of multiple UAVs; however, they assume a central cloud controller with complete knowledge of the end devices' locations, i.e., the controller periodically scans the area and sends updates for UAV decision-making. This assumption is impractical in dynamic network environments where UAVs serve mobile ground devices. To address this problem, we propose a decentralized Q-learning approach in which each UAV-BS is equipped with an autonomous agent that maximizes the connectivity of mobile ground devices while improving its own energy utilization. Experimental results show that the proposed design significantly outperforms centralized approaches in jointly maximizing the number of connected ground devices and the energy utilization of the UAV-BSs.
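To make the idea of one autonomous Q-learning agent per UAV-BS more concrete, the sketch below shows independent tabular Q-learners that trade off coverage against energy. It is a minimal illustration under assumptions that are not from the abstract: a 5x5 grid world, five actions (hover or move one cell), a Chebyshev coverage radius of one cell, hypothetical energy costs (`HOVER_ENERGY`, `MOVE_ENERGY`), a hypothetical weight `ENERGY_WEIGHT`, a random-walk mobility model for ground devices, and a local state consisting only of the UAV's own cell. It is not the authors' state, action, or reward design.

```python
import random

# Hypothetical discrete world (not specified in the abstract): a GRID x GRID
# area; each UAV-BS either hovers or moves one cell per time slot.
ACTIONS = [(0, 0), (0, 1), (0, -1), (1, 0), (-1, 0)]  # hover, N, S, E, W
GRID = 5
COVERAGE_RADIUS = 1   # assumed coverage: Chebyshev distance in cells
HOVER_ENERGY = 0.5    # assumed energy units per slot while hovering
MOVE_ENERGY = 1.0     # assumed energy units per slot while moving
ENERGY_WEIGHT = 0.5   # assumed trade-off between coverage and energy


def clamp(v):
    """Keep a coordinate inside the grid."""
    return min(max(v, 0), GRID - 1)


def connected_count(uav_pos, devices):
    """Devices within coverage; assumed to be measurable locally by the UAV."""
    return sum(
        1 for (x, y) in devices
        if max(abs(x - uav_pos[0]), abs(y - uav_pos[1])) <= COVERAGE_RADIUS
    )


def reward(uav_pos, devices, moved):
    """Assumed reward: connected devices minus a weighted energy cost."""
    energy = MOVE_ENERGY if moved else HOVER_ENERGY
    return connected_count(uav_pos, devices) - ENERGY_WEIGHT * energy


class UAVAgent:
    """Independent tabular Q-learner; each UAV-BS runs its own copy."""

    def __init__(self, start, alpha=0.1, gamma=0.9, epsilon=0.1, seed=None):
        self.pos = start
        self.alpha, self.gamma, self.epsilon = alpha, gamma, epsilon
        self.q = {}  # (state, action index) -> estimated value
        self.rng = random.Random(seed)

    def q_value(self, state, a):
        return self.q.get((state, a), 0.0)

    def choose_action(self, state):
        # Epsilon-greedy over this agent's own Q-table; no central controller.
        if self.rng.random() < self.epsilon:
            return self.rng.randrange(len(ACTIONS))
        return max(range(len(ACTIONS)), key=lambda a: self.q_value(state, a))

    def step(self, devices):
        state = self.pos  # local state: the UAV's own grid cell (assumption)
        a = self.choose_action(state)
        dx, dy = ACTIONS[a]
        self.pos = (clamp(state[0] + dx), clamp(state[1] + dy))
        r = reward(self.pos, devices, moved=self.pos != state)
        best_next = max(self.q_value(self.pos, b) for b in range(len(ACTIONS)))
        # Q-learning update: Q(s,a) += alpha * (r + gamma * max_b Q(s',b) - Q(s,a))
        old = self.q_value(state, a)
        self.q[(state, a)] = old + self.alpha * (r + self.gamma * best_next - old)
        return r


def random_walk(devices, rng):
    """Assumed mobility model: each ground device takes one random step."""
    moved = []
    for (x, y) in devices:
        dx, dy = rng.choice(ACTIONS)
        moved.append((clamp(x + dx), clamp(y + dy)))
    return moved


if __name__ == "__main__":
    rng = random.Random(0)
    devices = [(rng.randrange(GRID), rng.randrange(GRID)) for _ in range(8)]
    agents = [UAVAgent((0, 0), seed=1), UAVAgent((GRID - 1, GRID - 1), seed=2)]
    for _ in range(2000):
        devices = random_walk(devices, rng)
        for agent in agents:
            agent.step(devices)
    print("final UAV positions:", [agent.pos for agent in agents])
```

In this sketch, no agent ever reads the device positions directly: each one sees only its own cell and a locally measured count of connected devices, which is the property that removes the need for a central controller that periodically scans device locations. A real design would likely use a richer local state and a measured energy model in place of the fixed per-slot costs assumed here.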

翻译:在网络需求增加、现有基础设施出现故障或发生灾害时,可部署无人驾驶飞行器作为空基站(UAV-BS),为地面装置提供无线连接;然而,考虑到无人驾驶飞行器在机载电池容量有限,在长时间的覆盖任务中保护无人驾驶飞行器的能源具有挑战性;以往曾使用强化学习(RL)方法来改进多架无人驾驶飞行器的能源利用;然而,假设中央云控制器完全了解最终装置的位置,即控制器定期扫描和为无人驾驶飞行器决策发送最新消息,但这一假设在动态网络环境中不切实际,无人驾驶飞行器为移动地面装置服务;为解决这一问题,我们提议采用分散式的Q学习方法,即每个无人驾驶飞行器配备一个自主代理器,最大限度地扩大移动地面装置的连通性,同时提高其能源利用率。实验结果表明,拟议的设计大大超出了联合优化连接地面装置的数量和无人驾驶飞行器的能源利用的集中方法。