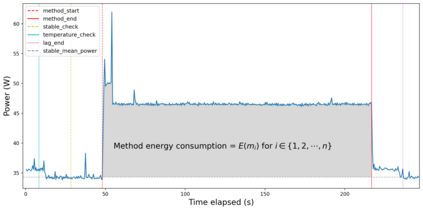

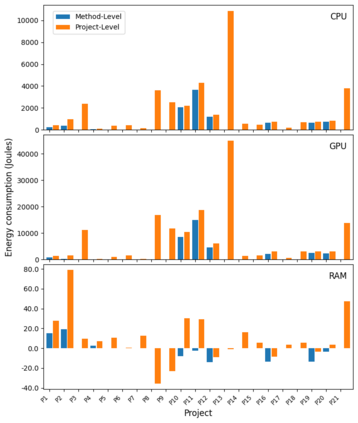

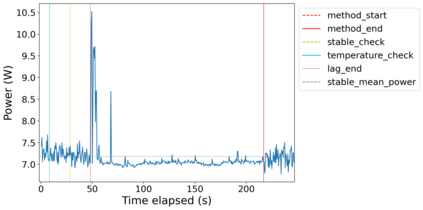

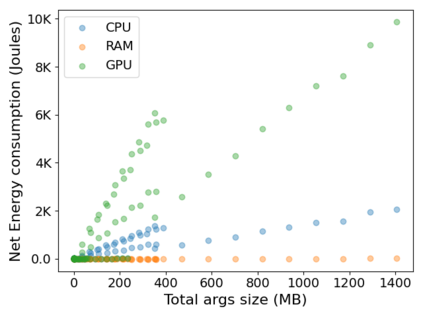

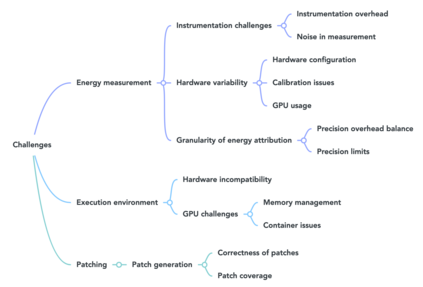

With the increasing usage, scale, and complexity of Deep Learning (DL) models, their rapidly growing energy consumption has become a critical concern. Promoting green development and energy awareness at different granularities is the need of the hour to limit carbon emissions of DL systems. However, the lack of standard and repeatable tools to accurately measure and optimize energy consumption at a fine granularity (e.g., at method level) hinders progress in this area. This paper introduces FECoM (Fine-grained Energy Consumption Meter), a framework for fine-grained DL energy consumption measurement. FECoM enables researchers and developers to profile DL APIs from energy perspective. FECoM addresses the challenges of measuring energy consumption at fine-grained level by using static instrumentation and considering various factors, including computational load and temperature stability. We assess FECoM's capability to measure fine-grained energy consumption for one of the most popular open-source DL frameworks, namely TensorFlow. Using FECoM, we also investigate the impact of parameter size and execution time on energy consumption, enriching our understanding of TensorFlow APIs' energy profiles. Furthermore, we elaborate on the considerations, issues, and challenges that one needs to consider while designing and implementing a fine-grained energy consumption measurement tool. This work will facilitate further advances in DL energy measurement and the development of energy-aware practices for DL systems.

翻译:暂无翻译